Search Results for author: Shirsha Bose

Found 6 papers, 1 paper with code

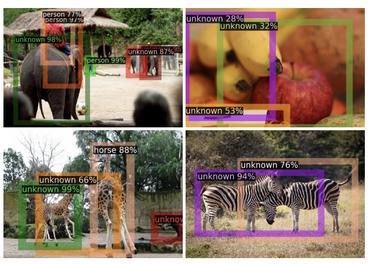

Finding Dino: A plug-and-play framework for unsupervised detection of out-of-distribution objects using prototypes

no code implementations • 11 Apr 2024 • Poulami Sinhamahapatra, Franziska Schwaiger, Shirsha Bose, Huiyu Wang, Karsten Roscher, Stephan Guennemann

The framework is an inference-based method that requires no training on the domain dataset; instead, it relies on relevant features extracted from self-supervised pre-trained models.

CDAD-Net: Bridging Domain Gaps in Generalized Category Discovery

no code implementations • 8 Apr 2024 • Sai Bhargav Rongali, Sarthak Mehrotra, Ankit Jha, Mohamad Hassan N C, Shirsha Bose, Tanisha Gupta, Mainak Singha, Biplab Banerjee

In Generalized Category Discovery (GCD), we cluster unlabeled samples of known and novel classes, leveraging a training dataset of known classes.

Unknown Prompt, the only Lacuna: Unveiling CLIP's Potential for Open Domain Generalization

no code implementations • 31 Mar 2024 • Mainak Singha, Ankit Jha, Shirsha Bose, Ashwin Nair, Moloud Abdar, Biplab Banerjee

Central to our approach is modeling a unique prompt tailored to detecting unknown-class samples; to train it, we use a readily available Stable Diffusion model to generate proxy images for the open class.

APPLeNet: Visual Attention Parameterized Prompt Learning for Few-Shot Remote Sensing Image Generalization using CLIP

1 code implementation • 12 Apr 2023 • Mainak Singha, Ankit Jha, Bhupendra Solanki, Shirsha Bose, Biplab Banerjee

APPLeNet emphasizes the importance of multi-scale feature learning in RS scene classification and disentangles visual style and content primitives for domain generalization tasks.

MultiScale Probability Map guided Index Pooling with Attention-based learning for Road and Building Segmentation

no code implementations • 18 Feb 2023 • Shirsha Bose, Ritesh Sur Chowdhury, Debabrata Pal, Shivashish Bose, Biplab Banerjee, Subhasis Chaudhuri

Efficient extraction of road and building footprints from satellite images plays a prominent role in many remote sensing applications.

StyLIP: Multi-Scale Style-Conditioned Prompt Learning for CLIP-based Domain Generalization

no code implementations • 18 Feb 2023 • Shirsha Bose, Ankit Jha, Enrico Fini, Mainak Singha, Elisa Ricci, Biplab Banerjee

Our method focuses on a domain-agnostic prompt learning strategy, aiming to disentangle the visual style and content information embedded in CLIP's pre-trained vision encoder, enabling effortless adaptation to novel domains during inference.