Search Results for author: Shuailei Ma

Found 7 papers, 3 papers with code

CoReS: Orchestrating the Dance of Reasoning and Segmentation

no code implementations • 8 Apr 2024 • Xiaoyi Bao, Siyang Sun, Shuailei Ma, Kecheng Zheng, Yuxin Guo, Guosheng Zhao, Yun Zheng, Xingang Wang

We believe that the act of reasoning segmentation should mirror the cognitive stages of human visual search, where each step is a progressive refinement of thought toward the final object.

DreamLIP: Language-Image Pre-training with Long Captions

no code implementations • 25 Mar 2024 • Kecheng Zheng, Yifei Zhang, Wei Wu, Fan Lu, Shuailei Ma, Xin Jin, Wei Chen, Yujun Shen

Motivated by this, we propose to dynamically sample sub-captions from the text label to construct multiple positive pairs, and introduce a grouping loss to match the embeddings of each sub-caption with its corresponding local image patches in a self-supervised manner.

Understanding the Multi-modal Prompts of the Pre-trained Vision-Language Model

no code implementations • 18 Dec 2023 • Shuailei Ma, Chen-Wei Xie, Ying WEI, Siyang Sun, Jiaqi Fan, Xiaoyi Bao, Yuxin Guo, Yun Zheng

In this paper, we conduct a direct analysis of the multi-modal prompts by asking the following questions: (i) How do the learned multi-modal prompts improve the recognition performance?

SKDF: A Simple Knowledge Distillation Framework for Distilling Open-Vocabulary Knowledge to Open-world Object Detector

1 code implementation • 14 Dec 2023 • Shuailei Ma, Yuefeng Wang, Ying WEI, Jiaqi Fan, Enming Zhang, Xinyu Sun, Peihao Chen

Ablation experiments demonstrate that both of them are effective in mitigating the impact of open-world knowledge distillation on the learning of known objects.

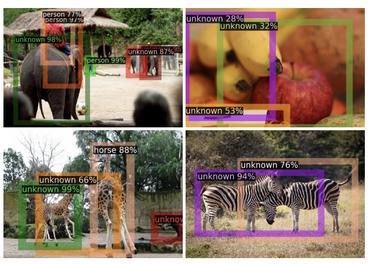

Detecting the open-world objects with the help of the Brain

1 code implementation • 21 Mar 2023 • Shuailei Ma, Yuefeng Wang, Ying WEI, Peihao Chen, Zhixiang Ye, Jiaqi Fan, Enming Zhang, Thomas H. Li

We propose leveraging the VL as the "Brain" of the open-world detector by simply generating unknown labels.

FGAHOI: Fine-Grained Anchors for Human-Object Interaction Detection

1 code implementation • 8 Jan 2023 • Shuailei Ma, Yuefeng Wang, Shanze Wang, Ying WEI

HSAM and TAM semantically align and merge the extracted features and query embeddings in the hierarchical spatial and task perspectives in turn.

Ranked #6 on Human-Object Interaction Detection on HICO-DET

CAT: LoCalization and IdentificAtion Cascade Detection Transformer for Open-World Object Detection

no code implementations • CVPR 2023 • Shuailei Ma, Yuefeng Wang, Jiaqi Fan, Ying WEI, Thomas H. Li, Hongli Liu, Fanbing Lv

Open-world object detection (OWOD), as a more general and challenging goal, requires the model trained from data on known objects to detect both known and unknown objects and incrementally learn to identify these unknown objects.