SKDF: A Simple Knowledge Distillation Framework for Distilling Open-Vocabulary Knowledge to Open-world Object Detector

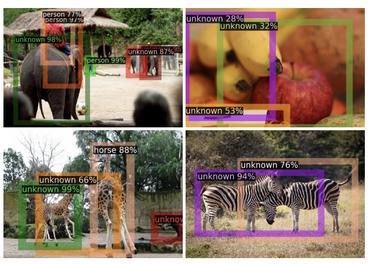

In this paper, we attempt to specialize a vision-language model (VLM) for open-world object detection (OWOD) tasks by distilling its open-world knowledge into a language-agnostic detector. Surprisingly, we observe that combining a simple \textbf{knowledge distillation} approach with the automatic pseudo-labeling mechanism in OWOD can achieve better performance for unknown object detection, even with a small amount of data. Unfortunately, knowledge distillation for unknown objects severely affects how detectors with conventional structures learn known objects, leading to catastrophic forgetting. To alleviate this problem, we propose the \textbf{down-weight loss function} for knowledge distillation from the vision-language modality to a single vision modality. We also propose the \textbf{cascade decouple decoding structure}, which decouples the learning of localization and recognition to reduce the impact of category interactions between known and unknown objects on the localization learning process. Ablation experiments demonstrate that both are effective in mitigating the impact of open-world knowledge distillation on the learning of known objects. Additionally, to address the current lack of comprehensive benchmarks for evaluating an open-world detector's ability to detect unknown objects, we propose two benchmarks, named "\textbf{StandardSet}$\heartsuit$" and "\textbf{IntensiveSet}$\spadesuit$" according to the complexity of their testing scenarios. Comprehensive experiments performed on OWOD, MS-COCO, and our proposed benchmarks demonstrate the effectiveness of our methods. The code and proposed dataset are available at \url{https://github.com/xiaomabufei/SKDF}.

MS COCO

LVIS