Convolutional Neural Networks

AmoebaNet

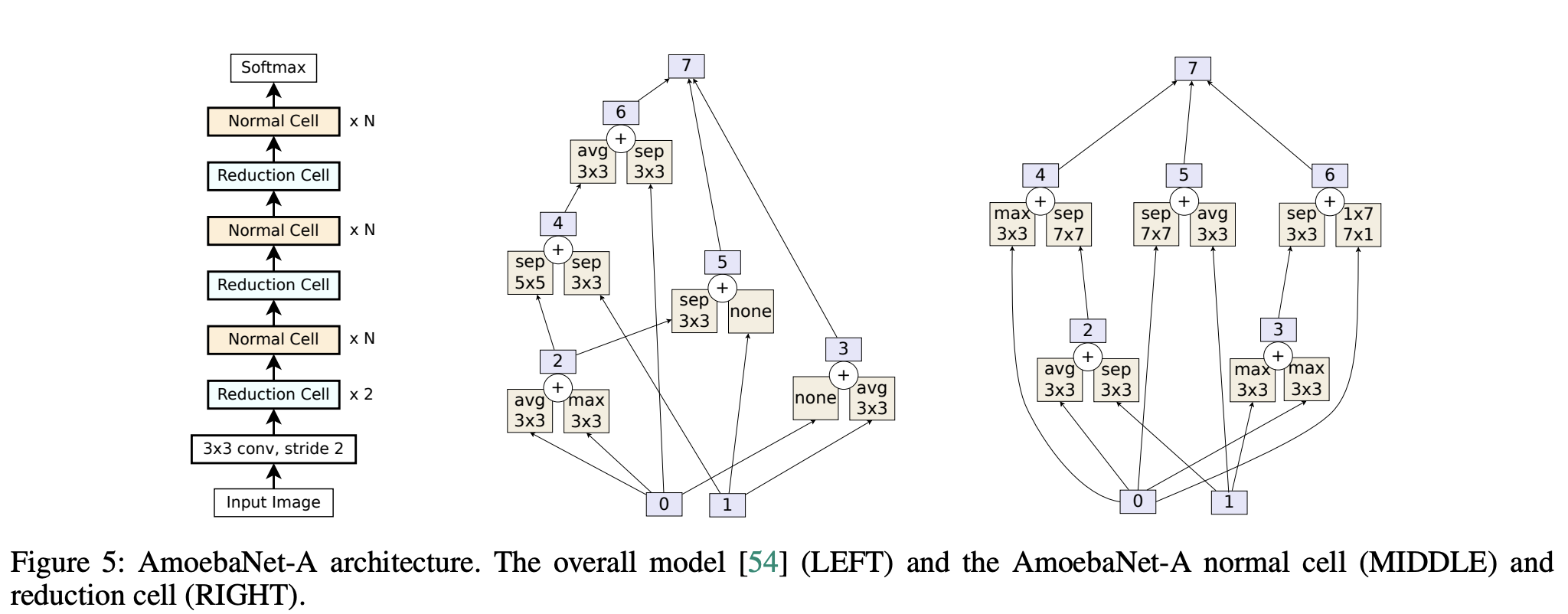

Introduced by Real et al. in Regularized Evolution for Image Classifier Architecture Search

AmoebaNet is a convolutional neural network found through regularized evolution architecture search. The search space is the NASNet search space, which specifies image classifiers with a fixed outer structure: a feed-forward stack of Inception-like modules called cells. The discovered architecture is shown in the figure above.
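To make the search procedure more concrete, here is a minimal sketch of regularized (aging) evolution: the population is kept as a queue, each cycle samples a few individuals, mutates the best of the sample, adds the child, and discards the oldest individual rather than the worst. Everything specific in it is an assumption for illustration: the toy search space (`OPS`, `ARCH_LEN`), the `toy_fitness` function standing in for training and validating a network, and all function names are hypothetical and are not the NASNet search space or the authors' code.

```python
import collections
import random

# Hypothetical toy search space: an architecture is a fixed-length list of op indices.
OPS = ["avg_pool", "max_pool", "conv", "sep_conv", "identity"]
ARCH_LEN = 8


def random_architecture(rng):
    """Sample an architecture uniformly at random from the toy space."""
    return [rng.randrange(len(OPS)) for _ in range(ARCH_LEN)]


def mutate(arch, rng):
    """Return a copy of arch with exactly one position set to a different op."""
    child = list(arch)
    pos = rng.randrange(len(child))
    choices = [op for op in range(len(OPS)) if op != child[pos]]
    child[pos] = rng.choice(choices)
    return child


def toy_fitness(arch):
    """Stand-in for 'train and validate': counts slots that hold sep_conv."""
    return sum(1 for op in arch if OPS[op] == "sep_conv")


def regularized_evolution(cycles, population_size, sample_size, seed=0):
    """Run `cycles` evolution steps after filling a random initial population."""
    rng = random.Random(seed)
    population = collections.deque()  # oldest individual sits on the left
    history = []                      # every individual ever evaluated

    # Initialize the population with random architectures.
    while len(population) < population_size:
        arch = random_architecture(rng)
        individual = (toy_fitness(arch), arch)
        population.append(individual)
        history.append(individual)

    for _ in range(cycles):
        # Tournament selection: the best of a random sample becomes the parent.
        sample = rng.sample(list(population), sample_size)
        parent = max(sample, key=lambda ind: ind[0])

        child_arch = mutate(parent[1], rng)
        child = (toy_fitness(child_arch), child_arch)
        population.append(child)
        history.append(child)

        # Aging: remove the oldest individual, regardless of its fitness.
        population.popleft()

    best = max(history, key=lambda ind: ind[0])
    return best, population


if __name__ == "__main__":
    best, _ = regularized_evolution(cycles=200, population_size=20, sample_size=5)
    print(best[0], [OPS[op] for op in best[1]])
```

Removing the oldest individual instead of the worst is what distinguishes this from plain tournament selection: an architecture only persists if its descendants keep being selected, so a single lucky evaluation cannot keep it in the population indefinitely.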

Source: Regularized Evolution for Image Classifier Architecture Search

Tasks

| Task | Papers | Share |
|---|---|---|
| Image Classification | 4 | 21.05% |
| Object Detection | 4 | 21.05% |
| Keypoint Detection | 2 | 10.53% |
| Semantic Segmentation | 2 | 10.53% |
| Image Augmentation | 1 | 5.26% |
| Robust Object Detection | 1 | 5.26% |
| Real-Time Object Detection | 1 | 5.26% |
| Fine-Grained Image Classification | 1 | 5.26% |
| Machine Translation | 1 | 5.26% |

Components

| Component | Type |
|---|---|
| Average Pooling | Pooling Operations |
| Convolution | Convolutions |
| Max Pooling | Pooling Operations |
| Softmax | Output Functions |
| Spatially Separable Convolution | Convolutions |