Initialization

T-Fixup

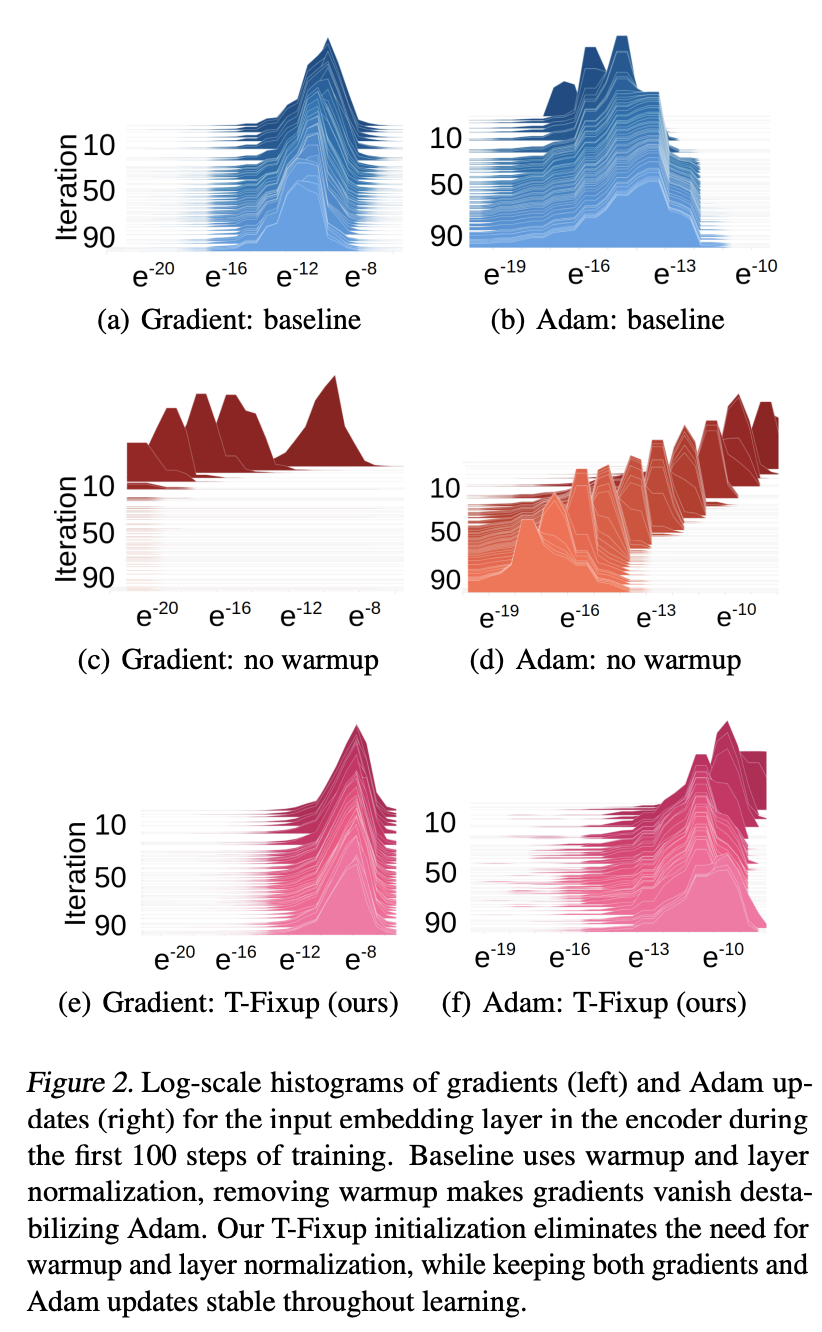

Introduced by Huang et al. in *Improving Transformer Optimization Through Better Initialization*, T-Fixup is an initialization method for Transformers that aims to remove the need for layer normalization and learning rate warmup. The initialization procedure is as follows:

- Apply Xavier initialization for all parameters excluding input embeddings. Use Gaussian initialization $\mathcal{N}\left(0, d^{-\frac{1}{2}}\right)$ for input embeddings where $d$ is the embedding dimension.

- Scale the $\mathbf{v}_{d}$ and $\mathbf{w}_{d}$ matrices in each decoder attention block, the weight matrices in each decoder MLP block, and the input embeddings $\mathbf{x}$ and $\mathbf{y}$ in the encoder and decoder by $(9 N)^{-\frac{1}{4}}$.

- Scale the $\mathbf{v}_{e}$ and $\mathbf{w}_{e}$ matrices in each encoder attention block and the weight matrices in each encoder MLP block by $0.67 N^{-\frac{1}{4}}$.
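
The steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes $N$ is the number of layers in the stack being initialized, reads $d^{-\frac{1}{2}}$ as the standard deviation of the embedding Gaussian, and uses the uniform variant of Xavier initialization. The helper names (`tfixup_scales`, `xavier_uniform`, `embedding_init`, `scale`) are hypothetical.

```python
import math
import random


def tfixup_scales(num_layers: int) -> dict:
    """Scaling factors from the list above, with N = num_layers (assumed)."""
    return {
        "decoder": (9 * num_layers) ** -0.25,   # (9N)^(-1/4)
        "encoder": 0.67 * num_layers ** -0.25,  # 0.67 * N^(-1/4)
    }


def xavier_uniform(fan_in: int, fan_out: int, rng: random.Random) -> list:
    """Xavier uniform init: U(-a, a) with a = sqrt(6 / (fan_in + fan_out))."""
    bound = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-bound, bound) for _ in range(fan_in)]
            for _ in range(fan_out)]


def embedding_init(vocab_size: int, dim: int, rng: random.Random) -> list:
    """Gaussian init for input embeddings; d^(-1/2) is treated as the std (assumption)."""
    std = dim ** -0.5
    return [[rng.gauss(0.0, std) for _ in range(dim)]
            for _ in range(vocab_size)]


def scale(matrix: list, factor: float) -> list:
    """Multiply every entry of a matrix (list of rows) by factor."""
    return [[factor * w for w in row] for row in matrix]


# Example: a model with 6 encoder and 6 decoder layers, embedding size 8.
rng = random.Random(0)
scales = tfixup_scales(6)

w_dec = scale(xavier_uniform(8, 8, rng), scales["decoder"])  # decoder attention/MLP weight
w_enc = scale(xavier_uniform(8, 8, rng), scales["encoder"])  # encoder attention/MLP weight
emb = scale(embedding_init(100, 8, rng), scales["decoder"])  # input embeddings use (9N)^(-1/4)
```

Note that, following the second bullet, the input embeddings of both encoder and decoder take the decoder factor $(9 N)^{-\frac{1}{4}}$ rather than the encoder factor.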

Tasks

| Task | Papers | Share |
|---|---|---|
| Reading Comprehension | 1 | 16.67% |
| Semantic Parsing | 1 | 16.67% |
| Text-To-SQL | 1 | 16.67% |
| Language Modelling | 1 | 16.67% |
| Machine Translation | 1 | 16.67% |
| Translation | 1 | 16.67% |
