75 Languages, 1 Model: Parsing Universal Dependencies Universally

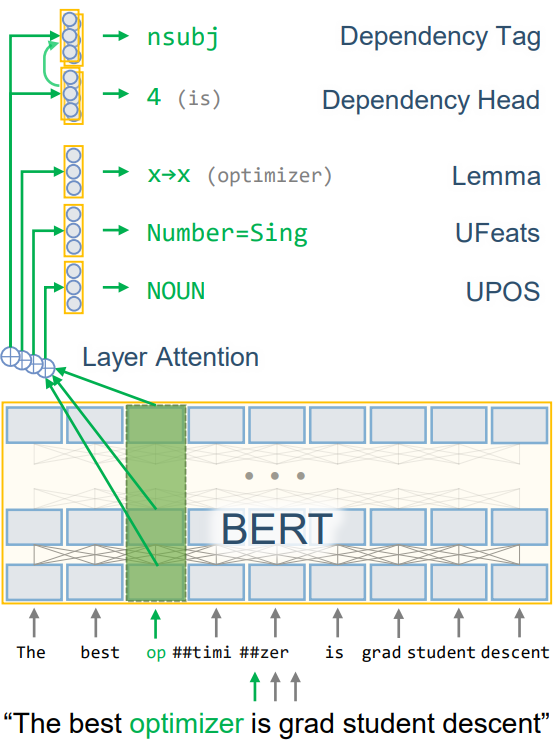

We present UDify, a multilingual multi-task model capable of accurately predicting universal part-of-speech, morphological features, lemmas, and dependency trees simultaneously for all 124 Universal Dependencies treebanks across 75 languages. By leveraging a multilingual BERT self-attention model pretrained on 104 languages, we found that fine-tuning it on all datasets concatenated together with simple softmax classifiers for each UD task can result in state-of-the-art UPOS, UFeats, Lemmas, UAS, and LAS scores, without requiring any recurrent or language-specific components. We evaluate UDify for multilingual learning, showing that low-resource languages benefit the most from cross-linguistic annotations. We also evaluate for zero-shot learning, with results suggesting that multilingual training provides strong UD predictions even for languages that neither UDify nor BERT have ever been trained on. Code for UDify is available at https://github.com/hyperparticle/udify.

PDF Abstract IJCNLP 2019 PDF IJCNLP 2019 Abstract

Universal Dependencies

Universal Dependencies