A Simple Baseline for Low-Budget Active Learning

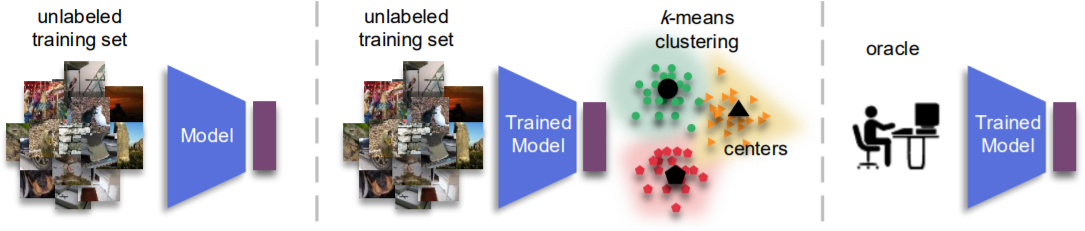

Active learning focuses on choosing a subset of unlabeled data to be labeled. However, most such methods assume that a large subset of the data can be annotated. We are interested in low-budget active learning where only a small subset (e.g., 0.2% of ImageNet) can be annotated. Instead of proposing a new query strategy to iteratively sample batches of unlabeled data given an initial pool, we learn rich features by an off-the-shelf self-supervised learning method only once, and then study the effectiveness of different sampling strategies given a low labeling budget on a variety of datasets including ImageNet. We show that although the state-of-the-art active learning methods work well given a large labeling budget, a simple K-means clustering algorithm can outperform them on low budgets. We believe this method can be used as a simple baseline for low-budget active learning on image classification. Code is available at: https://github.com/UCDvision/low-budget-al
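To make the K-means baseline concrete, here is a minimal sketch of one way to turn clustering into a labeling query: cluster the pre-computed features into as many clusters as the budget allows, then request a label for the sample nearest each centroid. The nearest-to-centroid rule, the function name `kmeans_select`, its parameters, and the random stand-in features are all assumptions made for illustration. The abstract does not spell out the selection rule, and the linked repository may implement it differently.

```python
import numpy as np


def kmeans_select(features, budget, n_iter=20, seed=0):
    """Pick `budget` sample indices, one per K-means cluster.

    Hypothetical helper: `features` is an (n_samples, dim) array that is
    assumed to come from a frozen self-supervised encoder.
    """
    features = np.asarray(features, dtype=np.float64)
    n = features.shape[0]
    if not 0 < budget <= n:
        raise ValueError("budget must be between 1 and the number of samples")

    rng = np.random.default_rng(seed)
    # Initialise centroids from randomly chosen distinct samples.
    centers = features[rng.choice(n, size=budget, replace=False)].copy()

    for _ in range(n_iter):
        # Squared Euclidean distance from every sample to every centroid.
        dists = ((features[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        assign = dists.argmin(axis=1)
        for k in range(budget):
            members = features[assign == k]
            if len(members) > 0:  # leave an empty cluster's centroid in place
                centers[k] = members.mean(axis=0)

    # For each centroid, take the closest sample that is not already selected.
    dists = ((features[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    taken = np.zeros(n, dtype=bool)
    selected = []
    for k in range(budget):
        d = dists[:, k].copy()
        d[taken] = np.inf
        idx = int(d.argmin())
        taken[idx] = True
        selected.append(idx)
    return np.array(selected)


# Stand-in for encoder outputs: 200 random 8-dimensional feature vectors.
demo_features = np.random.default_rng(1).normal(size=(200, 8))
to_label = kmeans_select(demo_features, budget=10)
print(sorted(to_label.tolist()))
```

The dense distance matrix keeps the sketch short but only suits small inputs. At the scale the abstract mentions (0.2% of ImageNet is roughly 2,600 images, assuming the approximately 1.28M-image ImageNet-1k training set), a mini-batch or GPU K-means implementation would be the practical choice.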


CIFAR-10

CIFAR-100

Oxford 102 Flower

DTD