Acquiring and Modelling Abstract Commonsense Knowledge via Conceptualization

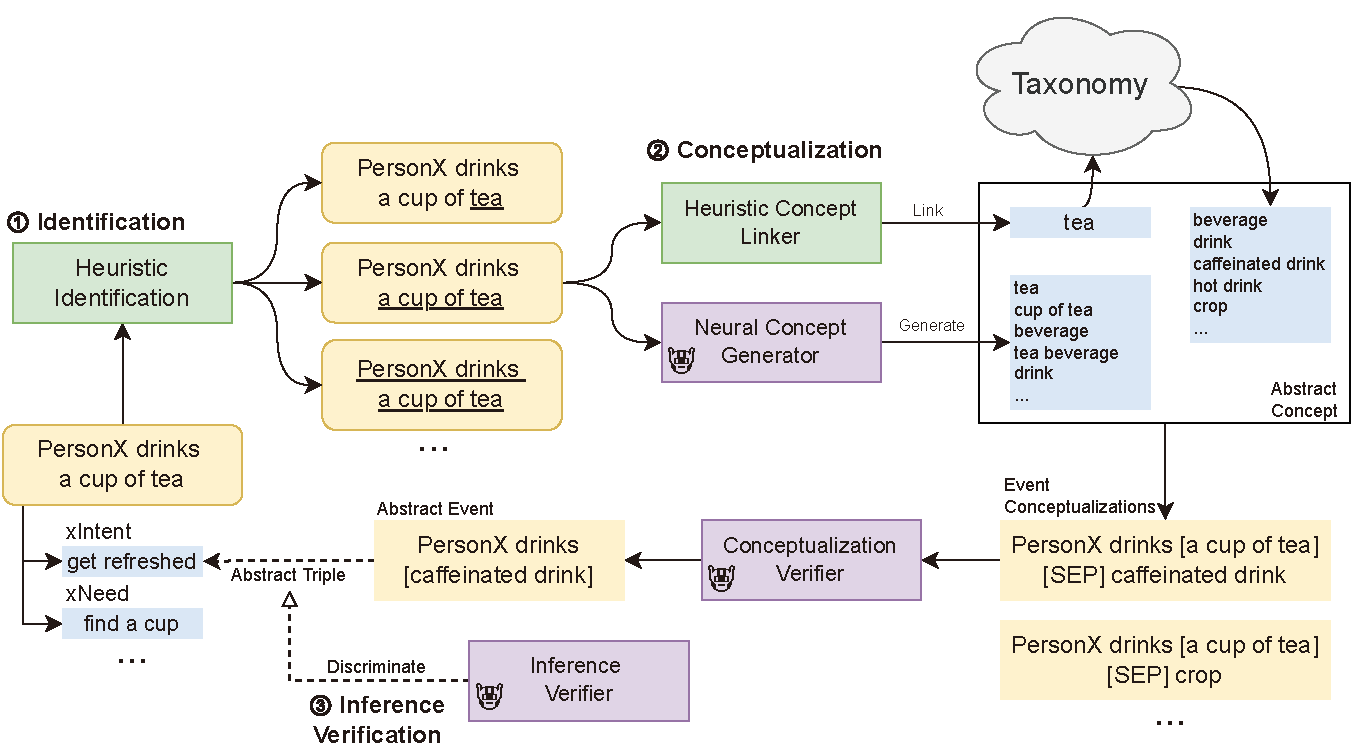

Conceptualization, or viewing entities and situations as instances of abstract concepts in mind and making inferences based on them, is a vital component of human intelligence for commonsense reasoning. Recent artificial intelligence has made progress in acquiring and modelling commonsense, owing to large neural language models and commonsense knowledge graphs (CKGs). However, conceptualization has yet to be thoroughly introduced, which leaves current approaches unable to cover knowledge about the countless diverse entities and situations in the real world. To address this problem, we thoroughly study the possible role of conceptualization in commonsense reasoning and formulate a framework that replicates human conceptual induction by acquiring abstract knowledge about abstract concepts. Aided by the taxonomy Probase, we develop tools for contextualized conceptualization on ATOMIC, a large-scale human-annotated CKG. We annotate a dataset for the validity of conceptualizations for ATOMIC at both the event and triple levels, develop a series of heuristic rules based on linguistic features, and train a set of neural models to generate and verify abstract knowledge. Based on these components, we build a pipeline to acquire abstract knowledge. A large abstract CKG upon ATOMIC is then induced, ready to be instantiated to infer about unseen entities or situations. Furthermore, experiments show that directly augmenting data with abstract triples helps commonsense modelling.
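The conceptualize-then-instantiate idea can be illustrated with a minimal sketch under heavy simplifying assumptions. A tiny hand-written dictionary (`TOY_TAXONOMY`, hypothetical) stands in for Probase. Conceptualization is reduced to a single-token substitution in the head event, not the contextualized, span-level procedure described above. The verification step (heuristic rules and trained neural models) is omitted entirely. The names `Triple`, `conceptualize`, and `instantiate` and the example triple are illustrative and do not come from the paper.

```python
import re
from dataclasses import dataclass

# Hypothetical toy taxonomy standing in for Probase: instance -> candidate concepts.
TOY_TAXONOMY = {
    "coffee": ["beverage", "drink"],
    "tea": ["beverage", "drink"],
    "guitar": ["instrument"],
}

# Matches a bracketed concept slot such as "[beverage]".
SLOT = re.compile(r"\[(\w+)\]")


@dataclass(frozen=True)
class Triple:
    head: str
    relation: str
    tail: str


def conceptualize(triple, taxonomy):
    """Abstract the head event: swap each known instance token for each of its concepts.

    Simplification: no verification of the resulting abstract triples is performed.
    """
    tokens = triple.head.split()
    abstract = []
    for i, tok in enumerate(tokens):
        for concept in taxonomy.get(tok, []):
            head = " ".join(tokens[:i] + [f"[{concept}]"] + tokens[i + 1:])
            abstract.append(Triple(head, triple.relation, triple.tail))
    return abstract


def instantiate(abstract, taxonomy, instance):
    """Fill the concept slot with an unseen instance if the taxonomy links them, else None."""
    match = SLOT.search(abstract.head)
    if match is None or match.group(1) not in taxonomy.get(instance, []):
        return None
    head = abstract.head[: match.start()] + instance + abstract.head[match.end():]
    return Triple(head, abstract.relation, abstract.tail)


if __name__ == "__main__":
    # Illustrative ATOMIC-style triple (made up for this sketch).
    seed = Triple("PersonX drinks coffee", "xEffect", "feels awake")
    abstract = conceptualize(seed, TOY_TAXONOMY)
    print([t.head for t in abstract])
    print(instantiate(abstract[0], TOY_TAXONOMY, "tea"))
    print(instantiate(abstract[0], TOY_TAXONOMY, "guitar"))
```

Here the abstract triple about `[beverage]` transfers to `tea`, an instance never seen with this event, but not to `guitar`, which the toy taxonomy does not link to that concept. A real pipeline would filter candidate abstractions with a verifier before instantiating them.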

GLUE

ConceptNet

YAGO

ATOMIC

NomBank