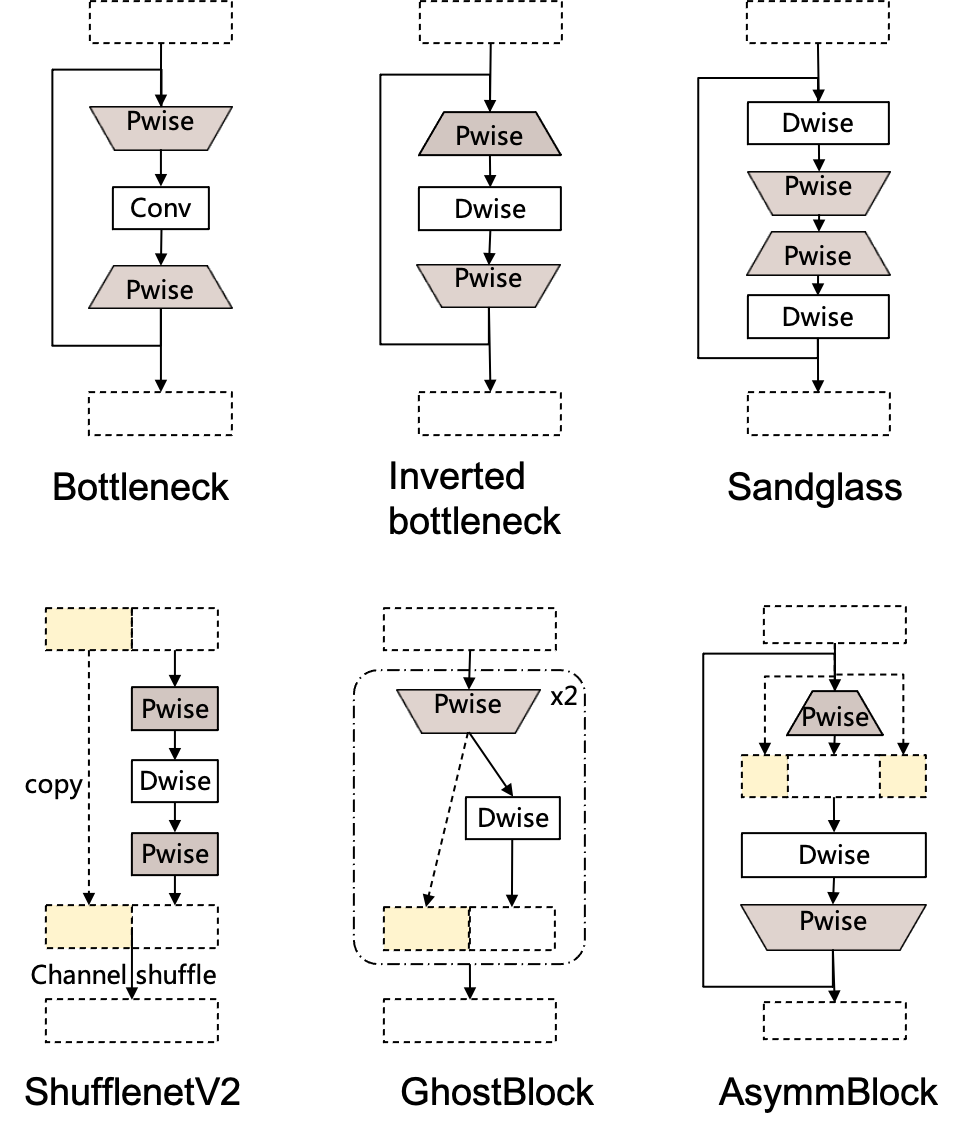

AsymmNet: Towards ultralight convolution neural networks using asymmetrical bottlenecks

Deep convolutional neural networks (CNNs) have achieved astonishing results in a large variety of applications. However, using these models on mobile or embedded devices is difficult due to limited memory and computation resources. Recently, the inverted residual block has become the dominant solution for the architecture design of compact CNNs. In this work, we comprehensively investigate the existing design concepts and rethink the functional characteristics of the two pointwise convolutions in the inverted residuals. We propose a novel design, called asymmetrical bottlenecks. Precisely, we adjust the first pointwise convolution dimension, enrich the information flow by feature reuse, and migrate the saved computations to the second pointwise convolution. By doing so, we can further improve the accuracy without increasing the computation overhead. The asymmetrical bottlenecks can be adopted as a drop-in replacement for existing CNN blocks. We can thus create AsymmNet by simply stacking those blocks according to proper depth and width conditions. Extensive experiments demonstrate that our proposed block design is more beneficial than the original inverted residual bottlenecks for mobile networks, and is especially useful for ultralight CNNs within the regime of <220M MAdds. Code is available at https://github.com/Spark001/AsymmNet
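To make the computation trade-off concrete, the following is a rough sketch of how multiply-adds (MAdds) might be counted for a standard inverted residual block versus a block whose first pointwise convolution is narrowed. The reduction parameter `r`, the function names, and the choice to fill the missing channels with `r` copies of the input are illustrative assumptions, not details taken from the abstract; the sketch also stops short of reinvesting the saved MAdds in the second pointwise convolution.

```python
def pointwise_madds(h, w, c_in, c_out):
    """MAdds of a 1x1 convolution on an h x w feature map."""
    return h * w * c_in * c_out


def depthwise_madds(h, w, channels, kernel=3):
    """MAdds of a k x k depthwise convolution (stride 1, same padding)."""
    return h * w * channels * kernel * kernel


def inverted_residual_madds(h, w, c, t, kernel=3):
    """Standard inverted residual: expand c -> t*c, depthwise, project t*c -> c."""
    expanded = t * c
    return (pointwise_madds(h, w, c, expanded)
            + depthwise_madds(h, w, expanded, kernel)
            + pointwise_madds(h, w, expanded, c))


def narrowed_first_pw_madds(h, w, c, t, r, kernel=3):
    """Hypothetical asymmetric variant (assumption, not the paper's exact spec).

    The first pointwise convolution learns only (t - r) * c channels; the
    remaining r * c channels are filled by reusing (concatenating) the input,
    so the depthwise and second pointwise layers still see t * c channels.
    """
    if not 0 <= r < t:
        raise ValueError("r must satisfy 0 <= r < t")
    learned = (t - r) * c
    expanded = learned + r * c  # feature reuse restores the full width
    return (pointwise_madds(h, w, c, learned)
            + depthwise_madds(h, w, expanded, kernel)
            + pointwise_madds(h, w, expanded, c))


def saved_madds(h, w, c, t, r, kernel=3):
    """MAdds freed by narrowing the first pointwise convolution."""
    return (inverted_residual_madds(h, w, c, t, kernel)
            - narrowed_first_pw_madds(h, w, c, t, r, kernel))
```

Under these assumptions the saving equals `h * w * c * (r * c)`, which is the budget that could then be spent elsewhere in the block, for example on a wider second pointwise convolution.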

ImageNet

MS COCO

HMDB51

VGGFace2
