CogVLM: Visual Expert for Pretrained Language Models

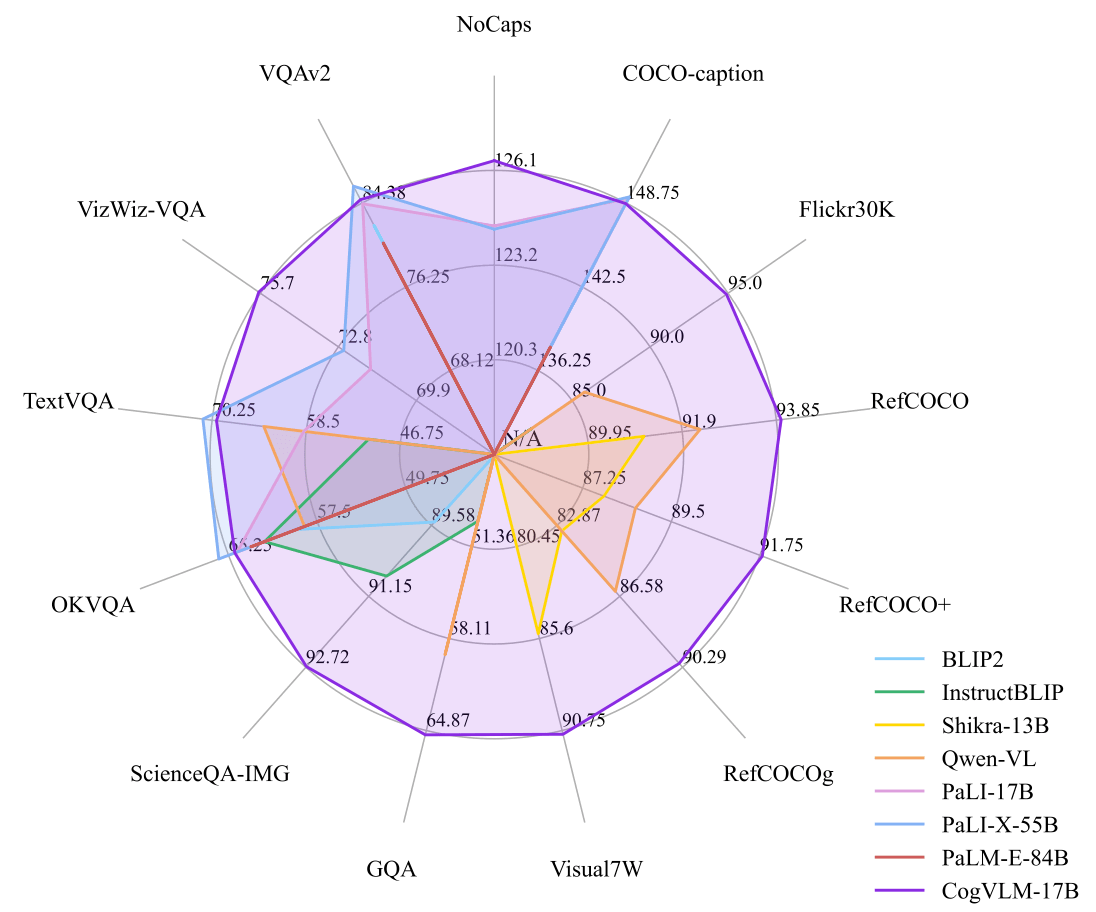

We introduce CogVLM, a powerful open-source visual language foundation model. Unlike the popular shallow alignment method, which maps image features into the input space of the language model, CogVLM bridges the gap between the frozen pretrained language model and the image encoder with a trainable visual expert module in the attention and FFN layers. As a result, CogVLM enables deep fusion of vision and language features without sacrificing any performance on NLP tasks. CogVLM-17B achieves state-of-the-art performance on 10 classic cross-modal benchmarks, including NoCaps, Flickr30k captioning, RefCOCO, RefCOCO+, RefCOCOg, Visual7W, GQA, ScienceQA, VizWiz VQA and TDIUC, and ranks second on VQAv2, OKVQA, TextVQA, COCO captioning, etc., surpassing or matching PaLI-X 55B. Code and checkpoints are available at https://github.com/THUDM/CogVLM.
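The idea of a visual expert inside the attention layer can be illustrated with a small sketch. It is not the released implementation. It assumes the expert is a second set of QKV projection weights applied only at image-token positions, while text tokens keep the frozen language-model weights; the abstract does not spell out this detail. All names here (`route_linear`, `expert_attention`, `w_qkv_text`, `w_qkv_image`) are hypothetical, and the sketch uses a single head with no causal mask to stay short.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def route_linear(hidden, is_image, w_text, w_image):
    """Project each token with the weights that match its modality.

    w_text stands in for the frozen language-model weights and w_image for
    the trainable visual-expert weights (an assumption of this sketch).
    """
    return [
        matmul([tok], w_image if img else w_text)[0]
        for tok, img in zip(hidden, is_image)
    ]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def expert_attention(hidden, is_image, w_qkv_text, w_qkv_image):
    """Single-head attention whose Q/K/V projections are modality-routed."""
    d = len(hidden[0])
    qkv = route_linear(hidden, is_image, w_qkv_text, w_qkv_image)
    q = [row[:d] for row in qkv]
    k = [row[d:2 * d] for row in qkv]
    v = [row[2 * d:] for row in qkv]
    k_t = [list(col) for col in zip(*k)]
    # Image and text tokens attend to each other in one shared sequence.
    scores = [[s / math.sqrt(d) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v)

# Toy input: three image tokens followed by two text tokens.
random.seed(0)
d_model, seq_len = 4, 5
hidden = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(seq_len)]
is_image = [True, True, True, False, False]
w_qkv_text = [[random.gauss(0, 1) for _ in range(3 * d_model)] for _ in range(d_model)]
w_qkv_image = [[random.gauss(0, 1) for _ in range(3 * d_model)] for _ in range(d_model)]
out = expert_attention(hidden, is_image, w_qkv_text, w_qkv_image)
```

With an all-text mask, `route_linear` reduces to the plain language-model projection, which is one way to read the claim that NLP performance is not sacrificed. Under the same assumption, the FFN layers could be routed in the same way.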

MS COCO

Visual Question Answering

MMLU

RefCOCO

OK-VQA

TextVQA

NoCaps

ScienceQA

Visual7W

MMBench

MM-Vet

SEED-Bench

MathVista

InfiMM-Eval
