DeepGRU: Deep Gesture Recognition Utility

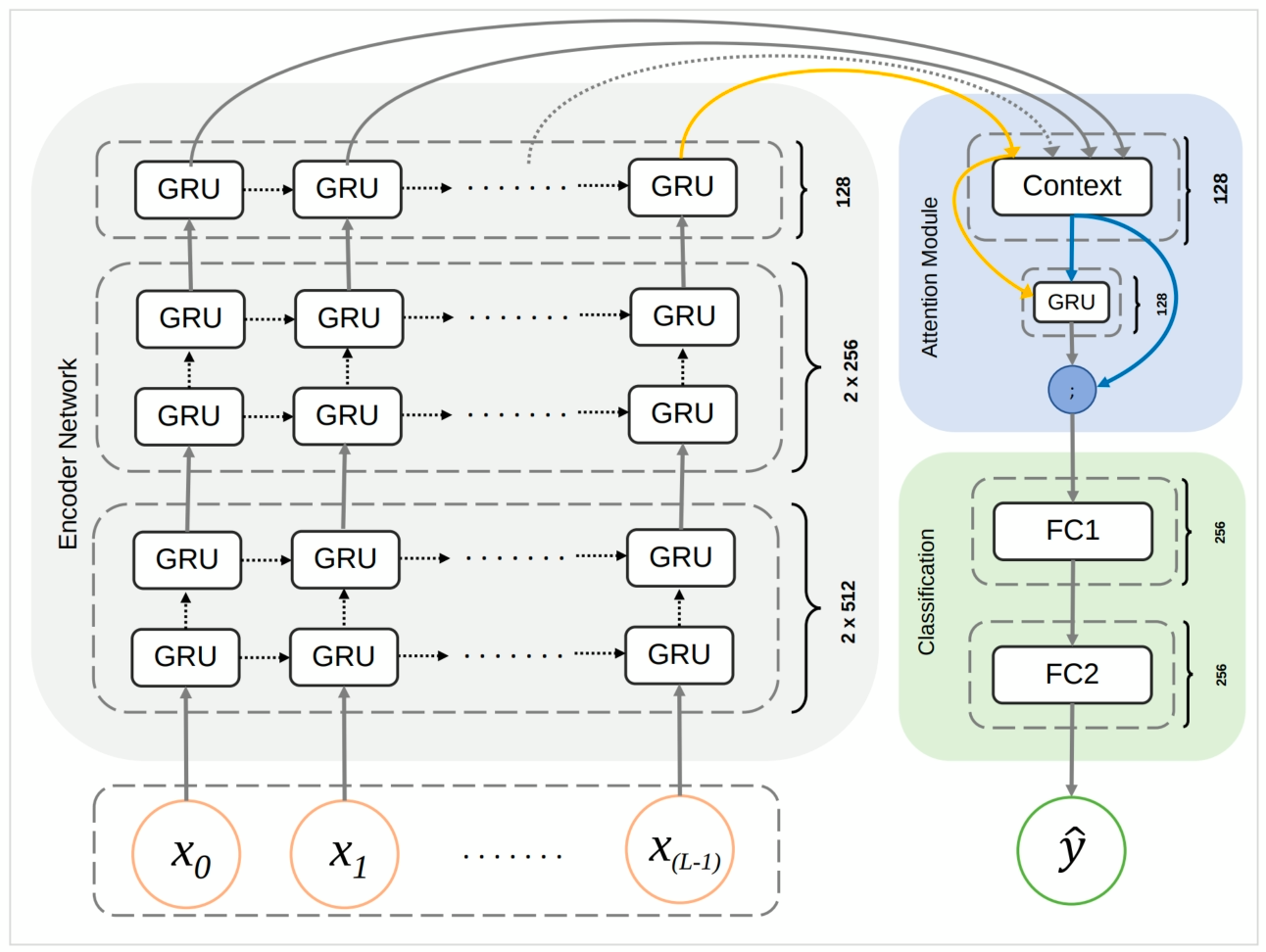

We propose DeepGRU, a novel end-to-end deep network model for gesture and action recognition that is informed by recent developments in deep learning and is both streamlined and device-agnostic. DeepGRU, which uses only raw skeleton, pose, or vector data, is quickly understood, implemented, and trained, yet achieves state-of-the-art results on challenging datasets. At the heart of our method lies a set of stacked gated recurrent units (GRU), two fully-connected layers, and a novel global attention model. We evaluate our method on seven publicly available datasets that contain varying numbers of samples and span a broad range of interactions (full-body, multi-actor, hand gestures, etc.). In all but one case, we outperform the state-of-the-art pose-based methods. For instance, we achieve recognition accuracies of 84.9% and 92.3% on the cross-subject and cross-view tests of the NTU RGB+D dataset, respectively, and 100% recognition accuracy on the UT-Kinect dataset. While DeepGRU works well on large datasets with many training samples, we show that even without a large amount of training data, and with as few as four samples per class, DeepGRU can beat traditional methods specifically designed for small training sets. Lastly, we demonstrate that even without powerful hardware, and using only the CPU, our method can still be trained in under 10 minutes on small-scale datasets, making it an enticing choice for rapid application prototyping and development.
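To make the pipeline named in the abstract concrete (stacked GRUs, a global attention step, then two fully-connected layers), here is a minimal forward-pass sketch in plain Python. It is an illustration under stated assumptions, not the authors' implementation. The layer sizes, the bias-free GRU formulation, the dot-product attention (a generic stand-in for the paper's novel attention model), and all function and class names are hypothetical. The weights are random and untrained, so the output probabilities carry no meaning.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng):
    """Small random weights; a real model would learn these."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class GRULayer:
    """One GRU layer in a standard formulation (biases omitted for brevity)."""

    def __init__(self, n_in, n_hid, rng):
        self.n_hid = n_hid
        # Input (W) and recurrent (U) weights for the update (z),
        # reset (r), and candidate (n) computations.
        self.W = {g: rand_matrix(n_hid, n_in, rng) for g in "zrn"}
        self.U = {g: rand_matrix(n_hid, n_hid, rng) for g in "zrn"}

    def forward(self, seq):
        """Return the hidden state at every time step."""
        h = [0.0] * self.n_hid
        outputs = []
        for x in seq:
            z = [sigmoid(a + b) for a, b in
                 zip(matvec(self.W["z"], x), matvec(self.U["z"], h))]
            r = [sigmoid(a + b) for a, b in
                 zip(matvec(self.W["r"], x), matvec(self.U["r"], h))]
            rh = [ri * hi for ri, hi in zip(r, h)]
            n = [math.tanh(a + b) for a, b in
                 zip(matvec(self.W["n"], x), matvec(self.U["n"], rh))]
            h = [(1 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]
            outputs.append(h)
        return outputs


def global_attention(states):
    """ASSUMPTION: plain dot-product attention of the last state over all
    states. This is not the attention model proposed in the paper."""
    query = states[-1]
    weights = softmax([sum(q * s for q, s in zip(query, h)) for h in states])
    context = [sum(w * h[i] for w, h in zip(weights, states))
               for i in range(len(query))]
    return context, weights


def classify(seq, layers, fc1, fc2):
    """Stacked GRUs -> attention -> two fully-connected layers -> softmax."""
    states = seq
    for layer in layers:
        states = layer.forward(states)
    context, weights = global_attention(states)
    features = context + states[-1]  # concatenate context and last state
    hidden = [max(0.0, v) for v in matvec(fc1, features)]  # ReLU
    return softmax(matvec(fc2, hidden)), weights


rng = random.Random(0)
n_features, n_classes = 6, 4  # toy sizes, e.g. two 3-D joints per frame
layers = [GRULayer(n_features, 16, rng), GRULayer(16, 8, rng)]
fc1 = rand_matrix(8, 16, rng)  # input is context (8) + last state (8)
fc2 = rand_matrix(n_classes, 8, rng)

# A random 10-frame "pose sequence" standing in for raw skeleton data.
sequence = [[rng.uniform(-1, 1) for _ in range(n_features)] for _ in range(10)]
probs, attn = classify(sequence, layers, fc1, fc2)
print(len(probs), len(attn))
```

The attention weights form a distribution over the input frames, so the classifier sees a weighted summary of the whole sequence rather than only the final hidden state. In practice a deep learning framework would replace these hand-written loops and supply training.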


| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Benchmark |
|---|---|---|---|---|---|---|
| Skeleton Based Action Recognition | SBU | DeepGRU | Accuracy | 95.7% | # 6 | |

NTU RGB+D

SBU

UT-Kinect
