End-to-end Trainable Deep Neural Network for Robotic Grasp Detection and Semantic Segmentation from RGB

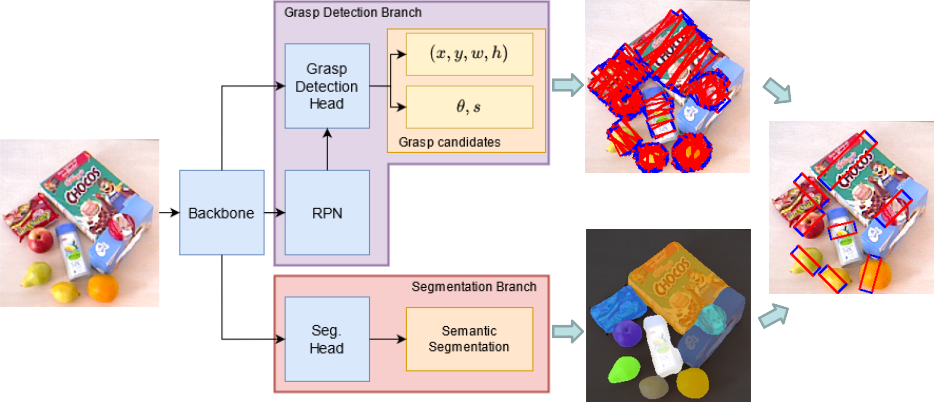

In this work, we introduce a novel, end-to-end trainable CNN-based architecture that delivers high-quality results for both grasp detection suitable for a parallel-plate gripper and semantic segmentation. Building on this, we propose a novel refinement module that takes advantage of the previously calculated grasp detection and semantic segmentation to further increase grasp detection accuracy. Our proposed network delivers state-of-the-art accuracy on two popular grasp datasets, namely Cornell and Jacquard. As an additional contribution, we provide a novel dataset extension for the OCID dataset, making it possible to evaluate grasp detection in highly challenging scenes. Using this dataset, we show that semantic segmentation can additionally be used to assign grasp candidates to object classes, which can be used to pick specific objects in the scene.
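The idea of assigning grasp candidates to object classes can be illustrated with a minimal sketch. It is not the method from the paper: it assumes each grasp candidate is reduced to a center pixel `(x, y)` and that the segmentation output is a 2D grid of integer class ids, with `0` as background. The function names `assign_grasps_to_classes` and `grasps_for_class` are hypothetical and chosen only for this illustration.

```python
def assign_grasps_to_classes(grasp_centers, seg_mask):
    """Label each grasp with the class id found at its center pixel.

    grasp_centers: list of (x, y) pixel coordinates (assumed representation).
    seg_mask: 2D list indexed as seg_mask[row][col], i.e. seg_mask[y][x].
    """
    return [seg_mask[y][x] for (x, y) in grasp_centers]


def grasps_for_class(grasp_centers, seg_mask, class_id):
    """Keep only the grasps whose center lies on the requested class."""
    labels = assign_grasps_to_classes(grasp_centers, seg_mask)
    return [g for g, c in zip(grasp_centers, labels) if c == class_id]


# Toy 4x6 segmentation map: class 1 in the top-left, class 2 in the bottom-right.
seg_mask = [
    [1, 1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0, 0],
    [0, 0, 0, 2, 2, 2],
    [0, 0, 0, 2, 2, 2],
]
grasps = [(1, 0), (4, 3), (5, 0)]  # (x, y) centers of three candidates

labels = assign_grasps_to_classes(grasps, seg_mask)
targets = grasps_for_class(grasps, seg_mask, 2)
```

Here `labels` holds one class id per candidate, and `targets` contains only the candidates that lie on class 2, which is the kind of filtering needed to pick a specific object. A practical system would likely use the full grasp rectangle rather than a single pixel, for example by taking a majority vote over the covered area.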


OCID
