EndoMapper dataset of complete calibrated endoscopy procedures

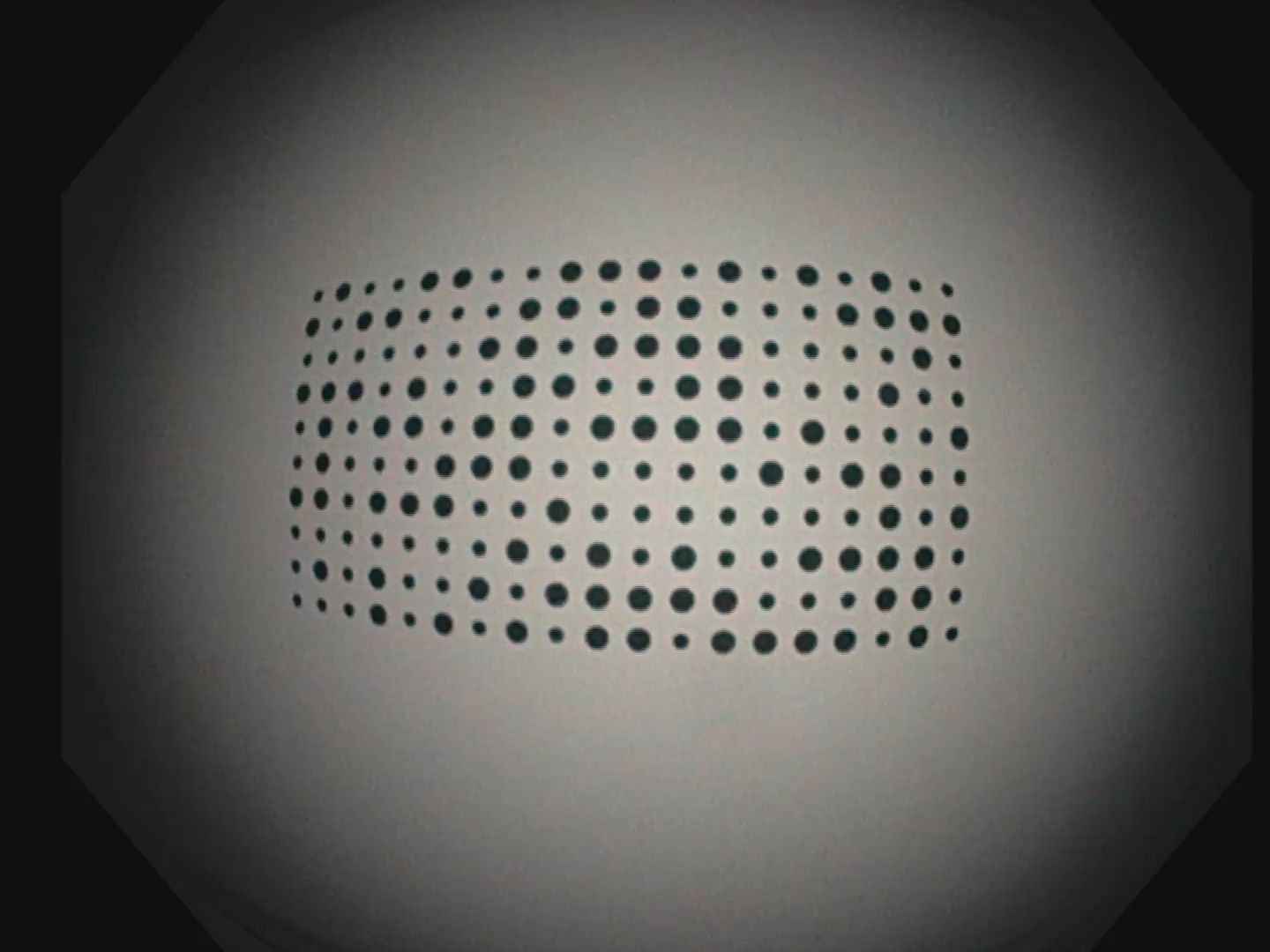

Computer-assisted systems are becoming broadly used in medicine. In endoscopy, most research focuses on the automatic detection of polyps or other pathologies, but localization and navigation of the endoscope are performed entirely manually by physicians. To broaden this research and bring spatial Artificial Intelligence to endoscopies, data from complete procedures is needed. This paper introduces the Endomapper dataset, the first collection of complete endoscopy sequences acquired during regular medical practice, making secondary use of medical data. Its main purpose is to facilitate the development and evaluation of Visual Simultaneous Localization and Mapping (VSLAM) methods on real endoscopy data. The dataset contains more than 24 hours of video. It is the first endoscopic dataset that includes endoscope calibration as well as the original calibration videos. Metadata and annotations associated with the dataset include anatomical landmarks, procedure labeling, segmentations, reconstructions, simulated sequences with ground truth, and same-patient procedures. The software used in this paper is publicly available.

Datasets

Introduced in the Paper:

Endomapper