Generalizing MLPs With Dropouts, Batch Normalization, and Skip Connections

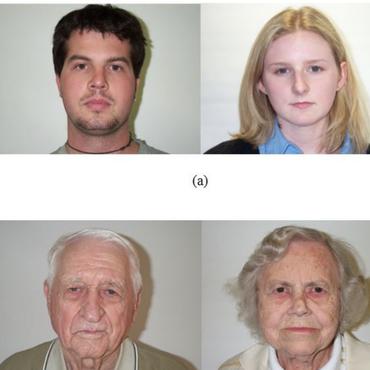

A multilayer perceptron (MLP) typically consists of multiple fully connected layers with nonlinear activation functions. Several approaches have been proposed to improve MLPs (e.g., faster convergence or a better convergence limit), but the research lacks structured ways to test them. We test different MLP architectures through experiments on age and gender datasets. We show empirically that whitening the inputs before every linear layer and adding skip connections yields better performance in our proposed MLP architecture. Because the whitening process includes dropout, the architecture can also be used to approximate Bayesian inference. We have open-sourced our code and released models and Docker images at https://github.com/tae898/age-gender/
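To make the architecture described in the abstract concrete, here is a minimal NumPy sketch, not the authors' implementation. It assumes that "whitening" is approximated by per-feature batch standardization followed by dropout, that each block computes `x + ReLU(Linear(whiten(x)))`, and that Bayesian inference is approximated by averaging several stochastic forward passes with dropout left on. All function names, layer sizes, and the dropout rate are hypothetical; the released code at the URL above is the reference.

```python
import numpy as np

rng = np.random.default_rng(0)

def whiten(x, drop_p, use_dropout, eps=1e-5):
    """Approximate whitening (assumed form): standardize features, then dropout."""
    # Simplification: batch statistics are used in every mode; a real batch
    # normalization layer would switch to running statistics at inference.
    x = (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)
    if use_dropout and drop_p > 0.0:
        keep = rng.random(x.shape) >= drop_p
        x = x * keep / (1.0 - drop_p)  # inverted dropout preserves the expectation
    return x

def residual_block(x, W, b, drop_p, use_dropout):
    """Skip connection around whiten -> linear -> ReLU; W must be square."""
    h = whiten(x, drop_p, use_dropout) @ W + b
    return x + np.maximum(h, 0.0)

def mlp_forward(x, params, drop_p=0.2, use_dropout=True):
    """Run all residual blocks, then a whitened linear head with softmax."""
    for W, b in params["blocks"]:
        x = residual_block(x, W, b, drop_p, use_dropout)
    W_out, b_out = params["head"]
    logits = whiten(x, drop_p, use_dropout) @ W_out + b_out
    z = np.exp(logits - logits.max(axis=1, keepdims=True))
    return z / z.sum(axis=1, keepdims=True)

def mc_dropout_predict(x, params, n_samples=30, drop_p=0.2):
    """Average stochastic passes; the spread is a rough uncertainty estimate."""
    samples = np.stack(
        [mlp_forward(x, params, drop_p, use_dropout=True) for _ in range(n_samples)]
    )
    return samples.mean(axis=0), samples.std(axis=0)

def init_params(dim, n_blocks, n_classes):
    """Random, untrained weights, for illustration only."""
    blocks = [
        (rng.normal(0.0, np.sqrt(2.0 / dim), (dim, dim)), np.zeros(dim))
        for _ in range(n_blocks)
    ]
    head = (rng.normal(0.0, np.sqrt(1.0 / dim), (dim, n_classes)), np.zeros(n_classes))
    return {"blocks": blocks, "head": head}

params = init_params(dim=16, n_blocks=2, n_classes=2)
x = rng.normal(size=(8, 16))  # stand-in for 8 feature vectors
mean_probs, std_probs = mc_dropout_predict(x, params)
```

Here `mean_probs` holds the averaged class probabilities for each input and `std_probs` their spread across the sampled dropout masks. Calling `mlp_forward(x, params, use_dropout=False)` gives a single deterministic pass instead.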


Datasets

Results from the Paper

Ranked #4 on Age And Gender Classification on Adience Gender (using extra training data)

Adience