Implicit Autoencoder for Point-Cloud Self-Supervised Representation Learning

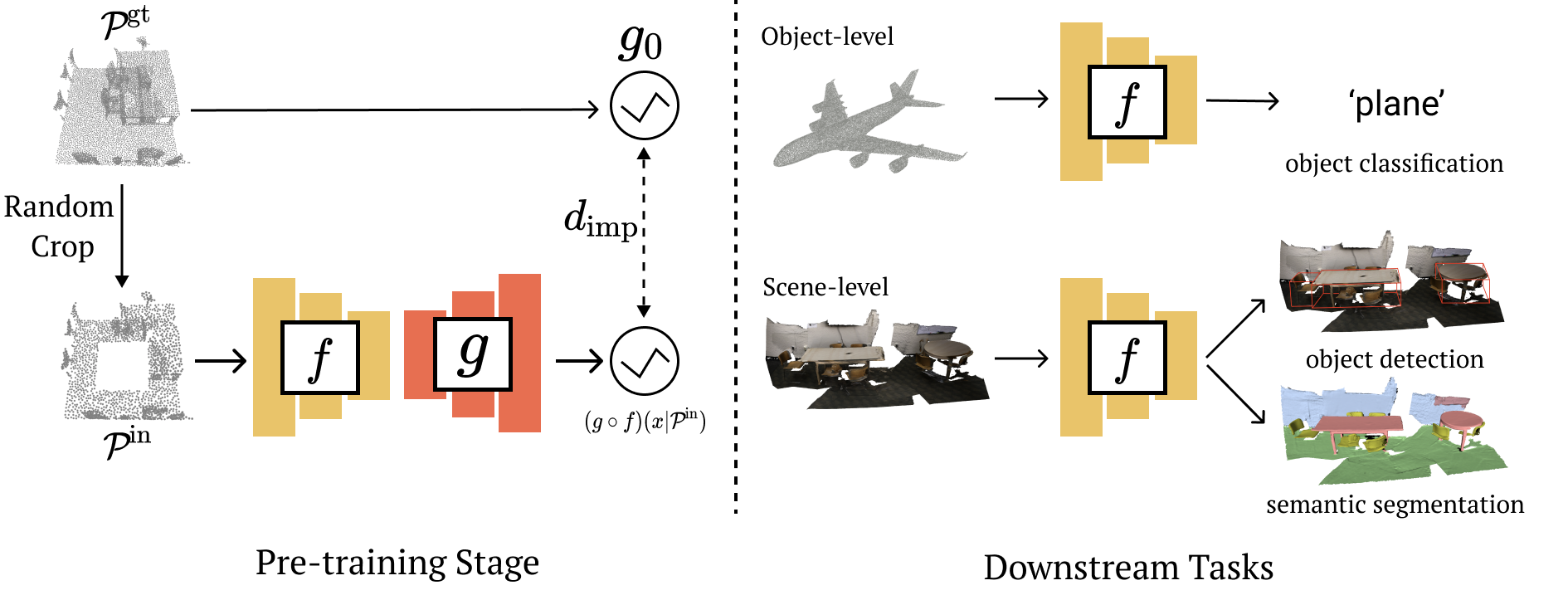

This paper advocates the use of implicit surface representation in autoencoder-based self-supervised 3D representation learning. The most popular and accessible 3D representation, i.e., point clouds, involves discrete samples of the underlying continuous 3D surface. This discretization process introduces sampling variations on the 3D shape, making it challenging to develop transferable knowledge of the true 3D geometry. In the standard autoencoding paradigm, the encoder is compelled to encode not only the 3D geometry but also information on the specific discrete sampling of the 3D shape into the latent code. This is because the point cloud reconstructed by the decoder is considered unacceptable unless there is a perfect mapping between the original and the reconstructed point clouds. This paper introduces the Implicit AutoEncoder (IAE), a simple yet effective method that addresses the sampling variation issue by replacing the commonly-used point-cloud decoder with an implicit decoder. The implicit decoder reconstructs a continuous representation of the 3D shape, independent of the imperfections in the discrete samples. Extensive experiments demonstrate that the proposed IAE achieves state-of-the-art performance across various self-supervised learning benchmarks.
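The sampling-variation argument above can be illustrated with a small, self-contained sketch. It is not the paper's implementation. It assumes a unit sphere as the underlying surface and a signed distance function as the implicit representation, both chosen only for illustration, and the helper names (`sample_sphere`, `chamfer_distance`, `sphere_sdf`) are hypothetical. Two independent samplings of the same sphere give a non-zero Chamfer distance, so a point-cloud decoder is penalized for sampling differences alone, whereas the implicit target at any query point depends only on the surface.

```python
import math
import random


def sample_sphere(n, rng):
    """Draw n points uniformly from the surface of the unit sphere."""
    points = []
    for _ in range(n):
        # Normalizing an isotropic Gaussian vector yields a uniform direction.
        x, y, z = rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)
        norm = math.sqrt(x * x + y * y + z * z)
        points.append((x / norm, y / norm, z / norm))
    return points


def chamfer_distance(a, b):
    """Symmetric Chamfer distance: mean nearest-neighbor distance, both ways."""
    def one_way(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return one_way(a, b) + one_way(b, a)


def sphere_sdf(query):
    """Signed distance from a query point to the unit sphere (negative inside)."""
    return math.sqrt(sum(c * c for c in query)) - 1.0


rng = random.Random(0)

# Two discrete samplings of the same continuous surface (illustrative assumption).
cloud_a = sample_sphere(200, rng)
cloud_b = sample_sphere(200, rng)

# Point-cloud target: the two samplings disagree although the shape is identical.
cd = chamfer_distance(cloud_a, cloud_b)
print("Chamfer distance between two samplings:", cd)

# Implicit target: supervision at each query point is defined by the surface,
# not by which discrete sample happened to be drawn.
queries = [tuple(rng.uniform(-1.5, 1.5) for _ in range(3)) for _ in range(5)]
targets = [sphere_sdf(q) for q in queries]
print("Implicit targets at query points:", targets)
```

In an actual autoencoder the implicit decoder would be a learned network that takes the latent code together with a query point and regresses such a value. The closed-form `sphere_sdf` here only stands in for the ground-truth implicit function.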

ICCV 2023

Results from the Paper

Ranked #5 on 3D Point Cloud Linear Classification on ModelNet40 (using extra training data)

ShapeNet

ScanNet

ModelNet

SUN RGB-D

S3DIS

ScanObjectNN
