Lane Detection in Low-light Conditions Using an Efficient Data Enhancement: Light Conditions Style Transfer

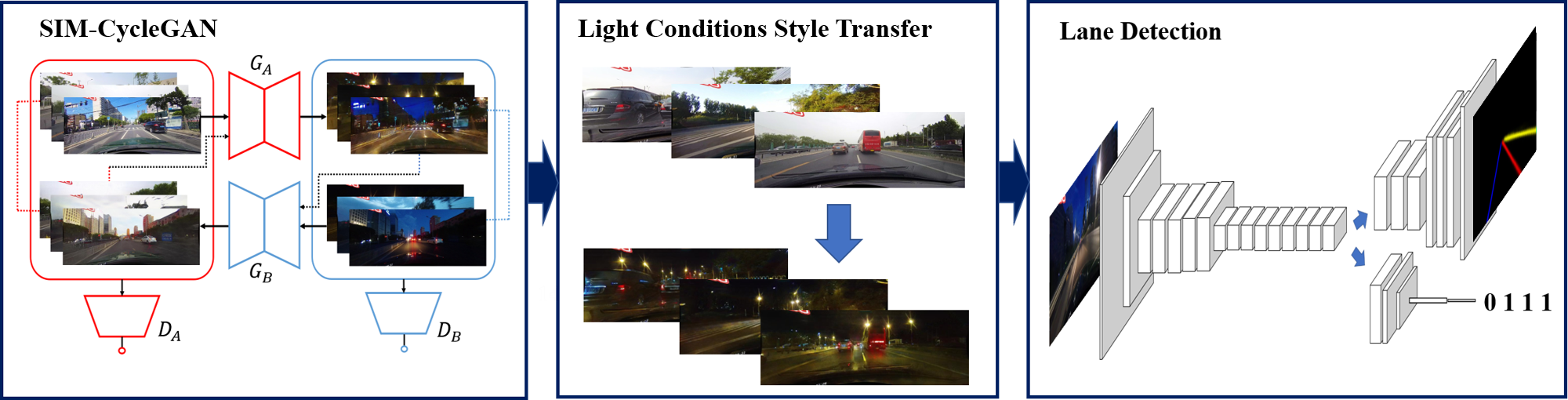

Deep learning techniques are now widely used for lane detection, but their application in low-light conditions remains a challenge. Multi-task learning and contextual-information-based methods have been proposed to address the problem, but they require additional manual annotations or introduce extra inference overhead, respectively. In this paper, we propose a style-transfer-based data enhancement method that uses Generative Adversarial Networks (GANs) to generate images in low-light conditions, which increases the environmental adaptability of the lane detector. Our solution consists of three parts: the proposed SIM-CycleGAN, light conditions style transfer, and the lane detection network. It requires neither additional manual annotations nor extra inference overhead. We validated our method on the lane detection benchmark CULane using ERFNet. Empirically, the lane detection model trained with our method demonstrated adaptability in low-light conditions and robustness in complex scenarios. Our code for this paper will be made publicly available.
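As a rough illustration of why style-transfer-based enhancement needs no extra annotation, the sketch below appends low-light copies of existing training images and reuses their lane labels unchanged, since a style change alters appearance but not lane geometry. It is a minimal sketch under stated assumptions, not the paper's implementation: `placeholder_low_light` is a simple gamma-and-gain darkening that stands in for a trained generator such as SIM-CycleGAN, and `enhance_dataset`, `ratio`, and `seed` are hypothetical names introduced here.

```python
import random
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]            # grayscale image, pixel values in [0, 1]
Lanes = List[List[Tuple[int, int]]]  # lane annotations as lists of (x, y) points
Sample = Tuple[Image, Lanes]


def placeholder_low_light(image: Image, gamma: float = 2.5, gain: float = 0.4) -> Image:
    """Stand-in for a trained day-to-night generator (NOT SIM-CycleGAN).

    Darkens the image with a gamma curve and a gain so the sketch runs
    without any trained weights.
    """
    return [[gain * (p ** gamma) for p in row] for row in image]


def enhance_dataset(
    samples: Sequence[Sample],
    to_low_light: Callable[[Image], Image],
    ratio: float = 0.5,   # assumed fraction of samples to style-transfer
    seed: int = 0,
) -> List[Sample]:
    """Return the original samples plus low-light copies of a random subset.

    The lane labels of each generated image are the labels of its source
    image, so no new manual annotation is involved.
    """
    rng = random.Random(seed)
    count = round(ratio * len(samples))
    chosen = sorted(rng.sample(range(len(samples)), count))
    generated = [(to_low_light(samples[i][0]), samples[i][1]) for i in chosen]
    return list(samples) + generated
```

Because the enhancement happens only when the training set is built, the lane detector itself is unchanged and its inference cost stays the same.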


CULane
