Unsupervised Anomaly Detection with Adversarial Mirrored AutoEncoders

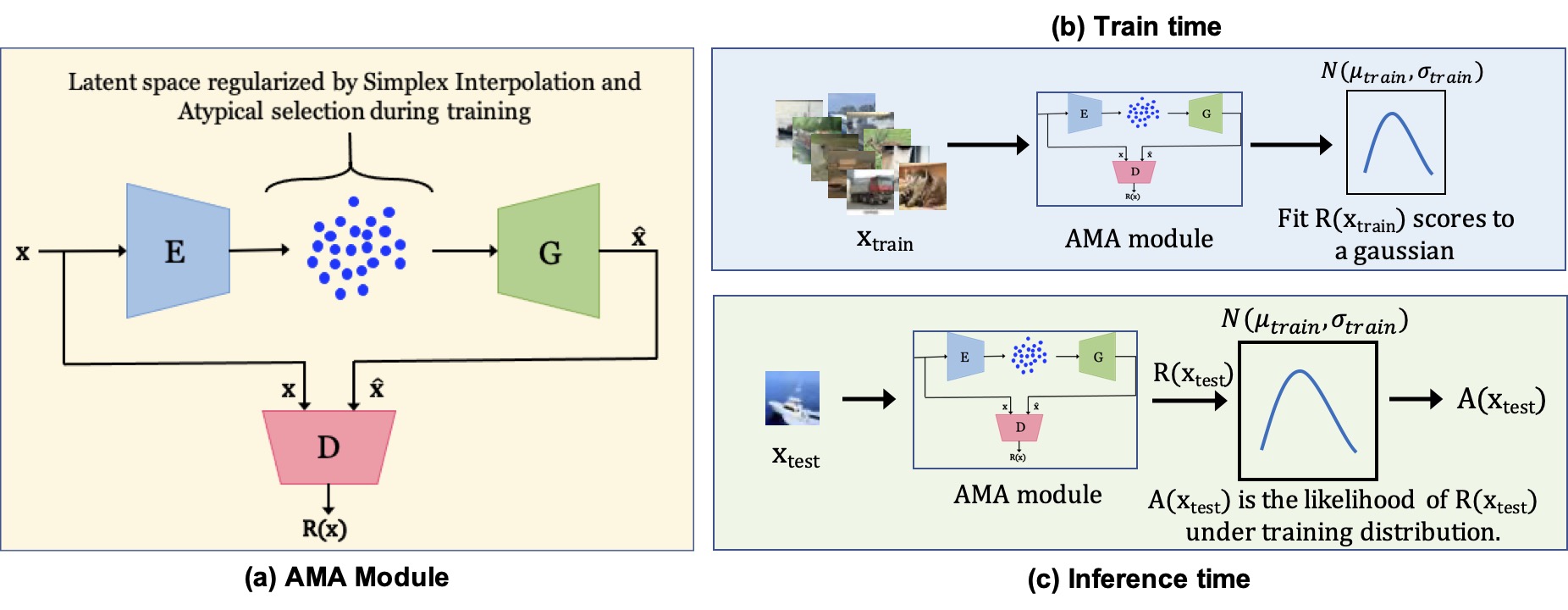

Detecting out-of-distribution (OOD) samples is of paramount importance in all machine learning applications. Deep generative modeling has emerged as a dominant paradigm for modeling complex data distributions without labels. However, prior work has shown that generative models tend to assign higher likelihoods to OOD samples than to samples from the distribution on which they were trained. We address this problem with two contributions. First, we propose the Adversarial Mirrored Autoencoder (AMA), a variant of the Adversarial Autoencoder that uses a mirrored Wasserstein loss in the discriminator to enforce better semantic-level reconstruction. We also propose a latent space regularization to learn a compact manifold for in-distribution samples. The use of AMA produces better feature representations that improve anomaly detection performance. Second, we put forward an alternative anomaly score to replace the reconstruction-based metric traditionally used in generative model-based anomaly detection methods. Our method outperforms the current state-of-the-art methods for anomaly detection on several OOD detection benchmarks.
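The abstract does not spell out the form of the mirrored Wasserstein loss. One plausible reading, assumed here purely for illustration, is that the critic scores pairs of inputs, treating `(x, x)` as the real pair and `(x, reconstruction)` as the fake pair, and that the Wasserstein objective is the gap between the mean scores of the two. The linear `critic`, the function `mirrored_critic_gap`, and the toy data below are hypothetical and are not taken from the paper.

```python
def critic(pair, weights):
    """Hypothetical toy critic: a linear score over one concatenated pair."""
    return sum(p * w for p, w in zip(pair, weights))


def mirrored_critic_gap(batch, recon_batch, weights):
    """Mean critic score on (x, x) pairs minus mean score on (x, x_hat) pairs.

    Assumption: this gap is what a Wasserstein-style critic would try to
    maximize, while the autoencoder would try to minimize it.
    """
    # List concatenation builds the paired input the critic sees.
    real = [critic(x + x, weights) for x in batch]
    fake = [critic(x + x_hat, weights) for x, x_hat in zip(batch, recon_batch)]
    return sum(real) / len(real) - sum(fake) / len(fake)


# Toy batch of two 2-dimensional samples and arbitrary critic weights.
batch = [[1.0, 2.0], [0.5, -1.0]]
weights = [0.1, 0.2, 0.3, -0.4]

# A perfect reconstruction makes the real and fake pairs identical.
gap_perfect = mirrored_critic_gap(batch, [list(x) for x in batch], weights)

# A shifted reconstruction (every coordinate off by 1) opens a gap.
shifted = [[v + 1.0 for v in x] for x in batch]
gap_shifted = mirrored_critic_gap(batch, shifted, weights)
```

Under this reading, the gap vanishes when the reconstruction matches the input, so a per-sample version of the same quantity could in principle serve as an anomaly signal. Whether the paper's alternative anomaly score takes this form is not stated in the abstract.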


CIFAR-10

SVHN
