Multimodal Sensor Fusion in Single Thermal Image Super-Resolution

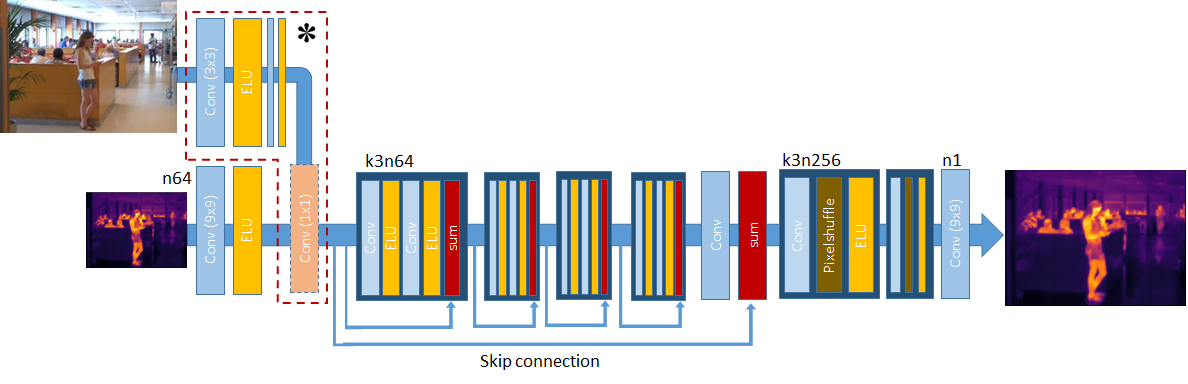

With the rapid growth of the visual surveillance and security sectors, thermal infrared images have become increasingly necessary in a large variety of industrial applications. This is true even though IR sensors are still more expensive than their RGB counterparts of the same resolution. In this paper, we propose a deep learning solution to enhance thermal image resolution. The following results are given: (I) Introduction of a multimodal, visual-thermal fusion model that addresses thermal image super-resolution by integrating high-frequency information from the visual image. (II) Investigation of different network architecture schemes in the literature, their up-sampling methods, learning procedures, and optimization functions, showing their beneficial contribution to the super-resolution problem. (III) Presentation of a benchmark dataset, ULB17-VT, that contains thermal images and their visual counterparts. (IV) Presentation of a qualitative evaluation on a large test set with 58 samples and 22 raters, which shows that our proposed model outperforms state-of-the-art methods.
