Neural Video Depth Stabilizer

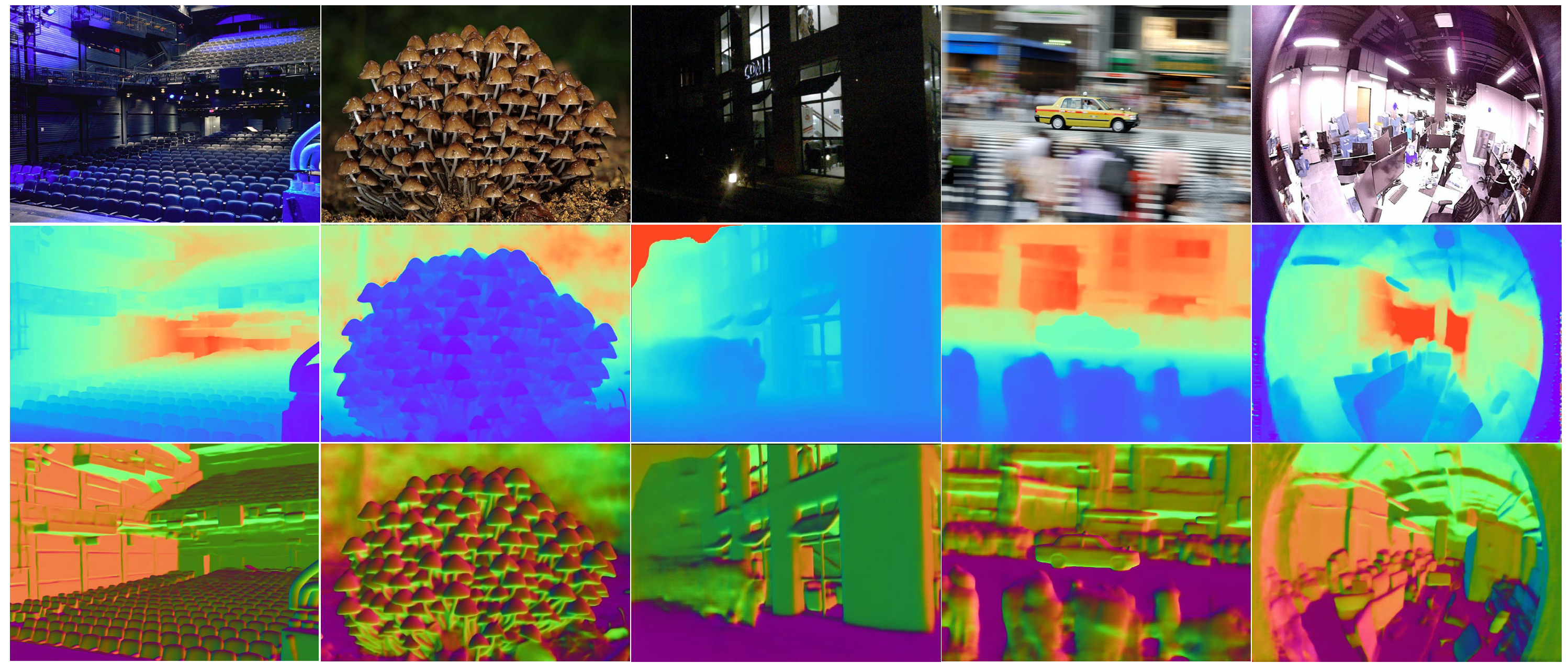

Video depth estimation aims to infer temporally consistent depth. Some methods achieve temporal consistency by finetuning a single-image depth model during test time using geometry and re-projection constraints, which is inefficient and not robust. An alternative approach is to learn how to enforce temporal consistency from data, but this requires well-designed models and sufficient video depth data. To address these challenges, we propose a plug-and-play framework called Neural Video Depth Stabilizer (NVDS) that stabilizes inconsistent depth estimations and can be applied to different single-image depth models without extra effort. We also introduce a large-scale dataset, Video Depth in the Wild (VDW), which consists of 14,203 videos with over two million frames, making it the largest natural-scene video depth dataset to our knowledge. We evaluate our method on the VDW dataset as well as two public benchmarks and demonstrate significant improvements in consistency, accuracy, and efficiency compared to previous approaches. Our work serves as a solid baseline and provides a data foundation for learning-based video depth models. We will release our dataset and code for future research.
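The abstract describes NVDS as a plug-and-play stabilizer that sits on top of any single-image depth model. Below is a minimal sketch of that composition, assuming a hypothetical interface: `depth_model` maps one frame to a depth map, and `stabilizer` maps a causal window of frames plus their initial depths to a refined depth for the newest frame. The names `stabilize_video` and `mean_stabilizer` and the default window size are illustrative assumptions, not the released NVDS API. `mean_stabilizer` is a toy temporal average that exists only so the example runs. It is not the learned NVDS network.

```python
from typing import Callable, List, Sequence

import numpy as np

# Hypothetical type aliases for illustration only.
Frame = np.ndarray  # H x W x 3 RGB frame
Depth = np.ndarray  # H x W depth (or disparity) map


def stabilize_video(
    frames: Sequence[Frame],
    depth_model: Callable[[Frame], Depth],
    stabilizer: Callable[[Sequence[Frame], Sequence[Depth]], Depth],
    window: int = 4,  # hypothetical default; not taken from the paper
) -> List[Depth]:
    """Compose any single-image depth model with a windowed stabilizer."""
    if window < 1:
        raise ValueError("window must be >= 1")
    # Stage 1: per-frame, possibly flickering, depth from an arbitrary model.
    initial = [depth_model(f) for f in frames]
    stabilized: List[Depth] = []
    for t in range(len(frames)):
        # Causal sliding window ending at frame t (shorter at the video start).
        start = max(0, t - window + 1)
        stabilized.append(stabilizer(frames[start:t + 1], initial[start:t + 1]))
    return stabilized


def mean_stabilizer(frames: Sequence[Frame], depths: Sequence[Depth]) -> Depth:
    # Toy stand-in, NOT NVDS: averages the depth maps in the window.
    return np.mean(np.stack(depths), axis=0)


# Usage: a flickering toy video whose per-frame depth alternates 1, 3, 1, 3.
frames = [np.full((2, 2, 3), v, dtype=np.float64) for v in (1.0, 3.0, 1.0, 3.0)]
toy_depth_model = lambda f: f.mean(axis=2)  # hypothetical single-image model
result = stabilize_video(frames, toy_depth_model, mean_stabilizer, window=2)
```

The depth model is just a callable, so you can swap in a different single-image estimator without touching the stabilizer. That is the property the abstract calls plug-and-play.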

ICCV 2023

Results from the Paper

Ranked #16 on Monocular Depth Estimation on NYU-Depth V2 (using extra training data)


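| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Uses Extra Training Data |
|---|---|---|---|---|---|---|
| Monocular Depth Estimation | NYU-Depth V2 | NVDS(DPT-L) | RMSE | 0.282 | #16 | |
| Monocular Depth Estimation | NYU-Depth V2 | NVDS(DPT-L) | Absolute relative error | 0.072 | #13 | |
| Monocular Depth Estimation | NYU-Depth V2 | NVDS(DPT-L) | δ < 1.25 | 0.9493 | #16 | |
| Monocular Depth Estimation | NYU-Depth V2 | NVDS(DPT-L) | δ < 1.25² | 0.991 | #21 | |
| Monocular Depth Estimation | NYU-Depth V2 | NVDS(DPT-L) | δ < 1.25³ | 0.997 | #27 | |
| Monocular Depth Estimation | NYU-Depth V2 | NVDS(DPT-L) | log10 | 0.031 | #13 | |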
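The metrics in the table follow conventions commonly used for NYU-Depth V2. Lower is better for RMSE, absolute relative error, and log10. Higher is better for the δ thresholds. Below is a sketch of their usual definitions, assuming per-pixel predicted depth `pred` and ground truth `gt`, with invalid ground-truth pixels marked as non-positive. The leaderboard's exact protocol (evaluation crop, depth cap, any scale alignment of relative predictions) is not stated here, so treat this as illustrative. The function name `depth_metrics` is hypothetical.

```python
import numpy as np


def depth_metrics(pred, gt, eps=1e-8):
    """Standard monocular depth metrics over valid ground-truth pixels."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    valid = gt > 0  # assumption: non-positive ground truth marks missing depth
    if not np.any(valid):
        raise ValueError("no valid ground-truth pixels")
    g = gt[valid]
    p = np.clip(pred[valid], eps, None)  # keep logs and ratios finite
    # Per-pixel ratio used by the threshold accuracies delta < 1.25^k.
    ratio = np.maximum(p / g, g / p)
    return {
        "rmse": float(np.sqrt(np.mean((p - g) ** 2))),
        "abs_rel": float(np.mean(np.abs(p - g) / g)),
        "log10": float(np.mean(np.abs(np.log10(p) - np.log10(g)))),
        "delta1": float(np.mean(ratio < 1.25)),
        "delta2": float(np.mean(ratio < 1.25 ** 2)),
        "delta3": float(np.mean(ratio < 1.25 ** 3)),
    }
```

For example, a prediction that is uniformly twice the ground truth has an absolute relative error of 1.0 and fails all three δ thresholds, because a ratio of 2 exceeds 1.25³ ≈ 1.95.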

Datasets: ADE20K, NYUv2, WSVD, IRS