Pixel-in-Pixel Net: Towards Efficient Facial Landmark Detection in the Wild

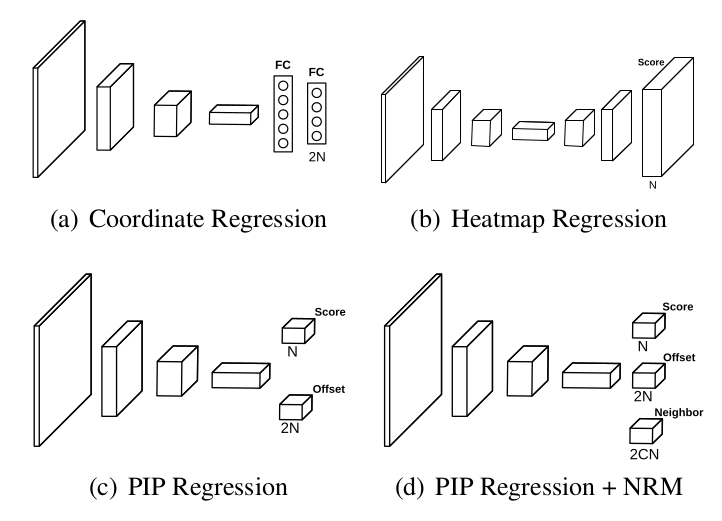

Heatmap regression models have recently become popular because of their superior performance in locating facial landmarks. However, three major problems remain with these models: (1) they are computationally expensive; (2) they usually lack explicit constraints on global shape; and (3) domain gaps are common. To address these problems, we propose Pixel-in-Pixel Net (PIPNet) for facial landmark detection. The proposed model is equipped with a novel detection head based on heatmap regression, which predicts scores and offsets simultaneously on low-resolution feature maps. As a result, repeated upsampling layers are no longer necessary, which greatly reduces inference time without sacrificing accuracy. In addition, a simple but effective neighbor regression module enforces local constraints by fusing predictions from neighboring landmarks, which makes the new detection head more robust. To further improve the cross-domain generalization of PIPNet, we propose self-training with curriculum. This training strategy mines more reliable pseudo-labels from unlabeled data across domains by starting with an easier task and then gradually increasing the difficulty to provide more precise labels. Extensive experiments demonstrate the superiority of PIPNet, which obtains state-of-the-art results on three out of six popular benchmarks under the supervised setting. Results on two cross-domain test sets also improve consistently over the baselines. Notably, our lightweight version of PIPNet runs at 35.7 FPS on CPU and 200 FPS on GPU while maintaining accuracy competitive with state-of-the-art methods. The code of PIPNet is available at https://github.com/jhb86253817/PIPNet.
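To make the idea of predicting scores and offsets on a low-resolution feature map more concrete, here is a minimal sketch of how one landmark could be decoded from such outputs. It is not the PIPNet implementation. The function name `decode_landmark`, the use of plain nested lists, the assumption that offsets are fractions of a grid cell, and the stride value are all hypothetical choices made for illustration.

```python
def decode_landmark(score, off_x, off_y, stride):
    """Decode one landmark from low-resolution score and offset maps.

    Assumptions (not taken from the paper): `score`, `off_x`, and `off_y`
    are H x W nested lists, offsets are fractions of one grid cell, and
    `stride` is the downsampling factor of the feature map.
    Returns (x, y) in input-image pixels.
    """
    height, width = len(score), len(score[0])
    # Pick the grid cell with the highest score.
    best_r, best_c = max(
        ((r, c) for r in range(height) for c in range(width)),
        key=lambda rc: score[rc[0]][rc[1]],
    )
    # Refine the coarse cell position with the offsets predicted for that
    # cell, then scale back to input resolution. No upsampling is needed.
    x = (best_c + off_x[best_r][best_c]) * stride
    y = (best_r + off_y[best_r][best_c]) * stride
    return x, y


# Toy 2x2 maps for a single landmark with a hypothetical stride of 32.
score = [[0.1, 0.2], [0.9, 0.3]]
off_x = [[0.0, 0.0], [0.25, 0.0]]
off_y = [[0.0, 0.0], [0.5, 0.0]]
landmark = decode_landmark(score, off_x, off_y, stride=32)
```

In this toy case the highest score is in row 1, column 0, so the offsets of that cell move the estimate to a sub-cell position before it is scaled by the stride. The coarse grid only has to identify the right cell, while the offsets recover the precision that would otherwise require upsampling.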

CelebA

300W

AFLW

COFW

WFLW

AFLW-19