Outlier Exposure with Confidence Control for Out-of-Distribution Detection

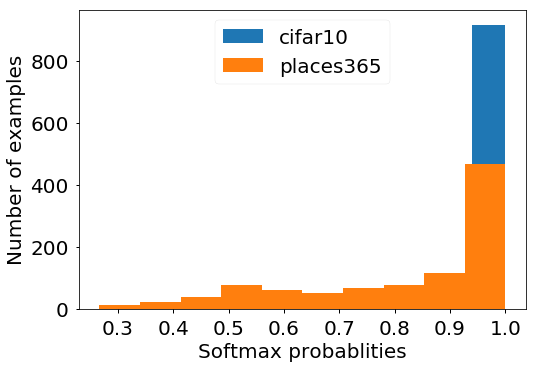

Deep neural networks have achieved great success in classification tasks in recent years. However, one major obstacle on the path toward artificial intelligence is the inability of neural networks to accurately detect samples from novel class distributions; as a result, most existing classification algorithms assume that all classes are known before the training stage. In this work, we propose a methodology for training a neural network that allows it to efficiently detect out-of-distribution (OOD) examples without sacrificing much of its classification accuracy on test examples from known classes. We propose a novel loss function that gives rise to a novel method, Outlier Exposure with Confidence Control (OECC), which, used with Outlier Exposure (OE), achieves superior OOD detection results on both image and text classification tasks without requiring access to the OOD samples encountered at test time. Additionally, we show experimentally that combining OECC with state-of-the-art post-training OOD detection methods, such as the Mahalanobis Detector (MD) and the Gramian Matrices (GM) method, further improves their OOD detection performance, demonstrating the potential of combining training and post-training methods for OOD detection.
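The abstract does not state the OECC loss explicitly. As a rough illustration of how a training-time OOD objective of this kind can be assembled, the sketch below combines three terms: standard cross-entropy on labelled in-distribution samples, a confidence-control penalty that keeps the mean maximum softmax probability close to the training accuracy (`train_acc`), and an OE-style penalty that pushes the softmax output on auxiliary outlier samples toward the uniform distribution. The exact form of each term, the helper names (`softmax`, `log_softmax`, `oecc_style_loss`), and the default weights `lambda1` and `lambda2` are assumptions for illustration, not the authors' reference implementation.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one vector of class logits."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Log-softmax computed directly, avoiding log(0) for extreme logits."""
    m = max(logits)
    log_total = math.log(sum(math.exp(z - m) for z in logits))
    return [z - m - log_total for z in logits]


def oecc_style_loss(
    in_logits: Sequence[Sequence[float]],
    in_labels: Sequence[int],
    out_logits: Sequence[Sequence[float]],
    train_acc: float,
    lambda1: float = 0.1,  # hypothetical weight for the confidence-control term
    lambda2: float = 0.1,  # hypothetical weight for the outlier term
) -> float:
    """Assumed three-term objective (sketch only):

    L = mean_in[-log p_y]
        + lambda1 * |train_acc - mean_in[max_l p_l]|
        + lambda2 * mean_out[sum_l (p_l - 1/K)^2]
    """
    if not in_logits or len(in_logits) != len(in_labels):
        raise ValueError("need a non-empty in-distribution batch with one label per sample")

    # Term 1: cross-entropy on labelled in-distribution samples.
    ce = sum(-log_softmax(z)[y] for z, y in zip(in_logits, in_labels)) / len(in_logits)

    # Term 2: keep the average max-softmax confidence close to the training accuracy.
    avg_conf = sum(max(softmax(z)) for z in in_logits) / len(in_logits)
    conf_term = abs(train_acc - avg_conf)

    # Term 3: squared Euclidean distance of each outlier's softmax from uniform (1/K per class).
    out_term = 0.0
    if out_logits:
        for z in out_logits:
            p = softmax(z)
            k = len(p)
            out_term += sum((pl - 1.0 / k) ** 2 for pl in p)
        out_term /= len(out_logits)

    return ce + lambda1 * conf_term + lambda2 * out_term


if __name__ == "__main__":
    demo = oecc_style_loss(
        in_logits=[[2.0, 0.5, -1.0], [0.2, 1.5, 0.3]],
        in_labels=[0, 1],
        out_logits=[[0.1, 0.0, -0.1]],
        train_acc=0.9,
    )
    print(f"loss = {demo:.4f}")
```

Plain Python keeps the sketch dependency-free; a real training loop would compute the same terms on framework tensors so they can be differentiated, and would tune `lambda1` and `lambda2` per dataset.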

Results from the Paper

Ranked #1 on Out-of-Distribution Detection on CIFAR-100 vs SVHN (using extra training data)


Datasets: CIFAR-10, CIFAR-100, SVHN, Places, LSUN, Tiny Images