Street TryOn: Learning In-the-Wild Virtual Try-On from Unpaired Person Images

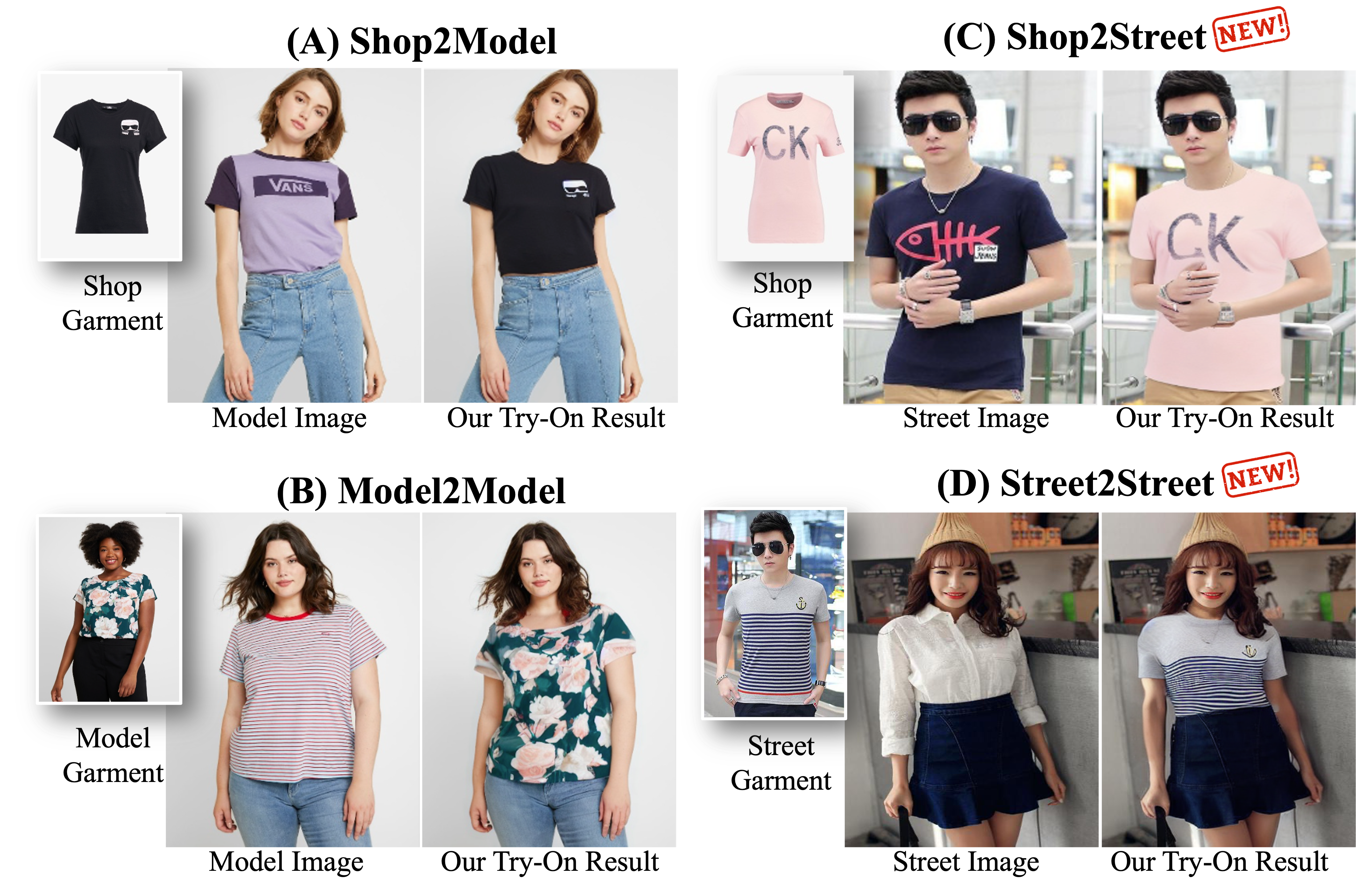

Most existing methods for virtual try-on focus on studio person images with a limited range of poses and clean backgrounds. They can achieve plausible results for this studio try-on setting by learning to warp a garment image to fit a person's body from paired training data, i.e., garment images paired with images of people wearing the same garment. Such data is often collected from commercial websites, where each garment is demonstrated both by itself and on several models. By contrast, it is hard to collect paired data for in-the-wild scenes, and therefore, virtual try-on for casual images of people with more diverse poses against cluttered backgrounds is rarely studied. In this work, we fill the gap by introducing a StreetTryOn benchmark to evaluate in-the-wild virtual try-on performance and proposing a novel method that can learn it without paired data, from a set of in-the-wild person images directly. Our method achieves robust performance across shop and street domains using a novel DensePose warping correction method combined with diffusion-based conditional inpainting. Our experiments show competitive performance for standard studio try-on tasks and SOTA performance for street try-on and cross-domain try-on tasks.

StreetTryOn

DeepFashion

DensePose

VITON-HD
