Trajectory Space Factorization for Deep Video-Based 3D Human Pose Estimation

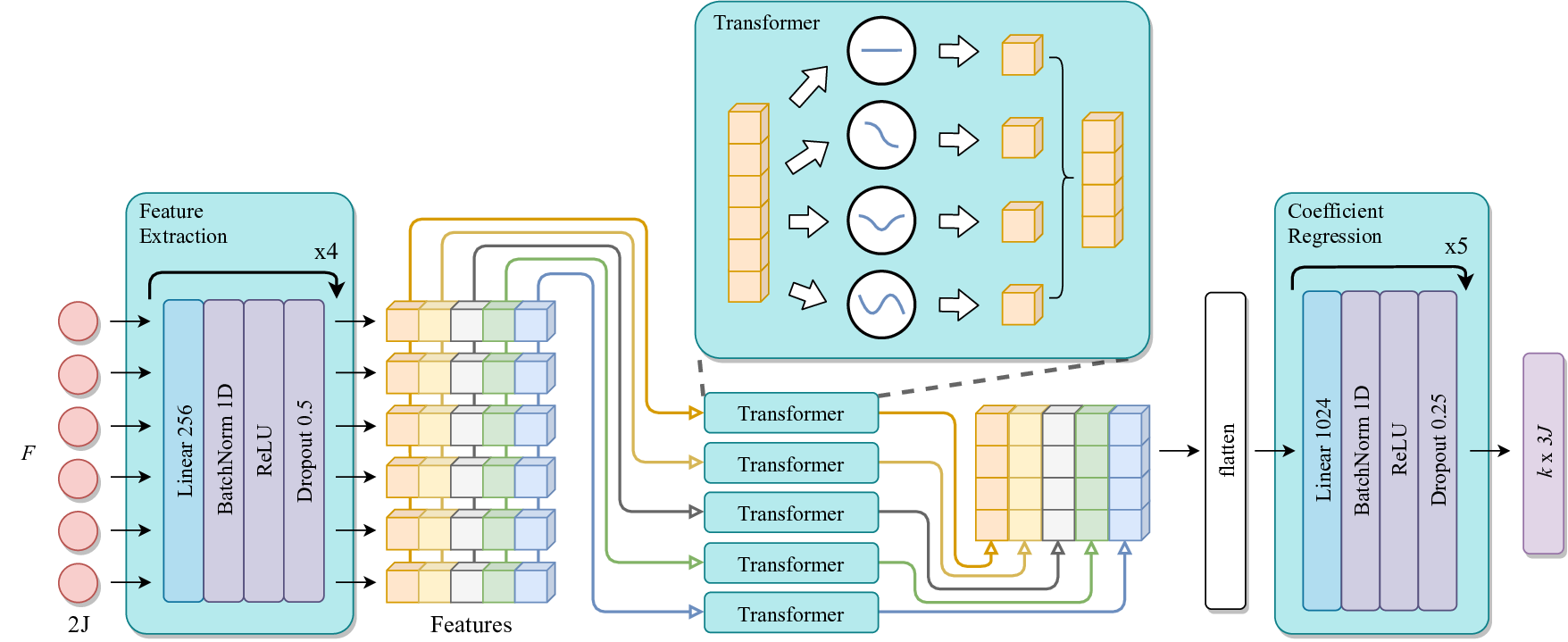

Existing deep learning approaches to 3D human pose estimation from video are based on either Recurrent or Convolutional Neural Networks (RNNs or CNNs). However, RNN-based frameworks can only handle sequences with a limited number of frames, because sequential models are sensitive to bad frames and tend to drift over long sequences. Although existing CNN-based temporal frameworks attempt to address the sensitivity and drift problems by processing all input frames in the sequence concurrently, the existing state-of-the-art CNN-based framework is limited to estimating the 3D pose of a single frame from a sequential input. In this paper, we propose a deep learning-based framework that uses matrix factorization for sequential 3D human pose estimation. Our approach processes all input frames concurrently to avoid the sensitivity and drift problems, and yet outputs 3D pose estimates for every frame in the input sequence. More specifically, the 3D poses in all frames are represented as a motion matrix that is factorized into a trajectory bases matrix and a trajectory coefficient matrix. The trajectory bases matrix is precomputed using matrix factorization approaches such as Singular Value Decomposition (SVD) or Discrete Cosine Transform (DCT), and the problem of sequential 3D pose estimation is reduced to training a deep network to regress the trajectory coefficient matrix. We demonstrate the effectiveness of our framework on long sequences by achieving state-of-the-art performance on multiple benchmark datasets. Our source code is available at: https://github.com/jiahaoLjh/trajectory-pose-3d.
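To make the factorization concrete, the following is a minimal NumPy sketch of the idea, not the released implementation. It assumes the motion matrix is laid out as frames by flattened joint coordinates (T x 3J), that the trajectory bases are the first K orthonormal DCT-II vectors, and that a least-squares projection stands in for the coefficients a trained network would regress. The function names and the synthetic motion are hypothetical and chosen only for illustration.

```python
import numpy as np


def dct_trajectory_bases(num_frames, num_bases):
    """Return a (num_frames, num_bases) matrix of orthonormal DCT-II basis vectors."""
    t = np.arange(num_frames)
    k = np.arange(num_bases)
    # Column k is a cosine of frequency k sampled at the frame centres.
    bases = np.cos(np.pi * (2 * t[:, None] + 1) * k[None, :] / (2 * num_frames))
    bases *= np.sqrt(2.0 / num_frames)
    bases[:, 0] /= np.sqrt(2.0)  # rescale the constant column to unit norm
    return bases


def project_to_coefficients(motion, bases):
    """Coefficient matrix (K x 3J): projection of the motion onto the bases.

    In the framework described above a deep network regresses this matrix
    from the input frames; the projection here is only a stand-in.
    """
    return bases.T @ motion


def reconstruct_motion(coeffs, bases):
    """Recover per-frame poses (T x 3J) as bases @ coefficients."""
    return bases @ coeffs


# Hypothetical sizes: 50 frames, 17 joints, 8 retained bases.
num_frames, num_joints, num_bases = 50, 17, 8
rng = np.random.default_rng(0)

# Synthetic smooth joint trajectories, used only to exercise the code.
time = np.linspace(0.0, 1.0, num_frames)[:, None]
freq = rng.uniform(0.5, 1.5, size=(1, 3 * num_joints))
phase = rng.uniform(0.0, 2.0 * np.pi, size=(1, 3 * num_joints))
motion = np.sin(2.0 * np.pi * freq * time + phase)

bases = dct_trajectory_bases(num_frames, num_bases)
coeffs = project_to_coefficients(motion, bases)
recon = reconstruct_motion(coeffs, bases)

print("coefficient matrix shape:", coeffs.shape)
print("max abs reconstruction error:", np.abs(motion - recon).max())
```

Because the bases are fixed in advance, the output for a whole sequence is described by K x 3J numbers rather than T x 3J, and every frame's pose is recovered by a single matrix product. Keeping all T bases makes the reconstruction exact; truncating to K < T trades accuracy for a smoother, lower-dimensional target. An SVD-based variant would instead take the bases from the singular vectors of training trajectories.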

Human3.6M

MPI-INF-3DHP

LSP
