VITA: Video Instance Segmentation via Object Token Association

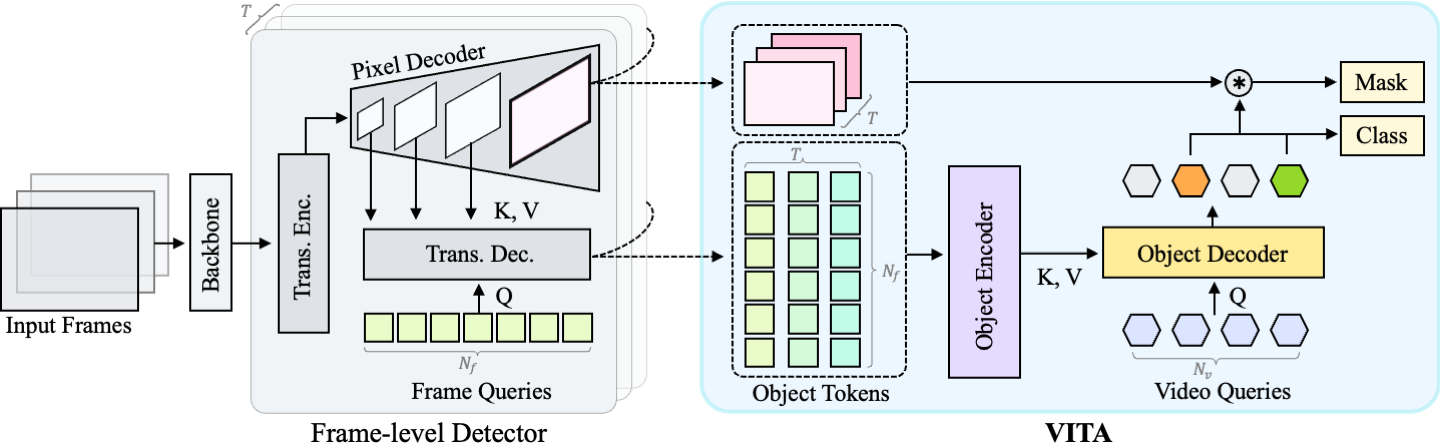

We introduce a novel paradigm for offline Video Instance Segmentation (VIS), based on the hypothesis that explicit object-oriented information can be a strong clue for understanding the context of the entire sequence. To this end, we propose VITA, a simple structure built on top of an off-the-shelf Transformer-based image instance segmentation model. Specifically, we use an image object detector as a means of distilling object-specific contexts into object tokens. VITA accomplishes video-level understanding by associating frame-level object tokens without using spatio-temporal backbone features. By effectively building relationships between objects using this condensed information, VITA achieves state-of-the-art results on VIS benchmarks with a ResNet-50 backbone: 49.8 AP and 45.7 AP on YouTube-VIS 2019 and 2021, respectively, and 19.6 AP on OVIS. Moreover, thanks to its object token-based structure that is disjoint from the backbone features, VITA shows several practical advantages that previous offline VIS methods have not explored: handling long and high-resolution videos with a common GPU, and freezing a frame-level detector trained on the image domain. Code is available at https://github.com/sukjunhwang/VITA.
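The idea of associating frame-level object tokens can be illustrated with a toy example. This is a minimal, hypothetical sketch, not the VITA implementation (which lives in the repository above). It assumes a single-head, unprojected scaled dot-product cross-attention in which a set of video-level queries attends to the object tokens of all frames at once, followed by a dot-product mask head between the resulting video-level embeddings and per-frame pixel embeddings. The function names `associate_object_tokens` and `predict_masks`, the absence of learned projections, and all shapes are illustrative assumptions. In this sketch the association step reads only object tokens; per-frame pixel embeddings appear only in the final mask head.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def associate_object_tokens(frame_tokens, video_queries):
    """Hypothetical single-head cross-attention over object tokens.

    frame_tokens:  T frames, each a list of N object-token vectors (dim D)
    video_queries: Nv query vectors (dim D)
    returns:       Nv video-level embeddings (dim D)
    """
    # Flatten the T x N frame-level tokens into one sequence of length T*N,
    # so every video query can relate objects across all frames at once.
    tokens = [tok for frame in frame_tokens for tok in frame]
    dim = len(video_queries[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for q in video_queries:
        weights = softmax([dot(q, k) * scale for k in tokens])
        # Tokens serve as both keys and values here (no learned projections).
        outputs.append(
            [sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)]
        )
    return outputs


def predict_masks(video_embeds, pixel_embeds):
    """Assumed dot-product mask head: masks[q][t][p] is a mask logit.

    pixel_embeds: T frames, each a list of P pixel-embedding vectors (dim D)
    """
    return [
        [[dot(v, px) for px in frame] for frame in pixel_embeds]
        for v in video_embeds
    ]


# Usage: T=2 frames, N=2 object tokens per frame, D=2.
frames = [[[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]]
queries = [[10.0, 0.0], [0.0, 10.0]]  # Nv=2 video-level queries
video = associate_object_tokens(frames, queries)
pixels = [[[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]] * 2  # P=3 pixels per frame
masks = predict_masks(video, pixels)
```

Because attention in this sketch runs over T×N condensed object tokens rather than over every spatio-temporal feature position, the cost of the association step grows with the number of tokens per frame instead of with spatial resolution. That is the intuition behind the long-video and high-resolution advantages claimed above.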


Results from the Paper

Ranked #11 on Video Instance Segmentation on YouTube-VIS 2021 (using extra training data)

Datasets

MS COCO

YouTube-VIS 2019

OVIS