Depth Estimation

799 papers with code • 14 benchmarks • 70 datasets

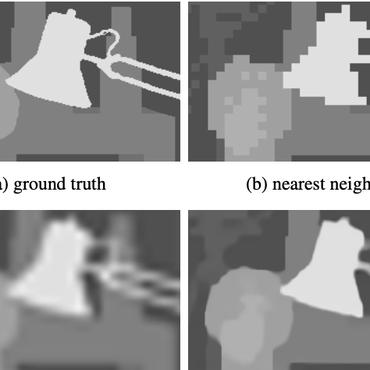

Depth Estimation is the task of estimating the distance of each pixel from the camera. Depth is inferred from either monocular (single-image) or stereo (multiple views of a scene) input. Traditional methods use multi-view geometry to establish correspondences between the images. Newer methods estimate depth directly, either by minimizing a regression loss against ground-truth depth or by learning to synthesize a novel view from an image sequence. The most popular benchmarks are KITTI and NYUv2. Models are typically evaluated with a root mean squared error (RMSE) metric.
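The stereo geometry and the RMS metric described above can be sketched in a few lines of Python with NumPy. For a rectified stereo pair, depth follows from disparity as Z = f·B/d, where f is the focal length in pixels, B is the camera baseline, and d is the disparity in pixels. This is a minimal sketch, not any benchmark's official evaluation code: the function names are hypothetical, and the convention that invalid ground-truth pixels are stored as 0 or NaN is an assumption. Benchmarks such as KITTI and NYUv2 define their own valid-pixel masks, depth caps, and metric variants.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert a disparity map (pixels) to depth (metres) for a rectified stereo pair.

    Uses Z = f * B / d. Pixels with non-positive disparity have no valid
    depth and are set to NaN.
    """
    disparity = np.asarray(disparity, dtype=np.float64)
    depth = np.full_like(disparity, np.nan)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


def rmse(pred, gt):
    """Root mean squared error over pixels whose ground truth is finite and positive.

    Assumption: invalid ground-truth pixels are encoded as 0 or NaN.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    mask = np.isfinite(gt) & (gt > 0)
    return float(np.sqrt(np.mean((pred[mask] - gt[mask]) ** 2)))
```

With made-up values f = 700 px and B = 0.5 m, a disparity of 10 px maps to a depth of 35 m and a disparity of 40 px maps to 8.75 m. Because depth is inversely proportional to disparity, a one-pixel disparity error costs far more depth accuracy for distant points than for near ones.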


Latest papers with no code

SGFormer: Spherical Geometry Transformer for 360 Depth Estimation

Panoramic distortion poses a significant challenge in 360° depth estimation, and it is particularly pronounced at the north and south poles.
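A short illustration of why this distortion concentrates at the poles; it is not taken from the SGFormer paper. It assumes the equirectangular format commonly used for 360° images: every image row spans the full 360° of longitude with the same pixel width, while the corresponding circle on the sphere shrinks in proportion to cos φ at latitude φ. Each row is therefore stretched horizontally by a factor of 1/cos φ, which grows without bound toward the poles. The helper names below are hypothetical.

```python
import math


def row_latitude(row, height):
    """Latitude (radians) at the centre of an equirectangular image row.

    Row 0 is at the top; +pi/2 is the north pole and -pi/2 the south pole.
    """
    return math.pi / 2 - (row + 0.5) * math.pi / height


def horizontal_stretch(row, height):
    """Factor by which a row is stretched horizontally relative to the sphere (1 / cos(latitude))."""
    return 1.0 / math.cos(row_latitude(row, height))
```

For a 512-row panorama, the rows next to the equator are stretched by a factor of almost exactly 1, while the top and bottom rows are stretched by roughly 326 (about 1024/π).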

Mining Supervision for Dynamic Regions in Self-Supervised Monocular Depth Estimation

In the next stage, we use an object network to estimate the depth of those moving objects assuming rigid motions.

Self-Supervised Monocular Depth Estimation in the Dark: Towards Data Distribution Compensation

In this paper, we propose a self-supervised nighttime monocular depth estimation method that does not use any night images during training.

GScream: Learning 3D Geometry and Feature Consistent Gaussian Splatting for Object Removal

This paper tackles the intricate challenge of object removal, updating the radiance field using 3D Gaussian Splatting.

High-fidelity Endoscopic Image Synthesis by Utilizing Depth-guided Neural Surfaces

In surgical oncology, screening colonoscopy plays a pivotal role in providing diagnostic assistance, such as biopsy, and facilitating surgical navigation, particularly in polyp detection.

BLINK: Multimodal Large Language Models Can See but Not Perceive

We introduce Blink, a new benchmark for multimodal large language models (LLMs) that focuses on core visual perception abilities not found in other evaluations.

SPIdepth: Strengthened Pose Information for Self-supervised Monocular Depth Estimation

Our approach represents a significant leap forward in self-supervised monocular depth estimation, underscoring the importance of strengthening pose information for advancing scene understanding in real-world applications.

How to deal with glare for improved perception of Autonomous Vehicles

In this paper, we investigate various glare reduction techniques, including the proposed saturated pixel-aware glare reduction technique, to improve the performance of the computer vision (CV) tasks employed by the perception layer of autonomous vehicles (AVs).

Digging into contrastive learning for robust depth estimation with diffusion models

In this paper, we propose a novel robust depth estimation method called D4RD, featuring a custom contrastive learning mode tailored for diffusion models to mitigate performance degradation in complex environments.

In My Perspective, In My Hands: Accurate Egocentric 2D Hand Pose and Action Recognition

Our study aims to fill this research gap by exploring the field of 2D hand pose estimation for egocentric action recognition, making two contributions.

Datasets

Cityscapes

KITTI

ScanNet

NYUv2

Matterport3D

Middlebury

TUM RGB-D

SUNCG

Taskonomy

2D-3D-S