Scene Graph Generation

110 papers with code • 5 benchmarks • 7 datasets

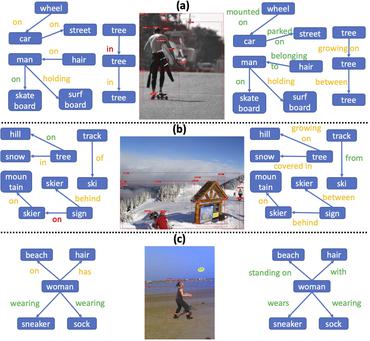

A scene graph is a structured representation of an image in which nodes correspond to object bounding boxes with their object categories, and edges correspond to pairwise relationships between those objects. The task of Scene Graph Generation is to generate a visually grounded scene graph that most accurately corresponds to an image.
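The definition above can be sketched as a small data structure. This is a minimal illustration only, not the representation used by any particular paper or library: the names `ObjectNode`, `SceneGraph`, `add_node`, `add_edge`, and `triplets` are hypothetical, and the boxes are assumed to be `(x1, y1, x2, y2)` pixel coordinates.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(frozen=True)
class ObjectNode:
    """A node: an object category plus its bounding box (assumed x1, y1, x2, y2)."""
    category: str
    box: Tuple[float, float, float, float]


@dataclass
class SceneGraph:
    """Nodes are objects; edges are (subject index, predicate, object index)."""
    nodes: List[ObjectNode] = field(default_factory=list)
    edges: List[Tuple[int, str, int]] = field(default_factory=list)

    def add_node(self, category: str, box: Tuple[float, float, float, float]) -> int:
        """Append an object node and return its index."""
        self.nodes.append(ObjectNode(category, box))
        return len(self.nodes) - 1

    def add_edge(self, subject: int, predicate: str, obj: int) -> None:
        """Add a directed pairwise relationship between two existing nodes."""
        for index in (subject, obj):
            if not 0 <= index < len(self.nodes):
                raise IndexError(f"node index {index} is out of range")
        self.edges.append((subject, predicate, obj))

    def triplets(self) -> List[Tuple[str, str, str]]:
        """Return edges as (subject category, predicate, object category)."""
        return [
            (self.nodes[s].category, p, self.nodes[o].category)
            for s, p, o in self.edges
        ]


# Illustrative values only; these boxes do not come from any dataset.
graph = SceneGraph()
man = graph.add_node("man", (10, 20, 110, 300))
horse = graph.add_node("horse", (60, 120, 320, 330))
graph.add_edge(man, "riding", horse)
print(graph.triplets())  # [('man', 'riding', 'horse')]
```

A generation model would fill such a structure from an image by predicting the boxes, the categories, and the predicate for each related pair of objects. Storing edges as index triplets keeps each relationship tied to a specific box, which is what makes the graph visually grounded.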

Libraries

Use these libraries to find Scene Graph Generation models and implementations

Latest papers with no code

Expanding Scene Graph Boundaries: Fully Open-vocabulary Scene Graph Generation via Visual-Concept Alignment and Retention

For the more challenging settings of relation-involved open vocabulary SGG, the proposed approach integrates relation-aware pre-training utilizing image-caption data and retains visual-concept alignment through knowledge distillation.

Two Stream Scene Understanding on Graph Embedding

This architecture utilizes a graph feature stream and an image feature stream, aiming to merge the strengths of both modalities for improved performance in image classification and scene graph generation tasks.

Towards a Unified Transformer-based Framework for Scene Graph Generation and Human-object Interaction Detection

In light of this, we introduce SG2HOI+, a unified one-step model based on the Transformer architecture.

Semantic Scene Graph Generation Based on an Edge Dual Scene Graph and Message Passing Neural Network

Along with generative AI, interest in scene graph generation (SGG), which comprehensively captures the relationships and interactions between objects in an image and creates a structured graph-based representation, has significantly increased in recent years.

FloCoDe: Unbiased Dynamic Scene Graph Generation with Temporal Consistency and Correlation Debiasing

To address the long-tail issue of visual relationships, we propose correlation debiasing and a label correlation-based loss to learn unbiased relation representations for long-tailed classes.

VidCoM: Fast Video Comprehension through Large Language Models with Multimodal Tools

Building models that comprehend videos and respond to specific user instructions is a practical and challenging topic, as it requires mastery of both vision understanding and knowledge reasoning.

TextPSG: Panoptic Scene Graph Generation from Textual Descriptions

To tackle this problem, we propose a new framework, TextPSG, consisting of four modules, i.e., a region grouper, an entity grounder, a segment merger, and a label generator, with several novel techniques.

Domain-wise Invariant Learning for Panoptic Scene Graph Generation

Panoptic Scene Graph Generation (PSG) involves the detection of objects and the prediction of their corresponding relationships (predicates).

Logical Bias Learning for Object Relation Prediction

Scene graph generation (SGG) aims to automatically map an image into a semantic structural graph for better scene understanding.

Predicate Classification Using Optimal Transport Loss in Scene Graph Generation

In scene graph generation (SGG), learning with cross-entropy loss yields biased predictions owing to the severe imbalance in the distribution of the relationship labels in the dataset.

Datasets

MS COCO

Visual Genome

VRD

3DSSG

3RScan

PSG Dataset

4D-OR