Attention-guided Chained Context Aggregation for Semantic Segmentation

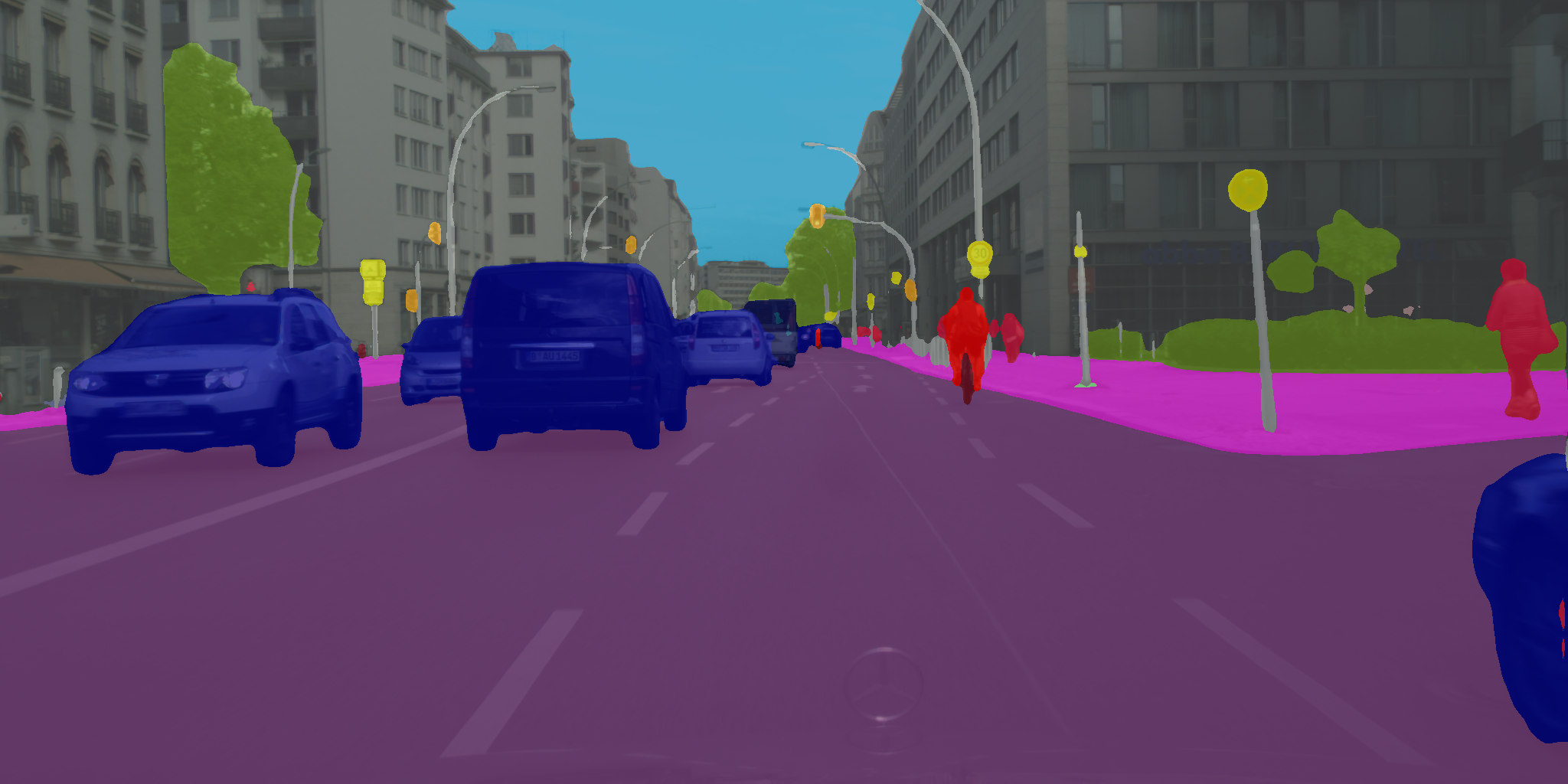

The way features propagate in Fully Convolutional Networks is crucial for capturing multi-scale contexts and obtaining precise segmentation masks. This paper proposes a novel series-parallel hybrid paradigm called the Chained Context Aggregation Module (CAM) to diversify feature propagation. CAM obtains features of various spatial scales through chain-connected, ladder-style information flows and fuses them in a two-stage process, namely pre-fusion and re-fusion. The serial flow continuously enlarges the receptive fields of output neurons, while the parallel flows encode different region-based contexts. Each information flow is a shallow encoder-decoder with appropriate down-sampling scales to sufficiently capture contextual information. We further adopt an attention model in CAM to guide feature re-fusion. Based on these developments, we construct the Chained Context Aggregation Network (CANet), which employs an asymmetric decoder to recover precise spatial details of prediction maps. We conduct extensive experiments on six challenging datasets, including Pascal VOC 2012, Pascal Context, Cityscapes, CamVid, SUN-RGBD and GATECH. The results show that CANet achieves state-of-the-art performance.
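The chained flow structure described in the abstract can be illustrated with a toy NumPy sketch. This is not the authors' implementation. It assumes that each flow is reduced to average pooling followed by nearest-neighbour upsampling (no learned convolutions), that pre-fusion is an element-wise sum of the input with the previous flow's output, and that attention-guided re-fusion is a per-channel softmax over flows computed from global averages. The function names and the `scales` parameter are hypothetical.

```python
import numpy as np


def avg_pool(x, s):
    """Average-pool a (C, H, W) map by integer factor s (H and W divisible by s)."""
    c, h, w = x.shape
    return x.reshape(c, h // s, s, w // s, s).mean(axis=(2, 4))


def upsample(x, s):
    """Nearest-neighbour upsampling of a (C, H, W) map by integer factor s."""
    return x.repeat(s, axis=1).repeat(s, axis=2)


def flow(x, s):
    """Toy stand-in for one shallow encoder-decoder flow: down-sample, then up-sample."""
    return upsample(avg_pool(x, s), s)


def chained_context_aggregation(x, scales=(2, 4, 8)):
    """Chain several flows, then fuse their outputs with attention weights.

    Assumptions (not from the paper): sum-based pre-fusion and a
    softmax-over-flows channel attention for re-fusion.
    """
    outputs = []
    prev = np.zeros_like(x)
    for s in scales:
        # Pre-fusion: combine the shared input with the previous flow's output,
        # so each later flow sees an increasingly large context.
        prev = flow(x + prev, s)
        outputs.append(prev)

    stacked = np.stack(outputs)                      # (F, C, H, W)
    # Re-fusion: one attention score per flow and channel (global average).
    scores = stacked.mean(axis=(2, 3))               # (F, C)
    exp = np.exp(scores - scores.max(axis=0, keepdims=True))
    weights = exp / exp.sum(axis=0, keepdims=True)   # softmax over flows
    return (weights[:, :, None, None] * stacked).sum(axis=0)


if __name__ == "__main__":
    features = np.random.default_rng(0).normal(size=(8, 32, 32))
    fused = chained_context_aggregation(features)
    print(fused.shape)  # (8, 32, 32)
```

The output keeps the input's shape, so a module of this kind could sit between a backbone and a decoder. A real module would replace the pooling-only flows with learned layers.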

Results from the Paper

Ranked #23 on Semantic Segmentation on SUN-RGBD (using extra training data)

Datasets

MS COCO

Cityscapes

SUN RGB-D

PASCAL Context

CamVid