Can an Embodied Agent Find Your "Cat-shaped Mug"? LLM-Guided Exploration for Zero-Shot Object Navigation

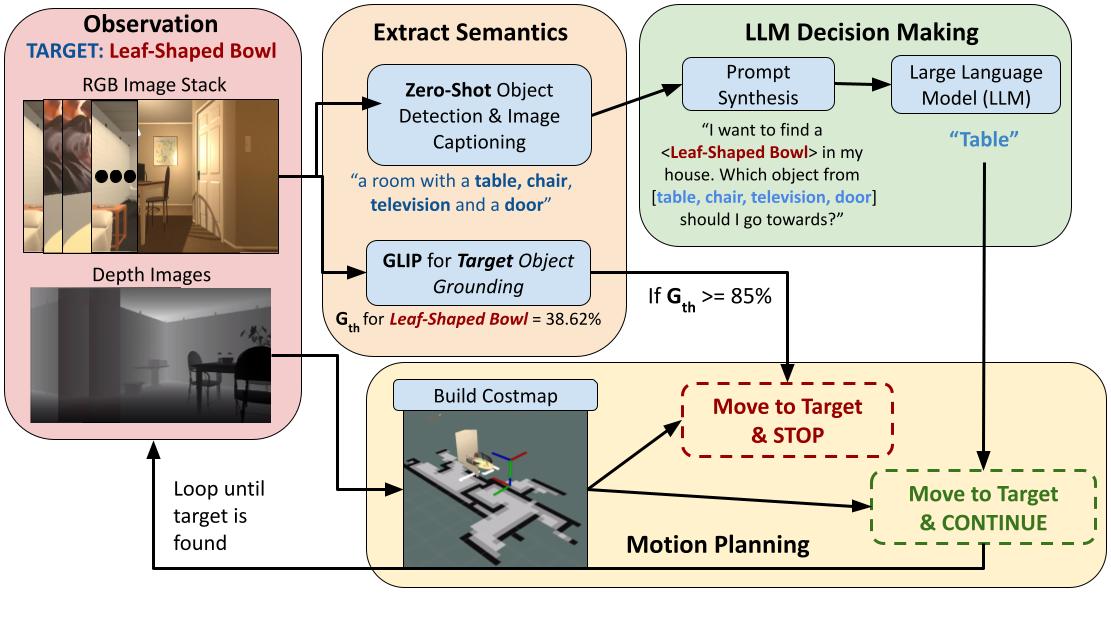

We present LGX (Language-guided Exploration), a novel algorithm for Language-Driven Zero-Shot Object Goal Navigation (L-ZSON), where an embodied agent navigates to a uniquely described target object in a previously unseen environment. Our approach makes use of Large Language Models (LLMs) for this task by leveraging the LLM's commonsense reasoning capabilities for making sequential navigational decisions. Simultaneously, we perform generalized target object detection using a pre-trained Vision-Language grounding model. We achieve state-of-the-art zero-shot object navigation results on RoboTHOR with a success rate (SR) improvement of over 27% over the current baseline of the OWL-ViT CLIP on Wheels (OWL CoW). Furthermore, we study the usage of LLMs for robot navigation and present an analysis of various prompting strategies affecting the model output. Finally, we showcase the benefits of our approach via \textit{real-world} experiments that indicate the superior performance of LGX in detecting and navigating to visually unique objects.

PDF Abstract