Formulating Event-based Image Reconstruction as a Linear Inverse Problem with Deep Regularization using Optical Flow

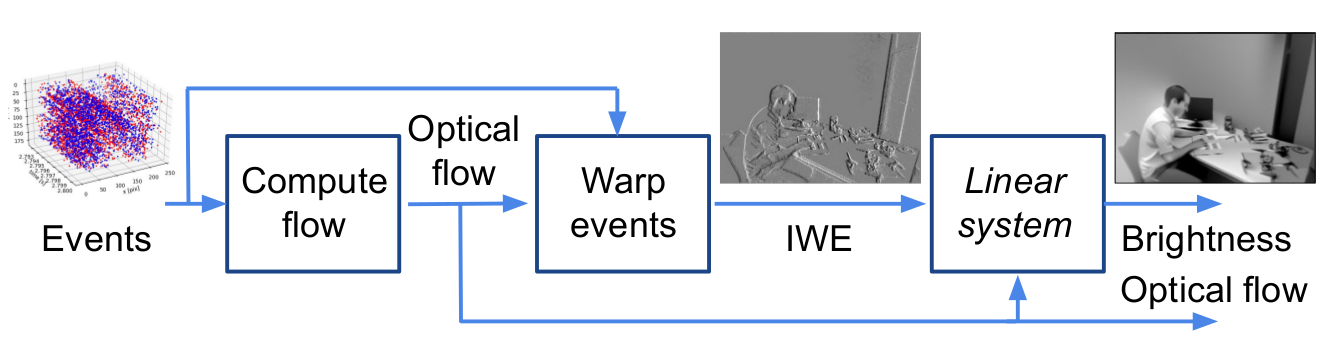

Event cameras are novel bio-inspired sensors that measure per-pixel brightness differences asynchronously. Recovering brightness from events is appealing because the reconstructed images inherit the high dynamic range (HDR) and high-speed properties of events; hence they can be used in many robotic vision applications and to generate slow-motion HDR videos. However, state-of-the-art methods tackle this problem by training an event-to-image Recurrent Neural Network (RNN), which lacks explainability and is difficult to tune. In this work we show, for the first time, how tackling the combined problem of motion and brightness estimation leads us to formulate event-based image reconstruction as a linear inverse problem that can be solved without training an image reconstruction RNN. Instead, classical and learning-based regularizers are used to solve the problem and remove artifacts from the reconstructed images. The experiments show that the proposed approach generates images with visual quality on par with state-of-the-art methods despite only using data from a short time interval. State-of-the-art results are achieved using an image denoising Convolutional Neural Network (CNN) as the regularization function. The proposed regularized formulation and solvers have a unifying character because they can also be applied to reconstruct brightness from the second derivative. Additionally, the formulation is attractive because it can be naturally combined with super-resolution, motion segmentation and color demosaicing. Code is available at https://github.com/tub-rip/event_based_image_rec_inverse_problem
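To make the phrase "linear inverse problem" concrete, the following is a minimal sketch and not the authors' implementation. It assumes a linearized event generation model under brightness constancy, in which the brightness increment over a short interval is approximately `-(grad L . v) * dt`, so that for a known optical flow `v` the increment is linear in the unknown image `L`. The function names, the dense forward-difference operators, the constant flow, and the Tikhonov penalty are all illustrative assumptions; the Tikhonov term is only a simple stand-in for the classical and CNN-based regularizers mentioned above.

```python
import numpy as np

def forward_difference_ops(h, w):
    """Dense forward-difference matrices (Dx, Dy) acting on a flattened h*w image."""
    n = h * w
    Dx = np.zeros((n, n))
    Dy = np.zeros((n, n))
    for r in range(h):
        for c in range(w):
            i = r * w + c
            if c + 1 < w:   # horizontal derivative, set to zero at the right border
                Dx[i, i] = -1.0
                Dx[i, i + 1] = 1.0
            if r + 1 < h:   # vertical derivative, set to zero at the bottom border
                Dy[i, i] = -1.0
                Dy[i, i + w] = 1.0
    return Dx, Dy

def build_operator(flow_x, flow_y, dt):
    """Assumed model: A @ L.ravel() approximates the increment -(grad L . v) * dt."""
    h, w = flow_x.shape
    Dx, Dy = forward_difference_ops(h, w)
    return -dt * (np.diag(flow_x.ravel()) @ Dx + np.diag(flow_y.ravel()) @ Dy)

def reconstruct(A, b, lam):
    """Tikhonov-regularized least squares: argmin ||A L - b||^2 + lam * ||L||^2."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ b)

# Toy usage: synthesize increments from a known image, then invert them.
h, w = 8, 8
rng = np.random.default_rng(0)
L_true = rng.random((h, w))                 # hypothetical ground-truth brightness
flow_x = np.full((h, w), 2.0)               # hypothetical constant optical flow
flow_y = np.full((h, w), 1.0)
A = build_operator(flow_x, flow_y, dt=1.0)
b = A @ L_true.ravel()                      # stands in for the event increment image
lam = 1e-3
L_hat = reconstruct(A, b, lam).reshape(h, w)
```

Because the operator only involves spatial derivatives of `L`, the data term alone cannot determine the image (a constant offset, for instance, lies in its null space), which is one reason a regularizer is needed. Swapping the Tikhonov penalty for a different prior changes the solver but not the linear data term.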


N-Caltech 101


Event-Camera Dataset
