MedLSAM: Localize and Segment Anything Model for 3D CT Images

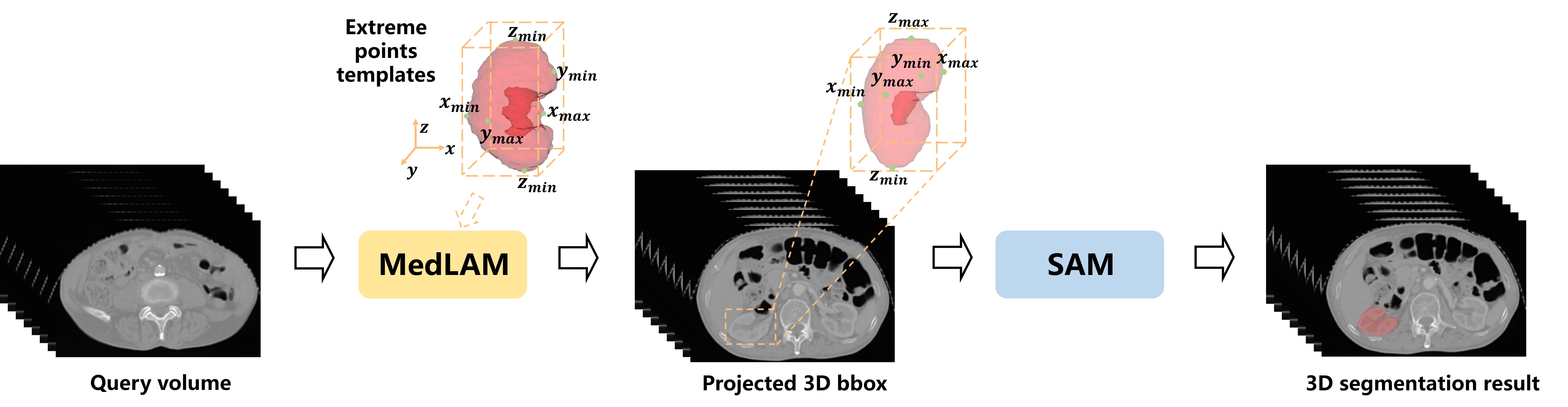

The Segment Anything Model (SAM) has recently emerged as a groundbreaking model in the field of image segmentation. Nevertheless, both the original SAM and its medical adaptations require slice-by-slice annotations, so the annotation workload grows directly with the size of the dataset. We propose MedLSAM to address this issue. It keeps the annotation workload constant regardless of dataset size and thereby simplifies the annotation process. MedLSAM introduces a 3D localization foundation model capable of localizing any target anatomical part within the body. To achieve this, we develop a Localize Anything Model for 3D Medical Images (MedLAM), trained with two self-supervision tasks, unified anatomical mapping (UAM) and multi-scale similarity (MSS), on a comprehensive dataset of 14,012 CT scans. We then establish a methodology for accurate segmentation by integrating MedLAM with SAM. Once several extreme points along three directions have been annotated on a few template scans, our model can automatically identify the target anatomical region in every scan scheduled for annotation. From this region, our framework generates a 2D bounding box for every slice of the image, and SAM then uses these boxes as prompts to carry out segmentation. We conduct comprehensive experiments on two 3D datasets covering 38 distinct organs. Our findings are twofold: 1) MedLAM can directly localize any anatomical structure using just a few template scans, yet its performance surpasses that of fully supervised models; 2) MedLSAM closely matches the performance of SAM and its specialized medical adaptations given manual prompts, while requiring only minimal extreme-point annotation across the entire dataset. Furthermore, MedLAM has the potential to be seamlessly integrated with future 3D SAM models, paving the way for enhanced performance.
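
The prompt-generation step described above goes from extreme points to a 3D box and then to one 2D box per slice. It can be sketched as follows. This is a minimal sketch under stated assumptions, not the MedLSAM implementation. The function names, the (z, y, x) coordinate order, and the choice to reuse the same in-plane box on every slice are all hypothetical. Localizing the extreme points in each new scan, which is MedLAM's job, is not shown. The 2D boxes use XYXY order (x_min, y_min, x_max, y_max), the order SAM's predictor expects for box prompts.

```python
import math
from dataclasses import dataclass
from typing import Dict, Sequence, Tuple

# Hypothetical convention: voxel coordinates in (z, y, x) order, z = axial slice index.
Point3D = Tuple[float, float, float]
# A 2D box prompt in XYXY order: (x_min, y_min, x_max, y_max).
Box2D = Tuple[int, int, int, int]


@dataclass(frozen=True)
class Box3D:
    z_min: int
    z_max: int
    y_min: int
    y_max: int
    x_min: int
    x_max: int


def box_from_extreme_points(points: Sequence[Point3D]) -> Box3D:
    """Tightest axis-aligned 3D box enclosing the given extreme points.

    Assumption: the points are the target's extreme points (min/max along each
    axis) already located in the scan being annotated, e.g. by a localization
    model. Bounds are widened to whole voxels (floor for min, ceil for max).
    """
    if not points:
        raise ValueError("at least one extreme point is required")
    zs, ys, xs = zip(*points)
    return Box3D(
        z_min=math.floor(min(zs)), z_max=math.ceil(max(zs)),
        y_min=math.floor(min(ys)), y_max=math.ceil(max(ys)),
        x_min=math.floor(min(xs)), x_max=math.ceil(max(xs)),
    )


def per_slice_box_prompts(box: Box3D, num_slices: int) -> Dict[int, Box2D]:
    """Map every axial slice covered by `box` to a 2D XYXY box prompt.

    This simplest variant reuses the 3D box's in-plane extent on each slice;
    slice indices outside [0, num_slices) are skipped.
    """
    first = max(box.z_min, 0)
    last = min(box.z_max, num_slices - 1)
    prompt = (box.x_min, box.y_min, box.x_max, box.y_max)
    return {z: prompt for z in range(first, last + 1)}


if __name__ == "__main__":
    # Six made-up extreme points (z, y, x) for one organ in one scan.
    points = [(10, 50, 60), (20, 55, 65), (15, 40, 70),
              (15, 60, 62), (12, 52, 55), (18, 48, 80)]
    box = box_from_extreme_points(points)
    prompts = per_slice_box_prompts(box, num_slices=100)
    print(len(prompts), prompts[15])  # 11 (55, 40, 80, 60)
```

Each per-slice box would then be passed to SAM as that slice's box prompt. Reusing one in-plane box is the loosest choice. A tighter variant could shrink the box slice by slice, at the cost of needing more localization output per slice.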

WORD