Simple yet efficient real-time pose-based action recognition

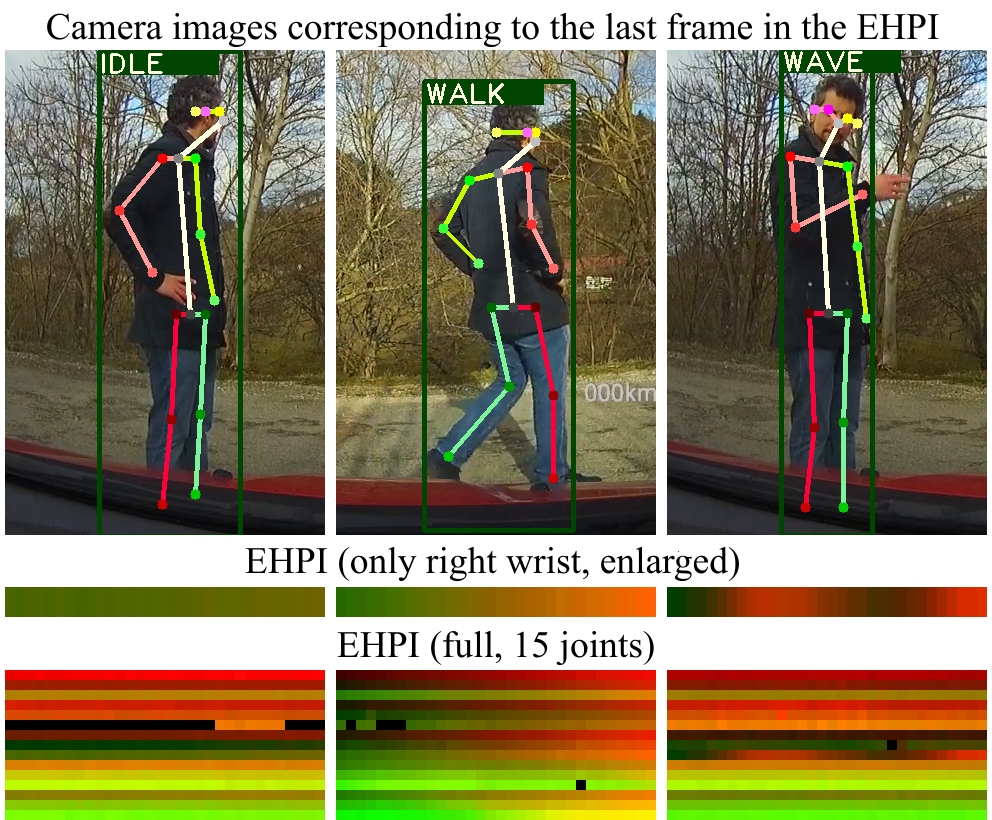

Recognizing human actions is a core challenge for autonomous systems that directly share their operating space with humans. Such systems must be able to recognize and assess human actions in real time, and training the corresponding data-driven algorithms requires a significant amount of annotated data. We demonstrate a pipeline that detects humans, estimates their pose, tracks them over time, and recognizes their actions in real time using standard monocular camera sensors. For action recognition, we encode the human pose into a new data format called Encoded Human Pose Image (EHPI), which can then be classified using standard methods from the computer vision community. With this simple procedure we achieve performance competitive with the state of the art in pose-based action detection while maintaining real-time performance. In addition, we present a use case from autonomous driving that demonstrates how such a system can be trained to recognize human actions using simulation data.
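To make the encoding idea concrete, the following is a minimal sketch of how a sequence of 2D poses could be turned into an image-like array. The abstract does not specify the layout, so everything here is an assumption for illustration: the function name `encode_pose_sequence`, the choice of one row per joint and one column per frame, storing normalized x and y in the first two channels, and leaving the third channel at zero.

```python
import numpy as np


def encode_pose_sequence(keypoints: np.ndarray) -> np.ndarray:
    """Encode 2D joint positions over time as an image-like array.

    keypoints: array of shape (frames, joints, 2) holding (x, y) pixel
               coordinates for each joint in each frame.
    returns:   float32 array of shape (joints, frames, 3) with values in
               [0, 1]. Channel 0 holds normalized x, channel 1 holds
               normalized y, channel 2 is zero (assumed layout).
    """
    if keypoints.ndim != 3 or keypoints.shape[2] != 2:
        raise ValueError("expected keypoints of shape (frames, joints, 2)")

    pts = keypoints.astype(np.float32)

    # Normalize x and y independently over the whole sequence so that the
    # encoding does not depend on where the person is in the frame.
    mins = pts.reshape(-1, 2).min(axis=0)
    maxs = pts.reshape(-1, 2).max(axis=0)
    span = np.maximum(maxs - mins, 1e-6)  # guard against division by zero
    norm = (pts - mins) / span            # shape: (frames, joints, 2)

    frames, joints, _ = pts.shape
    image = np.zeros((joints, frames, 3), dtype=np.float32)
    image[..., 0] = norm[..., 0].T  # x coordinates: rows = joints, cols = frames
    image[..., 1] = norm[..., 1].T  # y coordinates
    return image
```

An array of this form can be passed to any standard image classifier, which is what makes the approach attractive: the temporal dimension becomes a spatial axis, so an ordinary convolutional network sees motion as a texture pattern.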

MS COCO

JHMDB