When Unseen Domain Generalization is Unnecessary? Rethinking Data Augmentation

Recent advances in deep learning for medical image segmentation demonstrate expert-level accuracy. However, in clinically realistic environments, such methods perform poorly because of differences between image domains, including different imaging protocols, device vendors and patient populations. Here we consider the problem of domain generalization, in which a model is trained once and its performance generalizes to unseen domains. Intuitively, within a specific medical imaging modality, the domain differences are smaller than the domain variability of natural images. We rethink data augmentation for medical 3D images and propose a deep stacked transformations (DST) approach for domain generalization. Specifically, a series of n stacked transformations is applied to each image in each mini-batch during network training to account for the contribution of domain-specific shifts in medical images. We comprehensively evaluate our method on three tasks: segmentation of the whole prostate from 3D MRI, the left atrium from 3D MRI, and the left ventricle from 3D ultrasound. We demonstrate that (i) when trained on a small source dataset, DST models degrade on unseen datasets by only 11% on average (Dice score change), compared with conventional augmentation (degrading 39%) and a CycleGAN-based domain adaptation method (degrading 25%); (ii) when evaluated on the same domain, DST is also better, albeit only marginally; and (iii) when trained on large-sized data, DST on unseen domains reaches the performance of state-of-the-art fully supervised models. These findings establish a strong benchmark for the study of domain generalization in medical imaging, and can be generalized to the design of robust deep segmentation models for clinical deployment.
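The idea of applying a stack of transformations to each image in each mini-batch can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the three intensity transforms, their parameter ranges, the per-transform probability `p`, and all function names are assumptions chosen for illustration. Spatial transforms are left out because they would also have to be applied identically to the segmentation labels.

```python
import numpy as np

# Hypothetical intensity transforms; ranges are illustrative assumptions,
# not values taken from the paper.
def shift_brightness(vol, rng):
    # Add a random constant offset to every voxel.
    return vol + rng.uniform(-0.1, 0.1)

def gamma_contrast(vol, rng):
    # Rescale to [0, 1], apply a random gamma, then restore the original range.
    lo, hi = vol.min(), vol.max()
    if hi == lo:
        return vol
    norm = (vol - lo) / (hi - lo)
    return norm ** rng.uniform(0.5, 2.0) * (hi - lo) + lo

def add_gaussian_noise(vol, rng):
    # Add zero-mean Gaussian noise voxel by voxel.
    return vol + rng.normal(0.0, 0.05, size=vol.shape)

STACK = [shift_brightness, gamma_contrast, add_gaussian_noise]

def stacked_transform(vol, rng, stack=STACK, p=0.5):
    """Apply each transform in order, each with independent probability p."""
    out = vol.astype(np.float32)
    for transform in stack:
        if rng.random() < p:
            out = transform(out, rng)
    return out.astype(np.float32)

def augment_batch(batch, rng, stack=STACK, p=0.5):
    """Augment every 3D volume in a mini-batch of shape (N, D, H, W)."""
    return np.stack([stacked_transform(v, rng, stack, p) for v in batch])
```

In a training loop, `augment_batch` would be called on each mini-batch with a shared `np.random.default_rng()` generator, so that every image sees a different random combination of transforms at every iteration.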


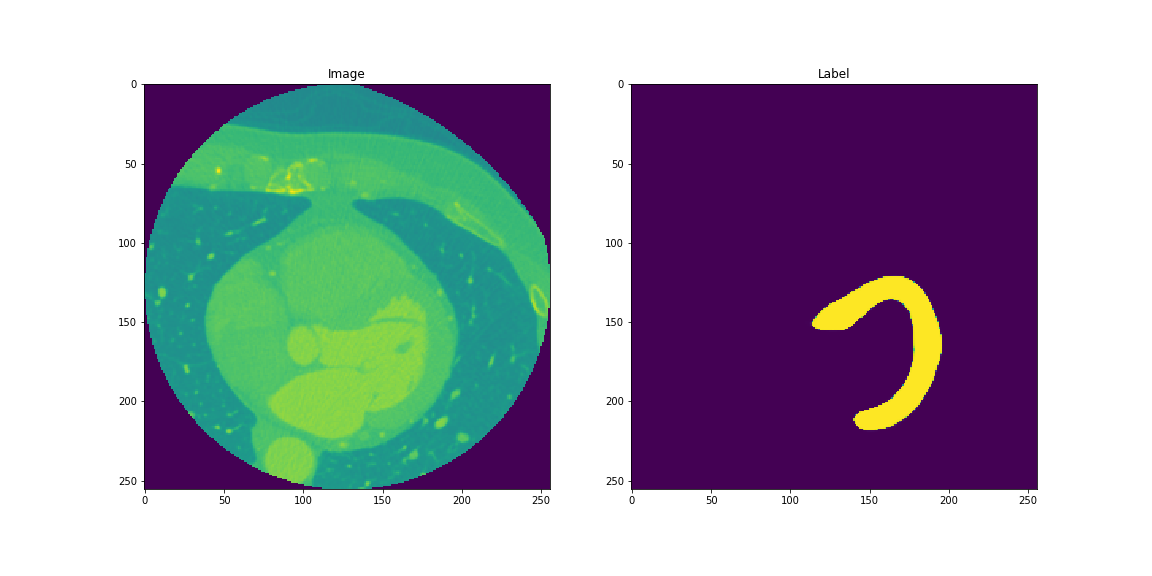

PROMISE12