Generative Adversarial Networks

Adaptive Content Generating and Preserving Network

Introduced by Yang et al. in Towards Photo-Realistic Virtual Try-On by Adaptively Generating-Preserving Image Content.

ACGPN, or Adaptive Content Generating and Preserving Network, is a generative adversarial network for virtual try-on clothing applications.

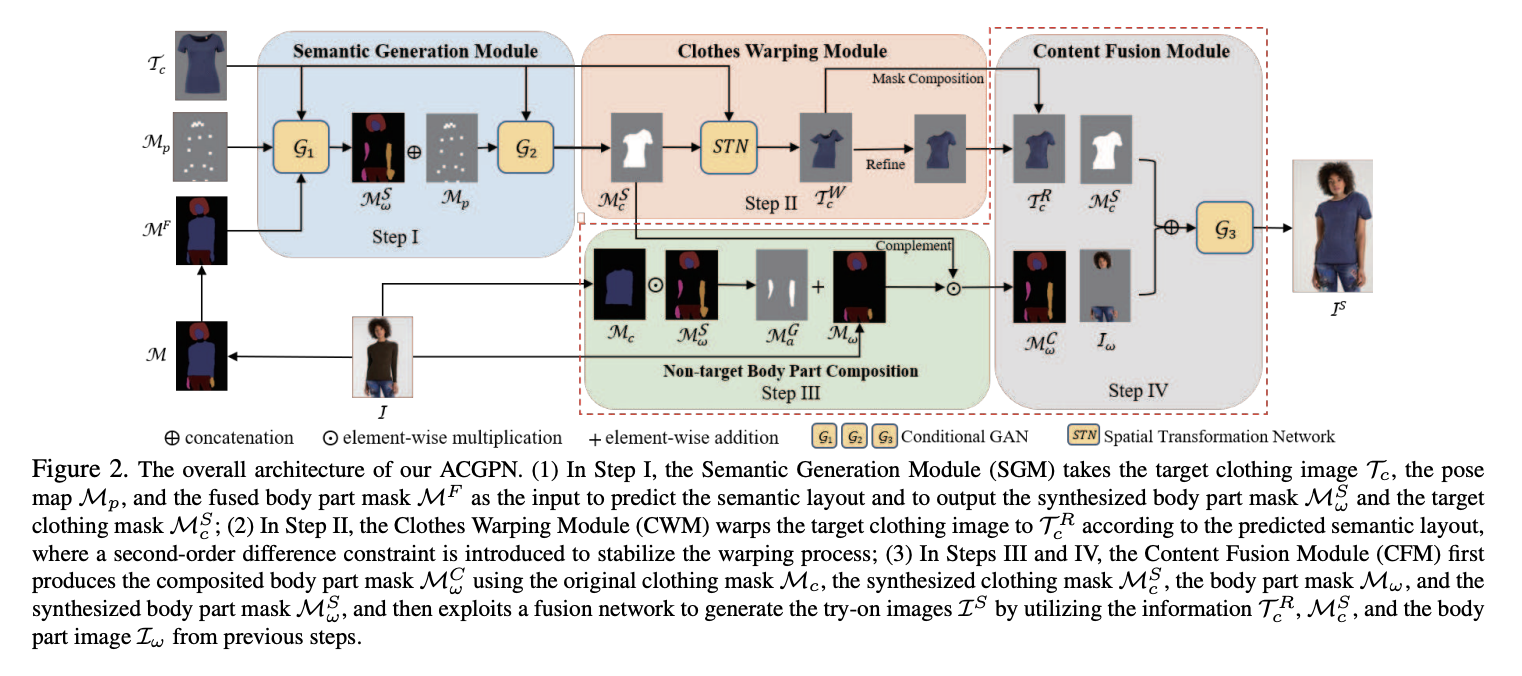

In Step I, the Semantic Generation Module (SGM) takes the target clothing image $\mathcal{T}_{c}$, the pose map $\mathcal{M}_{p}$, and the fused body part mask $\mathcal{M}^{F}$ as input, predicts the semantic layout, and outputs the synthesized body part mask $\mathcal{M}^{S}_{\omega}$ and the target clothing mask $\mathcal{M}^{S}_{c}$.
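The last part of Step I, turning a predicted semantic layout into the two binary masks, can be sketched in plain Python. This is a minimal illustration under stated assumptions: the layout is taken to be per-pixel class scores, and the label IDs `CLOTHING_LABEL` and `BODY_PART_LABELS`, as well as the helpers `argmax_layout` and `split_layout`, are hypothetical and not taken from the ACGPN code.

```python
from typing import List, Set, Tuple

Grid = List[List[int]]

# Hypothetical label IDs for illustration only; not ACGPN's actual parsing labels.
CLOTHING_LABEL = 4
BODY_PART_LABELS = {1, 2, 3}


def argmax_layout(scores: List[List[List[float]]]) -> Grid:
    """Pick the highest-scoring class at each pixel (scores[y][x] is a list of class scores)."""
    return [[max(range(len(px)), key=px.__getitem__) for px in row] for row in scores]


def split_layout(layout: Grid,
                 clothing_label: int = CLOTHING_LABEL,
                 body_labels: Set[int] = BODY_PART_LABELS) -> Tuple[Grid, Grid]:
    """Convert a per-pixel label map into a binary body part mask and a binary clothing mask."""
    body_mask = [[1 if v in body_labels else 0 for v in row] for row in layout]
    cloth_mask = [[1 if v == clothing_label else 0 for v in row] for row in layout]
    return body_mask, cloth_mask


layout = [[0, 1, 4],
          [2, 4, 4],
          [0, 3, 0]]
body_mask, cloth_mask = split_layout(layout)
print(body_mask)   # [[0, 1, 0], [1, 0, 0], [0, 1, 0]]
print(cloth_mask)  # [[0, 0, 1], [0, 1, 1], [0, 0, 0]]
```

A real implementation would work on batched tensors; nested lists simply keep the sketch dependency-free.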

In Step II, the Clothes Warping Module (CWM) warps the target clothing image into $\mathcal{T}^{R}_{c}$ according to the predicted semantic layout, using a second-order difference constraint to stabilize the warping process.
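The idea behind a second-order difference constraint can be illustrated on a grid of warping control points: the discrete second difference $p_{i-1} - 2p_i + p_{i+1}$ vanishes when neighbouring points are evenly spaced along a straight line, so penalizing its squared norm discourages sharp local bending. The sketch below is a generic version of this regularizer, assuming a rectangular grid of 2D control points; the function names are hypothetical, and ACGPN's exact loss formulation may differ.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def _sq_second_diff(a: Point, b: Point, c: Point) -> float:
    """Squared norm of the discrete second difference a - 2b + c."""
    dx = a[0] - 2 * b[0] + c[0]
    dy = a[1] - 2 * b[1] + c[1]
    return dx * dx + dy * dy


def second_order_penalty(grid: List[List[Point]]) -> float:
    """Sum squared second differences along every row and every column of control points."""
    rows, cols = len(grid), len(grid[0])
    total = 0.0
    for r in range(rows):                 # horizontal neighbours
        for c in range(1, cols - 1):
            total += _sq_second_diff(grid[r][c - 1], grid[r][c], grid[r][c + 1])
    for c in range(cols):                 # vertical neighbours
        for r in range(1, rows - 1):
            total += _sq_second_diff(grid[r - 1][c], grid[r][c], grid[r + 1][c])
    return total


regular = [[(float(c), float(r)) for c in range(3)] for r in range(3)]
sheared = [[(c + 0.5 * r, float(r)) for c in range(3)] for r in range(3)]
bent = [row[:] for row in regular]
bent[1][1] = (1.5, 1.0)                   # push the centre point sideways

print(second_order_penalty(regular))  # 0.0
print(second_order_penalty(sheared))  # 0.0
print(second_order_penalty(bent))     # 2.0
```

Because second differences of a linear function are zero, uniform translation, scaling, and shear of the grid cost nothing; only non-affine distortions such as the bent centre point are penalized. In training, a term like this would be added, with some weight, to the warping loss.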

In Steps III and IV, the Content Fusion Module (CFM) first produces the composited body part mask $\mathcal{M}^{C}_{\omega}$ from the original clothing mask $\mathcal{M}_{c}$, the synthesized clothing mask $\mathcal{M}^{S}_{c}$, the body part mask $\mathcal{M}_{\omega}$, and the synthesized body part mask $\mathcal{M}^{S}_{\omega}$. It then uses a fusion network to generate the try-on image $\mathcal{I}^{S}$ from the outputs of the previous steps: the warped clothing image $\mathcal{T}^{R}_{c}$, the synthesized clothing mask $\mathcal{M}^{S}_{c}$, and the body part image $I_{\omega}$.
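The mask composition in Step III can be sketched as a per-pixel selection. This is a hypothetical rule chosen for illustration, not the formula from the ACGPN paper: pixels covered by either the original clothing mask $\mathcal{M}_{c}$ or the synthesized clothing mask $\mathcal{M}^{S}_{c}$ are likely to change, so they take the synthesized body part mask $\mathcal{M}^{S}_{\omega}$, while all other pixels keep the original body part mask $\mathcal{M}_{\omega}$. The function name `compose_body_mask` is likewise hypothetical.

```python
from typing import List

Mask = List[List[int]]


def compose_body_mask(m_c: Mask, m_s_c: Mask, m_w: Mask, m_s_w: Mask) -> Mask:
    """Hypothetical composition: regenerate where clothing changes, preserve elsewhere.

    m_c:   original clothing mask (M_c)
    m_s_c: synthesized clothing mask (M^S_c)
    m_w:   original body part mask (M_omega)
    m_s_w: synthesized body part mask (M^S_omega)
    """
    composed = []
    for row_c, row_sc, row_w, row_sw in zip(m_c, m_s_c, m_w, m_s_w):
        composed.append([
            sw if (c or sc) else w    # touched by old or new clothing: use synthesized value
            for c, sc, w, sw in zip(row_c, row_sc, row_w, row_sw)
        ])
    return composed


m_c = [[1, 1, 0], [0, 0, 0]]
m_s_c = [[0, 1, 1], [0, 0, 0]]
m_w = [[0, 0, 0], [1, 1, 0]]
m_s_w = [[1, 0, 0], [0, 0, 1]]
print(compose_body_mask(m_c, m_s_c, m_w, m_s_w))  # [[1, 0, 0], [1, 1, 0]]
```

The sketch only illustrates the generate-versus-preserve split suggested by the method's name: regions that the clothing change cannot affect are copied from the input instead of being regenerated.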

Source: Towards Photo-Realistic Virtual Try-On by Adaptively Generating-Preserving Image Content

Tasks

| Task | Papers | Share |
|---|---|---|
| Semantic Segmentation | 2 | 25.00% |
| Virtual Try-on | 2 | 25.00% |
| Anomaly Detection | 1 | 12.50% |
| Cyber Attack Detection | 1 | 12.50% |
| Outlier Detection | 1 | 12.50% |
| Reinforcement Learning (RL) | 1 | 12.50% |
