H-Transformer-1D: Fast One-Dimensional Hierarchical Attention for Sequences

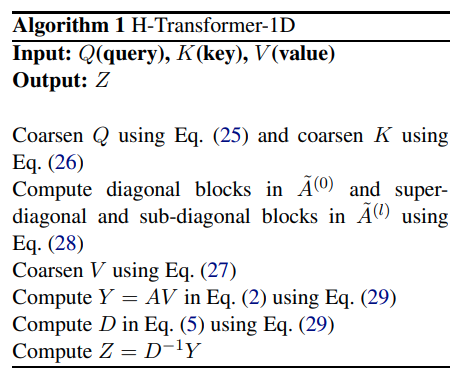

We describe an efficient hierarchical method to compute attention in the Transformer architecture. The proposed attention mechanism exploits a matrix structure similar to the Hierarchical Matrix (H-Matrix) developed by the numerical analysis community, and has linear run time and memory complexity. We perform extensive experiments to show that the inductive bias embodied by our hierarchical attention is effective in capturing the hierarchical structure in the sequences typical of natural language and vision tasks. Our method outperforms alternative sub-quadratic proposals by more than 6 points on average on the Long Range Arena benchmark. It also sets a new state-of-the-art (SOTA) test perplexity on the One Billion Word dataset with 5x fewer model parameters than the previous best Transformer-based models.
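The abstract's core idea can be illustrated with a minimal two-level sketch: exact attention between nearby tokens, and cheaper, coarsened attention between distant ones. This is not the authors' algorithm. The function names (`two_level_attention`, `full_attention`) are hypothetical, and so are several design choices made here for illustration. The "near field" is taken to be a token's own block plus the two neighbouring blocks. Only keys and values are coarsened, by block averaging, and each coarse token is weighted by the block size. The paper describes a multilevel hierarchy, whereas this two-level version costs roughly O(n·(b + n/b)) for block size b, which is not linear.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def mean(vectors: List[Vector]) -> Vector:
    """Component-wise average of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def full_attention(q: List[Vector], k: List[Vector], v: List[Vector]) -> List[Vector]:
    """Standard softmax attention, O(n^2); used here only as a reference."""
    out = []
    for qi in q:
        scores = [dot(qi, kj) for kj in k]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        out.append([sum(w * vj[d] for w, vj in zip(weights, v)) / z
                    for d in range(len(v[0]))])
    return out


def two_level_attention(q: List[Vector], k: List[Vector], v: List[Vector],
                        block: int) -> List[Vector]:
    """Hypothetical two-level simplification of hierarchical attention.

    Assumptions (not from the paper): near field = own block and its two
    neighbours; far blocks are replaced by one averaged key/value token
    weighted by `block`; queries are never coarsened.
    """
    n = len(q)
    if n % block != 0:
        raise ValueError("sequence length must be a multiple of block")
    nb = n // block
    # Coarse level: one averaged key and one averaged value per block.
    k_coarse = [mean(k[b * block:(b + 1) * block]) for b in range(nb)]
    v_coarse = [mean(v[b * block:(b + 1) * block]) for b in range(nb)]

    out = []
    for i, qi in enumerate(q):
        bi = i // block
        scores: List[float] = []
        values: List[Vector] = []
        mult: List[float] = []  # how many original tokens each entry stands for
        for b in range(nb):
            if abs(b - bi) <= 1:
                # Near field: exact attention to every token in the block.
                for j in range(b * block, (b + 1) * block):
                    scores.append(dot(qi, k[j]))
                    values.append(v[j])
                    mult.append(1.0)
            else:
                # Far field: a single coarse token standing in for `block` tokens.
                scores.append(dot(qi, k_coarse[b]))
                values.append(v_coarse[b])
                mult.append(float(block))
        m = max(scores)
        weights = [c * math.exp(s - m) for s, c in zip(scores, mult)]
        z = sum(weights)
        out.append([sum(w * val[d] for w, val in zip(weights, values)) / z
                    for d in range(len(v[0]))])
    return out
```

When the keys are constant within each block, a coarse token reproduces its block's contribution exactly, so `two_level_attention` matches `full_attention`. Otherwise the far-field terms are only an approximation. The paper's multilevel hierarchy extends this idea across more levels to reach the linear complexity stated in the abstract.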

ACL 2021

Results from the Paper

Ranked #1 on Language Modelling on One Billion Word (Validation perplexity metric)
