Continual Multimodal Knowledge Graph Construction

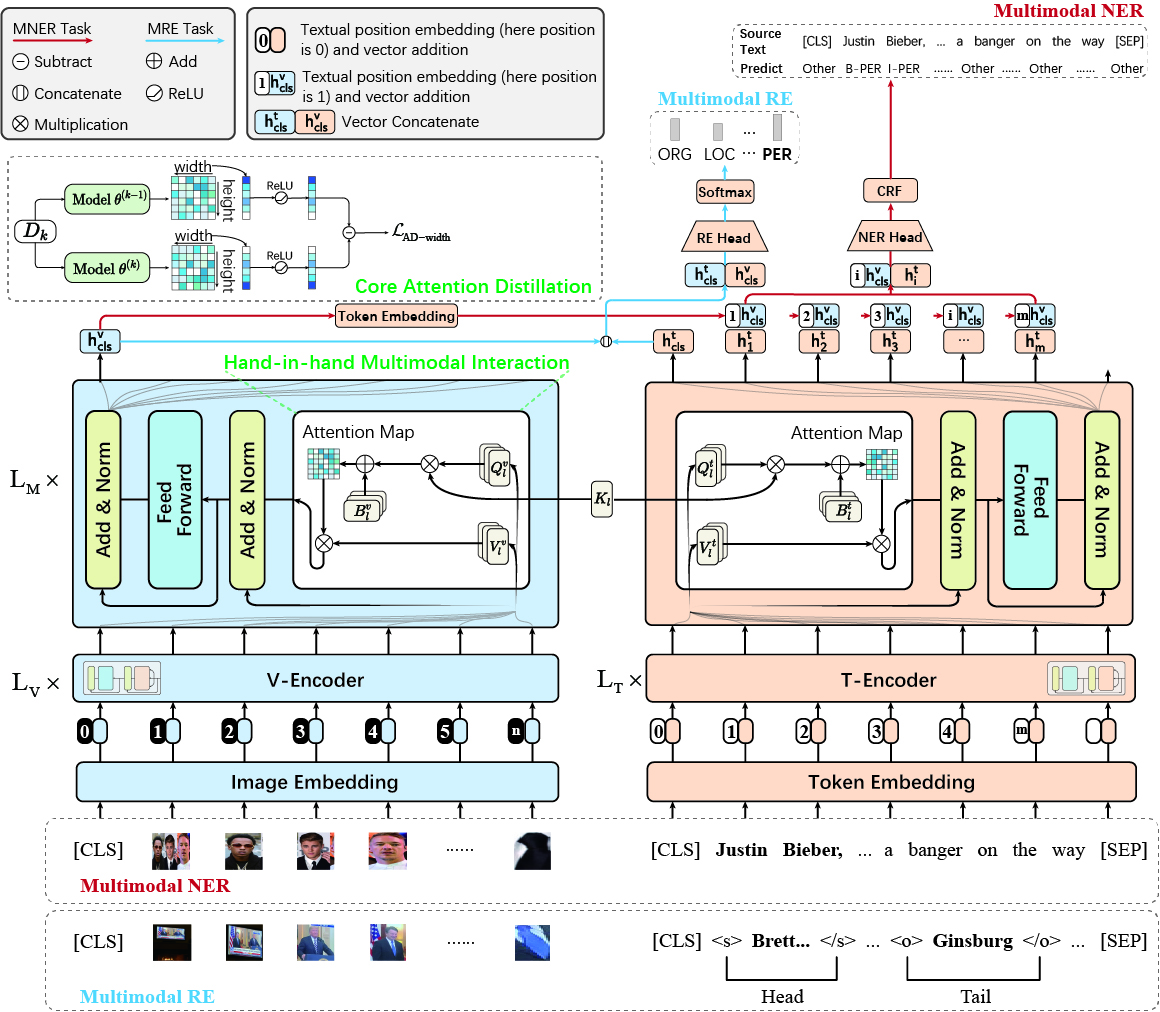

Multimodal Knowledge Graph Construction (MKGC) builds structured representations of entities and relations from multiple modalities, such as text and images. However, existing MKGC models struggle to handle new entities and relations as they appear in dynamic real-world scenarios. The current continual setting for knowledge graph construction focuses mainly on entity and relation extraction from text, overlooking other modalities. Continual MKGC therefore needs to be explored, both to address catastrophic forgetting and to ensure that knowledge previously extracted from different forms of data is retained. This research investigates the problem by developing lifelong MKGC benchmark datasets. We find empirically that several typical MKGC models, when trained on multimodal data, can unexpectedly underperform models that use only text in a continual setting. Based on this finding, we propose a Lifelong MultiModal Consistent Transformer Framework (LMC) for continual MKGC, which leverages the strengths of consistent multimodal optimization in continual learning and achieves a better stability-plasticity trade-off. Our experiments demonstrate that our method outperforms prevailing continual learning techniques and multimodal approaches in dynamic scenarios. Code and datasets can be found at https://github.com/zjunlp/ContinueMKGC.
