EIT: Enhanced Interactive Transformer

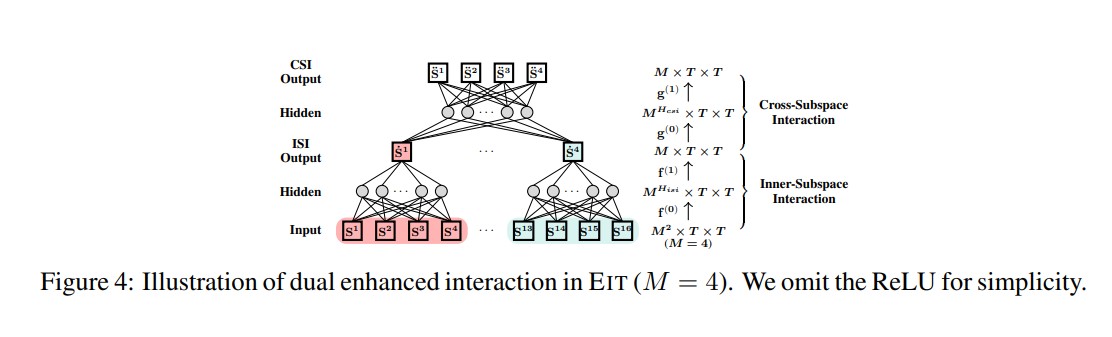

In this paper, we propose the Enhanced Interactive Transformer (EIT), an architecture designed to address head degradation in self-attention. EIT replaces standard multi-head self-attention with Enhanced Multi-Head Attention (EMHA), which relaxes the one-to-one mapping constraint between queries and keys so that each query can attend to multiple keys. To make full use of this many-to-many mapping, we introduce two interaction models, Inner-Subspace Interaction and Cross-Subspace Interaction. Extensive experiments on a wide range of tasks (e.g., machine translation, abstractive summarization, grammar correction, language modelling, and automatic brain disease diagnosis) demonstrate the superiority of EIT at the cost of only a very modest increase in model size.
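
To make the many-to-many idea concrete, the following is a minimal NumPy sketch under stated assumptions, not the EMHA implementation. It assumes the mapping is relaxed at the level of heads, so every query head is scored against every key head, and it collapses the resulting maps back to one per head with a simple learned convex combination. That combination is only a stand-in for the Inner-Subspace and Cross-Subspace Interaction models, which the abstract does not specify. The names `many_to_many_attention` and `mix` are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def many_to_many_attention(q, k, v, mix):
    """Illustrative many-to-many attention (assumed reading, not the paper's code).

    q, k, v: arrays of shape (heads, seq_len, head_dim).
    mix:     array of shape (heads, heads * heads); hypothetical mixing logits
             standing in for the paper's interaction models.
    Returns (output, combined_maps) with shapes
    (heads, seq_len, head_dim) and (heads, seq_len, seq_len).
    """
    m, t, d = q.shape
    # Score query head i against key head j for all pairs: (m, m, t, t).
    scores = np.einsum("itd,jsd->ijts", q, k) / np.sqrt(d)
    # One attention map per (query head, key head) pair: (m * m, t, t).
    maps = softmax(scores, axis=-1).reshape(m * m, t, t)
    # Collapse the m * m maps to m maps with a convex combination per head.
    weights = softmax(mix, axis=-1)
    combined = np.einsum("hc,cts->hts", weights, maps)
    # Apply each combined map to the values of the corresponding head.
    out = np.einsum("hts,hsd->htd", combined, v)
    return out, combined


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    heads, seq_len, head_dim = 4, 5, 8
    q, k, v = (rng.standard_normal((heads, seq_len, head_dim)) for _ in range(3))
    mix = rng.standard_normal((heads, heads * heads))
    out, attn = many_to_many_attention(q, k, v, mix)
    print(out.shape, attn.shape)
```

In this sketch, putting all of the mixing weight for head `h` on the pair `(h, h)` recovers ordinary multi-head attention, which is one way to see the one-to-one mapping as a special case of the relaxed one.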
