EM-driven unsupervised learning for efficient motion segmentation

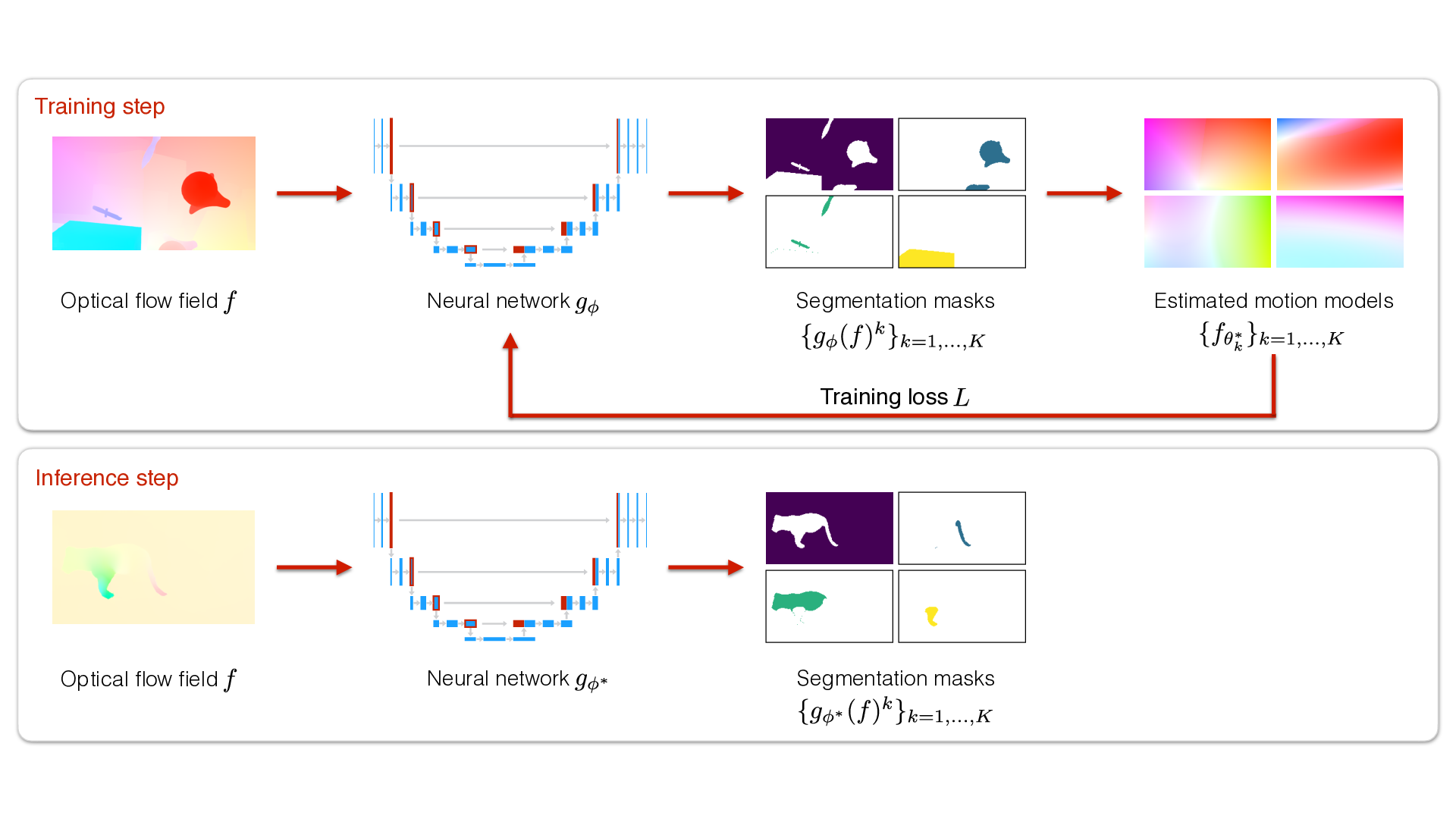

In this paper, we present a CNN-based, fully unsupervised method for motion segmentation from optical flow. We assume that the input optical flow can be represented as a piecewise set of parametric motion models, typically affine or quadratic. The core idea of our work is to leverage the Expectation-Maximization (EM) framework to design, in a well-founded manner, a loss function and a training procedure for our motion segmentation network that require neither ground truth nor manual annotation. In contrast to classical iterative EM, however, once the network is trained it provides a segmentation for any unseen optical flow field in a single inference step, without estimating any motion models. We investigate different loss functions, including robust ones, and propose a novel, efficient data augmentation technique on the optical flow field that is applicable to any network taking optical flow as input. In addition, our method is able by design to segment multiple motions. Our motion segmentation network was tested on four benchmarks (DAVIS2016, SegTrackV2, FBMS59, and MoCA) and performed very well while being fast at test time.
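The idea of scoring a segmentation by how well piecewise parametric motion models explain the flow can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names (`affine_basis`, `fit_affine`, `em_style_loss`), the weighted least-squares fit, the L1 residual, and the coordinate normalization are all assumptions chosen for illustration. Given soft masks for K segments, each segment gets its own affine model fitted to the flow, and the loss is the mask-weighted residual between the flow and those models.

```python
import numpy as np

def affine_basis(h, w):
    # Per-pixel regressors [1, x, y], with coordinates normalized to [-1, 1] (assumed convention).
    ys, xs = np.meshgrid(np.linspace(-1, 1, h), np.linspace(-1, 1, w), indexing="ij")
    return np.stack([np.ones(h * w), xs.ravel(), ys.ravel()], axis=1)  # shape (N, 3)

def fit_affine(flow, mask, basis, eps=1e-6):
    # Weighted least squares: find theta (3, 2) minimizing
    # sum_i mask_i * ||flow_i - basis_i @ theta||^2.
    weights = mask.reshape(-1, 1)
    f = flow.reshape(-1, 2)
    lhs = basis.T @ (weights * basis) + eps * np.eye(3)  # small ridge term for stability
    rhs = basis.T @ (weights * f)
    return np.linalg.solve(lhs, rhs)

def em_style_loss(flow, masks):
    # flow: (H, W, 2) optical flow; masks: (K, H, W) soft segment probabilities.
    h, w, _ = flow.shape
    basis = affine_basis(h, w)
    f = flow.reshape(-1, 2)
    total = 0.0
    for mask in masks:
        theta = fit_affine(flow, mask, basis)              # per-segment affine model
        residual = np.abs(f - basis @ theta).sum(axis=1)   # L1 residual (an assumed robust choice)
        total += (mask.ravel() * residual).sum()
    return total / (h * w)
```

In a training setting, the masks would come from the network and the loss would be differentiated with respect to them; at test time only the network's forward pass is needed, which matches the single-inference-step claim in the abstract. On a synthetic flow made of two translating halves, masks aligned with the halves should give a loss near zero, while uninformative uniform masks should give a larger one.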

DAVIS

DAVIS 2016

FBMS

SegTrack-v2

FBMS-59
