Introducing Self-Attention to Target Attentive Graph Neural Networks

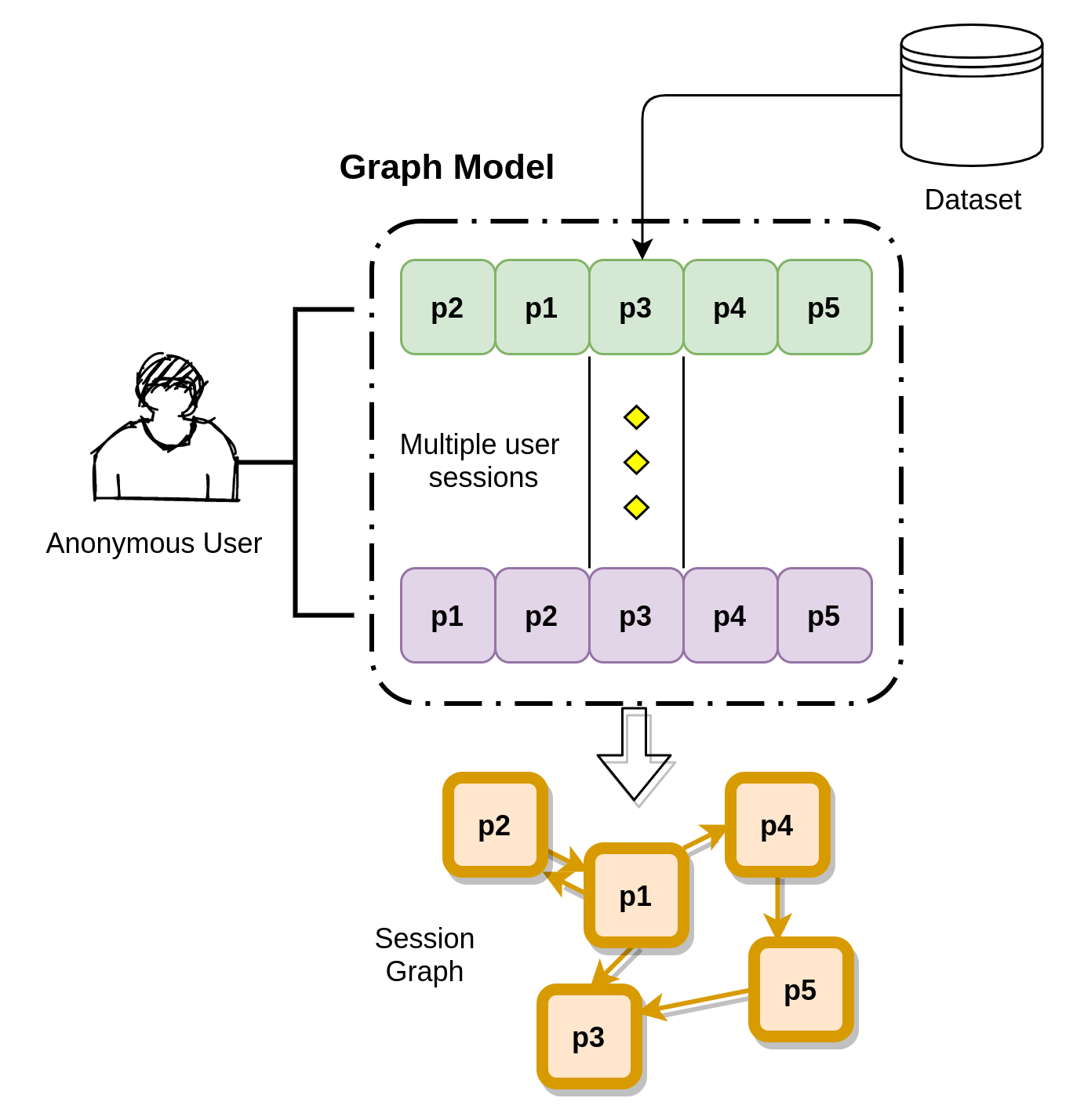

Session-based recommendation systems suggest relevant items to users by modeling user behavior and preferences from short-term anonymous sessions. Existing methods leverage Graph Neural Networks (GNNs) that propagate and aggregate information from neighboring nodes, i.e., local message passing. Such graph-based architectures have representational limits: a single sub-graph is prone to overfitting the sequential dependencies within one session instead of accounting for complex transitions between items across different sessions. We propose a new technique that combines a Transformer with a target attentive GNN. This allows richer representations to be learnt, which translates to empirical performance gains over a vanilla target attentive GNN. Our experimental results and ablation study show that the proposed method is competitive with existing methods on real-world benchmark datasets and improves on purely graph-based models. Code is available at https://github.com/The-Learning-Machines/SBR
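To make the combination concrete, the following is a minimal sketch, not the implementation from the repository above. It assumes one round of mean-aggregation message passing over the session graph, self-attention with identity query/key/value projections, and a dot-product target attention readout. The ordering of these stages, the toy embeddings, and all function names (`message_passing`, `self_attention`, `target_score`) are hypothetical and chosen only for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def weighted_sum(weights, vectors):
    dim = len(vectors[0])
    return [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(dim)]


def message_passing(session, emb):
    """Local step (assumed form): average each item with its predecessors."""
    nodes = list(dict.fromkeys(session))  # unique items, click order preserved
    preds = {n: [] for n in nodes}
    for src, dst in zip(session, session[1:]):
        if src != dst and src not in preds[dst]:
            preds[dst].append(src)
    states = []
    for n in nodes:
        group = [emb[n]] + [emb[p] for p in preds[n]]
        states.append(weighted_sum([1.0 / len(group)] * len(group), group))
    return nodes, states


def self_attention(states):
    """Global step: scaled dot-product attention, identity projections (assumption)."""
    d = len(states[0])
    out = []
    for query in states:
        weights = softmax([dot(query, key) / math.sqrt(d) for key in states])
        out.append(weighted_sum(weights, states))
    return out


def target_score(states, target):
    """Target attention: weight node states by relevance to the candidate item."""
    weights = softmax([dot(h, target) for h in states])
    session_rep = weighted_sum(weights, states)
    return dot(session_rep, target)


# Toy data (hypothetical): three items with 2-d embeddings, one click session.
emb = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
session = ["a", "b", "a", "c"]

nodes, local_states = message_passing(session, emb)
global_states = self_attention(local_states)
scores = {item: target_score(global_states, vec) for item, vec in emb.items()}
```

In this sketch the message-passing step only sees direct predecessors within the session graph, while the self-attention step lets every node attend to every other node, which is the intuition behind pairing the two. A trained model would use learned projections and a gated update rather than the fixed averages assumed here.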
