Kformer: Knowledge Injection in Transformer Feed-Forward Layers

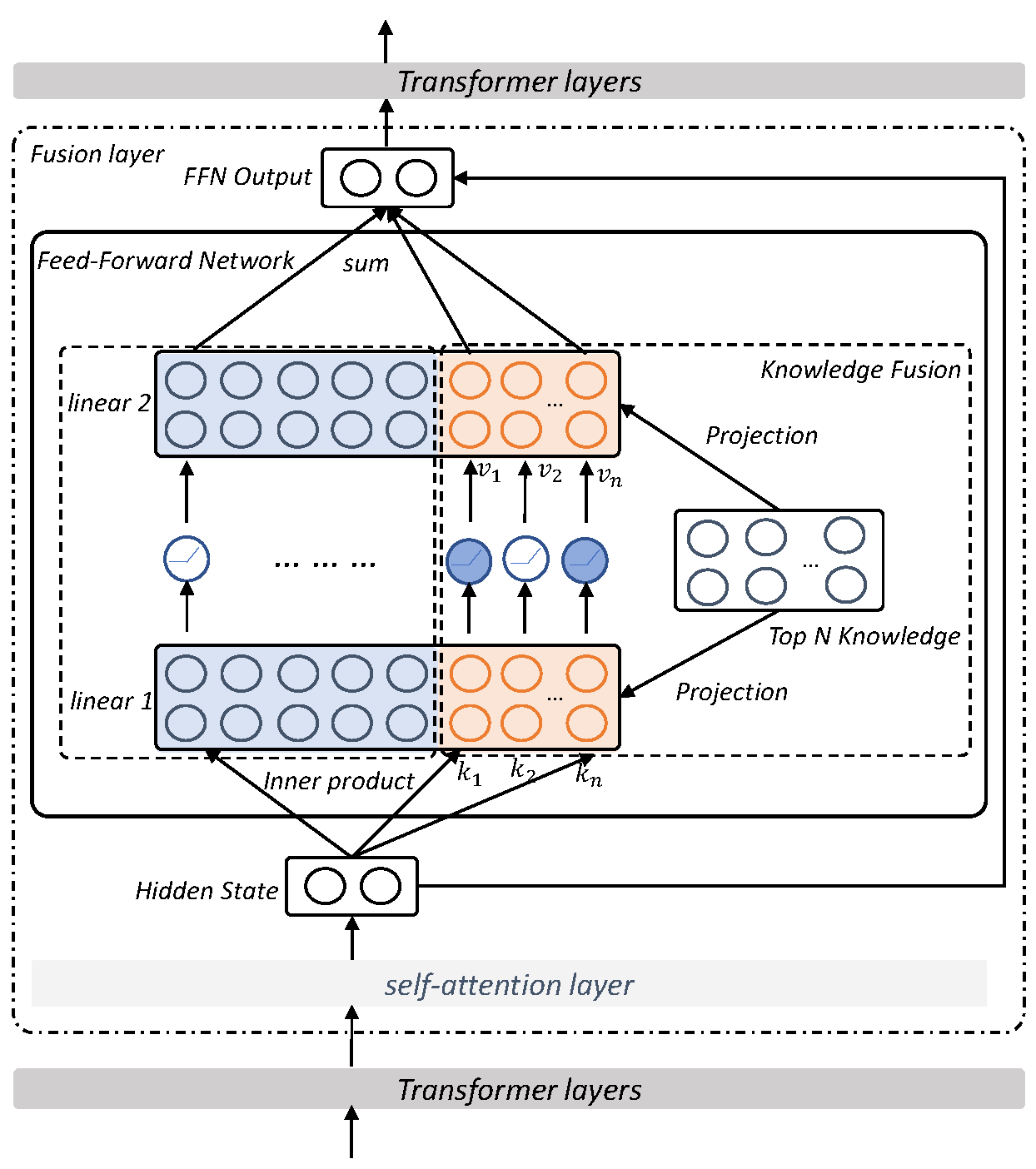

Recent years have witnessed a diverse set of knowledge injection models for pre-trained language models (PTMs); however, most previous studies neglect the large amount of implicit knowledge that PTMs already store in their parameters. A recent study has observed knowledge neurons in the Feed Forward Network (FFN), which are responsible for expressing factual knowledge. In this work, we propose a simple model, Kformer, which takes advantage of both the knowledge stored in PTMs and external knowledge via knowledge injection in Transformer FFN layers. Empirical results on two knowledge-intensive tasks, commonsense reasoning (i.e., SocialIQA) and medical question answering (i.e., MedQA-USMLE), demonstrate that Kformer can yield better performance than other knowledge injection techniques such as concatenation or attention-based injection. We think the proposed simple model and empirical findings may be helpful for the community to develop more powerful knowledge injection methods. Code is available at https://github.com/zjunlp/Kformer.
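
The abstract does not spell out how injection into an FFN layer works, so the following is only a minimal NumPy sketch of one plausible reading, not the authors' implementation. It assumes the FFN is viewed as a key-value memory, and that each external knowledge embedding is projected into one extra key and one extra value that are appended to the FFN weights. The names `ffn_with_knowledge`, `P_k`, and `P_v`, the omission of biases, and all shapes are hypothetical choices made for illustration.

```python
import numpy as np


def gelu(x):
    # Tanh approximation of GELU; note that gelu(0) == 0.
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def ffn(x, W1, W2):
    # Plain Transformer FFN without biases (a simplification).
    # x: (seq_len, d_model), W1: (d_model, d_ff), W2: (d_ff, d_model)
    return gelu(x @ W1) @ W2


def ffn_with_knowledge(x, W1, W2, knowledge, P_k, P_v):
    # Hypothetical injection: each knowledge embedding becomes one extra
    # "neuron" whose input weights are its key and output weights its value.
    # knowledge: (n_know, d_know), P_k and P_v: (d_know, d_model)
    keys = knowledge @ P_k      # (n_know, d_model)
    values = knowledge @ P_v    # (n_know, d_model)
    W1_ext = np.concatenate([W1, keys.T], axis=1)   # (d_model, d_ff + n_know)
    W2_ext = np.concatenate([W2, values], axis=0)   # (d_ff + n_know, d_model)
    return gelu(x @ W1_ext) @ W2_ext


# Toy usage with random weights (all sizes are arbitrary assumptions).
rng = np.random.default_rng(0)
seq_len, d_model, d_ff, n_know, d_know = 4, 8, 32, 3, 16
x = rng.normal(size=(seq_len, d_model))
W1 = rng.normal(size=(d_model, d_ff))
W2 = rng.normal(size=(d_ff, d_model))
knowledge = rng.normal(size=(n_know, d_know))
P_k = rng.normal(size=(d_know, d_model))
P_v = rng.normal(size=(d_know, d_model))

out = ffn_with_knowledge(x, W1, W2, knowledge, P_k, P_v)
```

Under these assumptions the output decomposes into the original FFN output plus a knowledge term, `gelu(x @ keys.T) @ values`, so the pre-trained parameters are left untouched and the input length does not grow, unlike concatenating the knowledge text to the input.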

MedQA

SIQA
