Learning Geometry-Disentangled Representation for Complementary Understanding of 3D Object Point Cloud

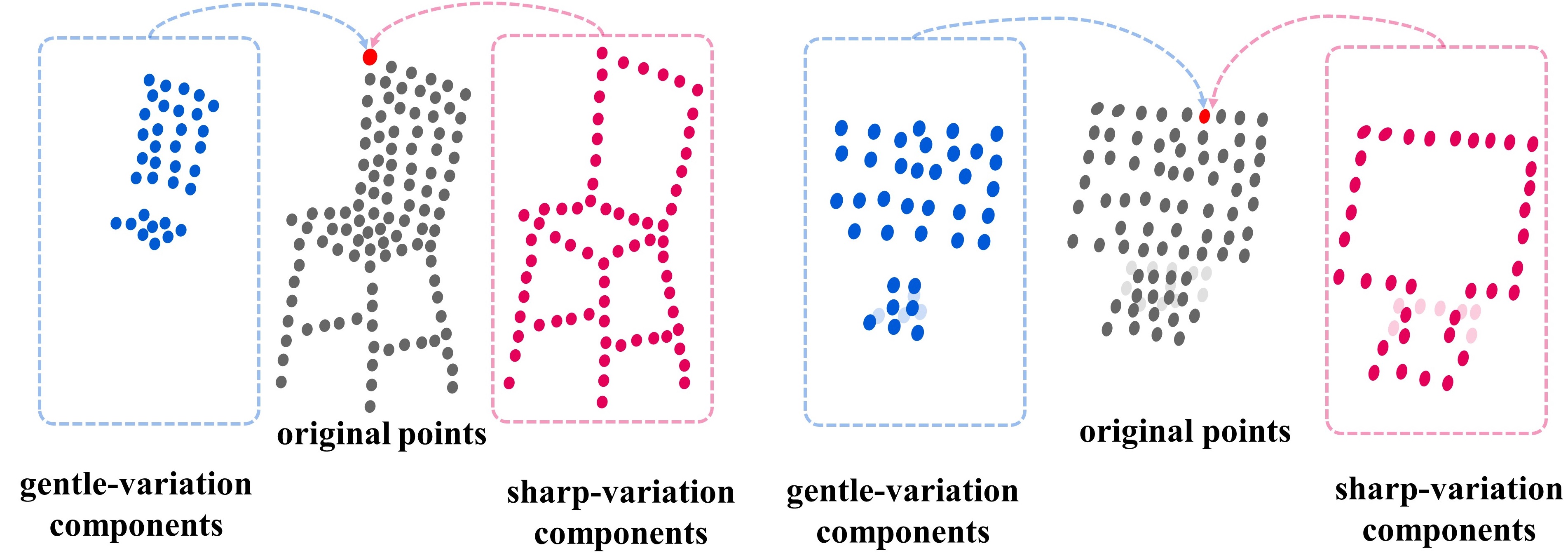

In 2D image processing, some attempts decompose images into high and low frequency components for describing edge and smooth parts respectively. Similarly, the contour and flat area of 3D objects, such as the boundary and seat area of a chair, describe different but also complementary geometries. However, such investigation is lost in previous deep networks that understand point clouds by directly treating all points or local patches equally. To solve this problem, we propose Geometry-Disentangled Attention Network (GDANet). GDANet introduces Geometry-Disentangle Module to dynamically disentangle point clouds into the contour and flat part of 3D objects, respectively denoted by sharp and gentle variation components. Then GDANet exploits Sharp-Gentle Complementary Attention Module that regards the features from sharp and gentle variation components as two holistic representations, and pays different attentions to them while fusing them respectively with original point cloud features. In this way, our method captures and refines the holistic and complementary 3D geometric semantics from two distinct disentangled components to supplement the local information. Extensive experiments on 3D object classification and segmentation benchmarks demonstrate that GDANet achieves the state-of-the-arts with fewer parameters. Code is released on https://github.com/mutianxu/GDANet.

PDF Abstract

ShapeNet

ShapeNet

ModelNet

ModelNet

ScanObjectNN

ScanObjectNN

PointCloud-C

PointCloud-C