Sliced Recursive Transformer

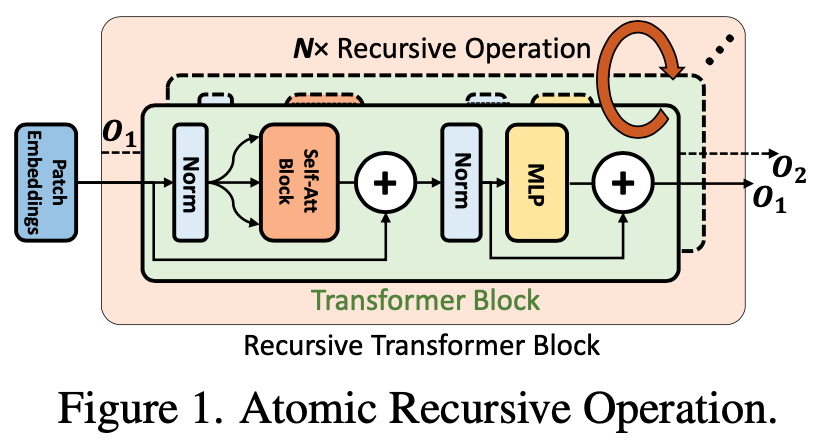

We present a neat yet effective recursive operation on vision transformers that improves parameter utilization without adding parameters. This is achieved by sharing weights across the depth of the transformer network. The proposed method obtains a substantial gain (~2%) using only a naive recursive operation, requires no special or sophisticated knowledge of network design principles, and introduces minimal computational overhead to the training procedure. To reduce the additional computation caused by the recursive operation while maintaining the superior accuracy, we propose an approximation method that uses multiple sliced group self-attentions across recursive layers, which reduces the computational cost by 10-30% with minimal performance loss. We call our model Sliced Recursive Transformer (SReT), a novel and parameter-efficient vision transformer design that is compatible with a broad range of other designs for efficient ViT architectures. Our best model achieves a significant improvement on ImageNet-1K over state-of-the-art methods while containing fewer parameters. The proposed weight-sharing mechanism, built on the sliced recursion structure, allows us to build a transformer with more than 100 or even 1000 shared layers with ease while keeping a compact size (13-15M), avoiding the optimization difficulties that arise when the model is too large. This flexible scalability shows great potential for scaling up models and constructing extremely deep vision transformers. Code is available at https://github.com/szq0214/SReT.
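
The two ideas in the abstract, weight sharing across depth and sliced group self-attention, can be illustrated with some back-of-the-envelope arithmetic. The sketch below is not taken from the SReT code base: the `Block` class, the helper names, and the sizes used are all hypothetical, biases and normalization layers are ignored, and the cost function counts only the two attention matrix products (QK^T and attention times V). It therefore shows the direction of the savings, not the 10-30% whole-model figure quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    """Hypothetical transformer block, described only by its sizes."""
    dim: int
    mlp_ratio: int = 4

    def param_count(self) -> int:
        # Q, K, V and output projections: 4 * dim^2 weights (biases ignored).
        attn = 4 * self.dim * self.dim
        # Two-layer MLP: dim -> mlp_ratio * dim -> dim.
        mlp = 2 * self.mlp_ratio * self.dim * self.dim
        return attn + mlp


def model_params(distinct_blocks: int, block: Block) -> int:
    """Parameter count depends on the number of distinct blocks only."""
    return distinct_blocks * block.param_count()


def effective_depth(distinct_blocks: int, loops: int) -> int:
    """Each block is applied `loops` times with the same weights."""
    return distinct_blocks * loops


def attention_matmul_cost(tokens: int, dim: int, groups: int = 1) -> int:
    """Multiply-adds for QK^T plus (attention @ V), tokens split into groups.

    Each group attends only within itself, so the quadratic term is paid
    on tokens / groups tokens, once per group.
    """
    if groups < 1 or tokens % groups != 0:
        raise ValueError("tokens must be divisible by a positive group count")
    per_group = tokens // groups
    return groups * 2 * per_group * per_group * dim


# Assumed sizes for illustration only.
block = Block(dim=384)
shared = model_params(distinct_blocks=12, block=block)
depth_plain = effective_depth(distinct_blocks=12, loops=1)
depth_recursive = effective_depth(distinct_blocks=12, loops=2)

full = attention_matmul_cost(tokens=196, dim=384, groups=1)
sliced = attention_matmul_cost(tokens=196, dim=384, groups=2)
```

Under these assumptions, looping each block twice doubles the effective depth while `shared` stays the same, and splitting the tokens into `groups` equal slices divides the attention matrix-product cost by `groups`. The projections and MLP are unaffected by slicing, which is one reason the end-to-end saving is smaller than the saving on this term alone.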

ImageNet