Image Model Blocks

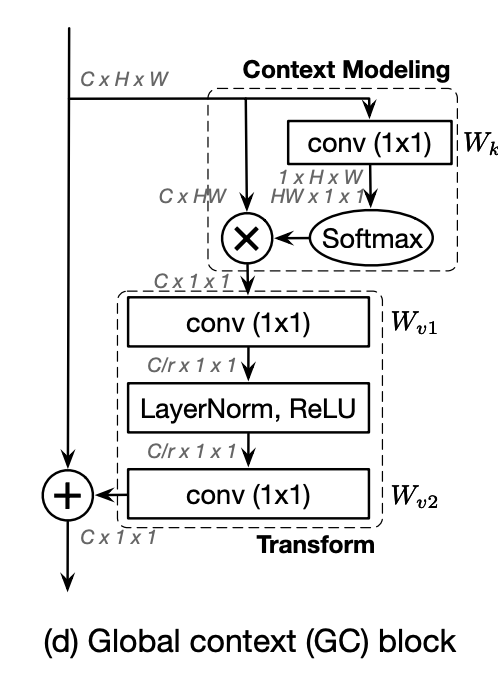

Global Context Block

Introduced by Cao et al. in GCNet: Non-local Networks Meet Squeeze-Excitation Networks and Beyond

A Global Context Block is an image model block for global context modeling. It aims to combine the benefits of the simplified non-local block, which models long-range dependencies effectively, with those of the squeeze-excitation block, which is computationally lightweight.

The Global Context framework has three stages: (a) global attention pooling, which applies a 1x1 convolution $W_{k}$ and a softmax function to obtain the attention weights, then performs attention pooling to obtain the global context features; (b) feature transform via a 1x1 convolution $W_{v}$; and (c) feature aggregation, which uses addition to aggregate the global context features into the features of each position. Taken as a whole, the GC block is proposed as a lightweight way to achieve global context modeling.
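The three stages can be sketched in plain Python to make the data flow concrete. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `global_context_block` is hypothetical, the feature map is assumed to be flattened to C channels by N positions, bias terms are left out, and the layer normalization and ReLU listed under Components are omitted so that only $W_{k}$ and $W_{v}$ from the description appear.

```python
import math


def global_context_block(x, w_k, w_v):
    """Simplified global context block (illustrative sketch, hypothetical name).

    x   : feature map flattened to C channels x N positions (list of lists)
    w_k : length-C weights of the 1x1 convolution that yields attention logits
    w_v : C x C weights of the 1x1 convolution used for the feature transform
    """
    num_channels, num_positions = len(x), len(x[0])

    # (a) Global attention pooling: one logit per position, then a softmax
    # over all positions.
    logits = [sum(w_k[c] * x[c][n] for c in range(num_channels))
              for n in range(num_positions)]
    max_logit = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - max_logit) for v in logits]
    total = sum(exps)
    attention = [e / total for e in exps]

    # The attention-weighted sum over positions gives one value per channel.
    context = [sum(attention[n] * x[c][n] for n in range(num_positions))
               for c in range(num_channels)]

    # (b) Feature transform: a 1x1 convolution applied to a single position
    # is a matrix-vector product.
    transformed = [sum(w_v[o][c] * context[c] for c in range(num_channels))
                   for o in range(num_channels)]

    # (c) Feature aggregation: add the same context vector to every position.
    output = [[x[c][n] + transformed[c] for n in range(num_positions)]
              for c in range(num_channels)]
    return output, attention
```

Calling `global_context_block(x, w_k, w_v)` returns the aggregated features together with the attention weights. Because the context vector is computed once and shared by all positions, the cost of the attention step grows linearly with the number of positions, which is the sense in which the block is lightweight. A practical version would typically be written with a deep learning framework's convolution and softmax layers.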

Source: GCNet: Non-local Networks Meet Squeeze-Excitation Networks and Beyond

Tasks

| Task | Papers | Share |
|---|---|---|
| Object Detection | 3 | 20.00% |
| Stereo Matching | 2 | 13.33% |
| Instance Segmentation | 2 | 13.33% |
| Point Cloud Registration | 1 | 6.67% |
| Metric Learning | 1 | 6.67% |
| Robot Navigation | 1 | 6.67% |
| Management | 1 | 6.67% |
| Multi-Object Tracking | 1 | 6.67% |
| Object Tracking | 1 | 6.67% |

Components

| Component | Type |
|---|---|
| 1x1 Convolution | Convolutions |
| Layer Normalization | Normalization |
| ReLU | Activation Functions |
| Residual Connection | Skip Connections |
| Softmax | Output Functions |