Increasingly Packing Multiple Facial-Informatics Modules in A Unified Deep-Learning Model via Lifelong Learning

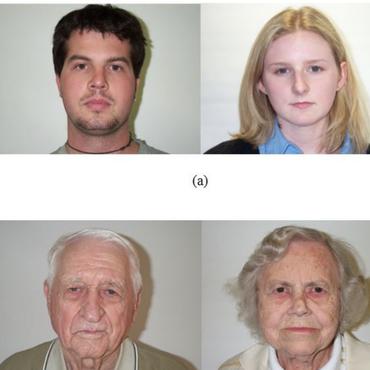

Running multiple modules simultaneously is a key requirement for smart multimedia systems in facial applications, including face recognition, facial expression understanding, and gender identification. To integrate these modules effectively, we introduce a continual learning approach that learns new tasks without forgetting earlier ones. Unlike previous methods, which grow monotonically in size, our approach keeps the model compact throughout continual learning. The proposed packing-and-expanding method is effective and easy to implement: it iteratively shrinks and enlarges the model to integrate new functions. Our integrated multitask model achieves similar accuracy at only 39.9% of the original size.
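To make the shrink-then-enlarge loop concrete, the following is a minimal NumPy sketch on a single weight matrix, not the authors' implementation. It assumes magnitude pruning as the packing step and appended columns as the expansion step; the abstract specifies neither. The names `pack`, `expand`, `keep_ratio`, and `extra_cols` are hypothetical, and training is replaced by random values.

```python
import numpy as np

def pack(weights, free_mask, keep_ratio):
    """Keep the largest-magnitude fraction of the free weights for the
    current task (assumed packing rule) and return them as a boolean mask."""
    idx = np.flatnonzero(free_mask)
    n_keep = int(round(keep_ratio * idx.size))
    order = np.argsort(np.abs(weights.ravel()[idx]))[::-1]
    task_mask = np.zeros(weights.size, dtype=bool)
    task_mask[idx[order[:n_keep]]] = True
    return task_mask.reshape(weights.shape)

def expand(weights, masks, free_mask, extra_cols, rng):
    """Append new columns (assumed expansion rule). Existing task masks are
    padded with False; the new weights are free for later tasks."""
    rows = weights.shape[0]
    new_w = rng.normal(scale=0.01, size=(rows, extra_cols))
    pad = np.zeros((rows, extra_cols), dtype=bool)
    weights = np.concatenate([weights, new_w], axis=1)
    masks = [np.concatenate([m, pad], axis=1) for m in masks]
    free_mask = np.concatenate([free_mask, ~pad], axis=1)
    return weights, masks, free_mask

rng = np.random.default_rng(0)
W = np.zeros((4, 6))
free = np.ones_like(W, dtype=bool)
owned, snapshots = [], []

for task in range(2):
    # Stand-in for training: only the free weights are updated,
    # so weights owned by earlier tasks stay frozen.
    W[free] = rng.normal(size=int(free.sum()))
    task_mask = pack(W, free, keep_ratio=0.5)
    W[free & ~task_mask] = 0.0   # release the pruned weights
    free &= ~task_mask           # kept weights now belong to this task
    owned.append(task_mask)
    snapshots.append(W[task_mask].copy())

# When too little free capacity remains, enlarge the layer.
W, owned, free = expand(W, owned, free, extra_cols=2, rng=rng)
```

In this sketch each task owns a disjoint set of weights, which is what prevents forgetting: later tasks only ever write to weights that are still free.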


Results from the Paper

Ranked #1 on Gender Prediction on FotW Gender (using extra training data)

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Uses Extra Training Data |
|---|---|---|---|---|---|---|
| Age And Gender Classification | Adience Age | PAENet (single crop, tensorflow) | Accuracy (5-fold) | 57.3 | #11 | |
| Age And Gender Classification | Adience Gender | PAENet (single crop, tensorflow) | Accuracy (5-fold) | 89.08 | #6 | |
| Facial Expression Recognition (FER) | AffectNet | PAENet | Accuracy (7 emotion) | 65.29 | #17 | |
| Continual Learning | Cifar100 (20 tasks) | PAENet | Average Accuracy | 77.1 | #7 | |
| Gender Prediction | FotW Gender | PAENet | Accuracy (%) | 92.93 | #1 | Yes |

CIFAR-100

AffectNet

Adience
