Search Results for author: Runjian Chen

Found 12 papers, 8 papers with code

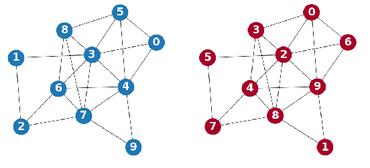

CoSimGNN: Towards Large-scale Graph Similarity Computation

no code implementations • 14 May 2020 • Haoyan Xu, Runjian Chen, Yueyang Wang, Ziheng Duan, Jie Feng

In this paper, we focus on similarity computation for large-scale graphs and propose the "embedding-coarsening-matching" framework CoSimGNN, which first embeds and coarsens large graphs with adaptive pooling operation and then deploys fine-grained interactions on the coarsened graphs for final similarity scores.

Graph Partitioning and Graph Neural Network based Hierarchical Graph Matching for Graph Similarity Computation

no code implementations • 16 May 2020 • Haoyan Xu, Ziheng Duan, Jie Feng, Runjian Chen, Qianru Zhang, Zhongbin Xu, Yueyang Wang

Next, a novel graph neural network with an attention mechanism is designed to map each subgraph into an embedding vector.

RaLL: End-to-end Radar Localization on Lidar Map Using Differentiable Measurement Model

1 code implementation • 15 Sep 2020 • Huan Yin, Runjian Chen, Yue Wang, Rong Xiong

In this paper, we propose an end-to-end deep learning framework for Radar Localization on Lidar Map (RaLL) to bridge the gap, which not only achieves robust radar localization but also exploits the mature lidar mapping technique, thus reducing the cost of radar mapping.

CycleMLP: A MLP-like Architecture for Dense Prediction

8 code implementations • ICLR 2022 • Shoufa Chen, Enze Xie, Chongjian Ge, Runjian Chen, Ding Liang, Ping Luo

We build a family of models which surpass existing MLPs and even state-of-the-art Transformer-based models, e.g., Swin Transformer, while using fewer parameters and FLOPs.

Ranked #15 on Semantic Segmentation on DensePASS

RestoreFormer: High-Quality Blind Face Restoration from Undegraded Key-Value Pairs

1 code implementation • CVPR 2022 • Zhouxia Wang, Jiawei Zhang, Runjian Chen, Wenping Wang, Ping Luo

Blind face restoration aims to recover a high-quality face image from unknown degradations.

CO^3: Cooperative Unsupervised 3D Representation Learning for Autonomous Driving

1 code implementation • 8 Jun 2022 • Runjian Chen, Yao Mu, Runsen Xu, Wenqi Shao, Chenhan Jiang, Hang Xu, Zhenguo Li, Ping Luo

In this paper, we propose CO^3, namely Cooperative Contrastive Learning and Contextual Shape Prediction, to learn 3D representation for outdoor-scene point clouds in an unsupervised manner.

CtrlFormer: Learning Transferable State Representation for Visual Control via Transformer

1 code implementation • 17 Jun 2022 • Yao Mu, Shoufa Chen, Mingyu Ding, Jianyu Chen, Runjian Chen, Ping Luo

In visual control, learning a transferable state representation that can transfer between different control tasks is important for reducing the number of training samples required.

MV-JAR: Masked Voxel Jigsaw and Reconstruction for LiDAR-Based Self-Supervised Pre-Training

1 code implementation • CVPR 2023 • Runsen Xu, Tai Wang, Wenwei Zhang, Runjian Chen, Jinkun Cao, Jiangmiao Pang, Dahua Lin

This paper introduces the Masked Voxel Jigsaw and Reconstruction (MV-JAR) method for LiDAR-based self-supervised pre-training and a carefully designed data-efficient 3D object detection benchmark on the Waymo dataset.

SPOT: Scalable 3D Pre-training via Occupancy Prediction for Autonomous Driving

1 code implementation • 19 Sep 2023 • Xiangchao Yan, Runjian Chen, Bo Zhang, Jiakang Yuan, Xinyu Cai, Botian Shi, Wenqi Shao, Junchi Yan, Ping Luo, Yu Qiao

Our contributions are threefold: (1) Occupancy prediction is shown to be promising for learning general representations, as demonstrated by extensive experiments on many datasets and tasks.

CurriculumLoc: Enhancing Cross-Domain Geolocalization through Multi-Stage Refinement

1 code implementation • 20 Nov 2023 • Boni Hu, Lin Chen, Runjian Chen, Shuhui Bu, Pengcheng Han, Haowei Li

Visual geolocalization is a cost-effective and scalable task that involves matching one or more query images, taken at some unknown location, to a set of geo-tagged reference images.

RoboCodeX: Multimodal Code Generation for Robotic Behavior Synthesis

no code implementations • 25 Feb 2024 • Yao Mu, Junting Chen, Qinglong Zhang, Shoufa Chen, Qiaojun Yu, Chongjian Ge, Runjian Chen, Zhixuan Liang, Mengkang Hu, Chaofan Tao, Peize Sun, Haibao Yu, Chao Yang, Wenqi Shao, Wenhai Wang, Jifeng Dai, Yu Qiao, Mingyu Ding, Ping Luo

Robotic behavior synthesis, the problem of understanding multimodal inputs and generating precise physical control for robots, is an important part of Embodied AI.

Ranked #72 on Visual Question Answering on MM-Vet

Towards Implicit Prompt For Text-To-Image Models

no code implementations • 4 Mar 2024 • Yue Yang, Yuqi Lin, Hong Liu, Wenqi Shao, Runjian Chen, Hailong Shang, Yu Wang, Yu Qiao, Kaipeng Zhang, Ping Luo

We call for increased attention to the potential and risks of implicit prompts in the T2I community and further investigation into the capabilities and impacts of implicit prompts, advocating for a balanced approach that harnesses their benefits while mitigating their risks.