ParCo: Part-Coordinating Text-to-Motion Synthesis

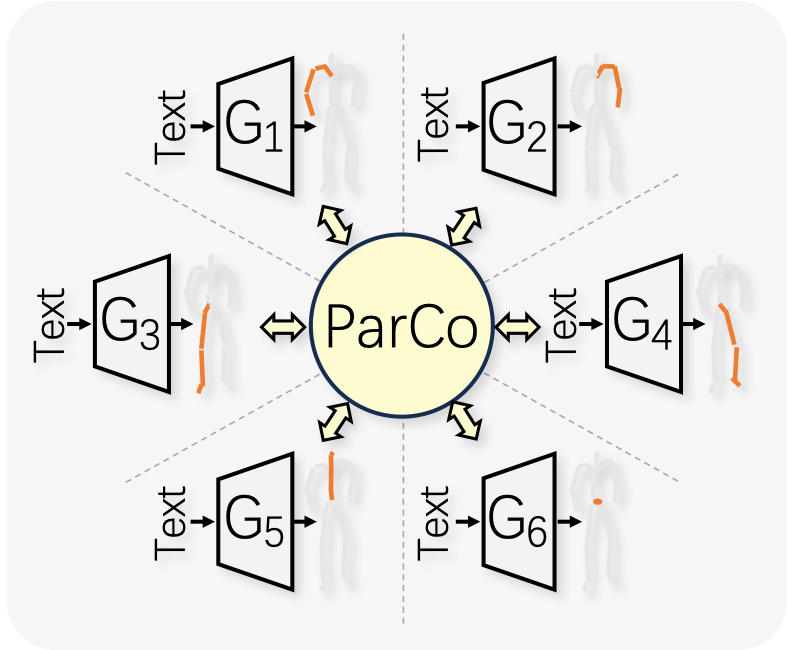

We study a challenging task: text-to-motion synthesis, aiming to generate motions that align with textual descriptions and exhibit coordinated movements. Currently, the part-based methods introduce part partition into the motion synthesis process to achieve finer-grained generation. However, these methods encounter challenges such as the lack of coordination between different part motions and difficulties for networks to understand part concepts. Moreover, introducing finer-grained part concepts poses computational complexity challenges. In this paper, we propose Part-Coordinating Text-to-Motion Synthesis (ParCo), endowed with enhanced capabilities for understanding part motions and communication among different part motion generators, ensuring a coordinated and fined-grained motion synthesis. Specifically, we discretize whole-body motion into multiple part motions to establish the prior concept of different parts. Afterward, we employ multiple lightweight generators designed to synthesize different part motions and coordinate them through our part coordination module. Our approach demonstrates superior performance on common benchmarks with economic computations, including HumanML3D and KIT-ML, providing substantial evidence of its effectiveness. Code is available at https://github.com/qrzou/ParCo .

PDF Abstract

HumanML3D

HumanML3D