Language as Queries for Referring Video Object Segmentation

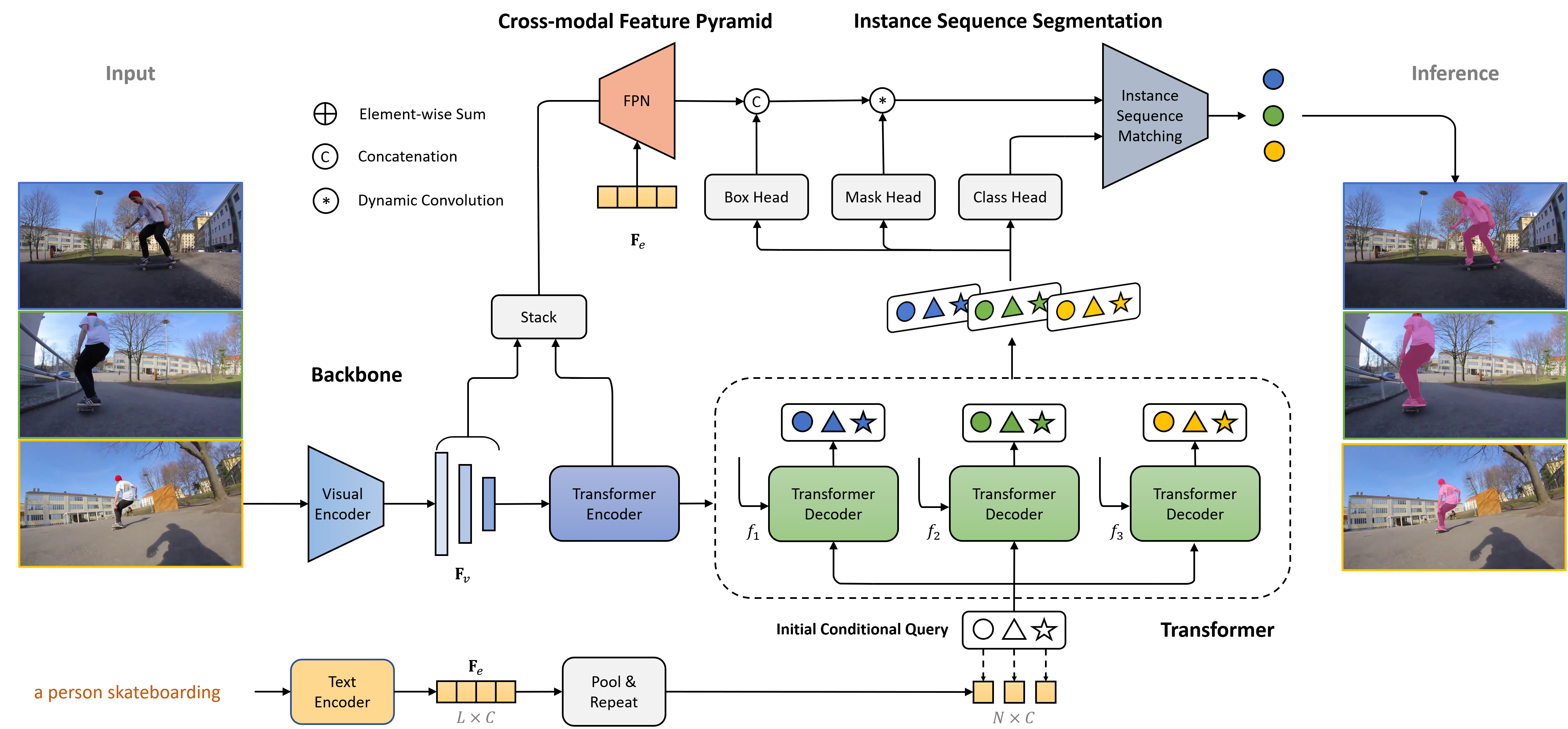

Referring video object segmentation (R-VOS) is an emerging cross-modal task that aims to segment the target object referred to by a language expression in all video frames. In this work, we propose a simple and unified framework built upon the Transformer, termed ReferFormer. It views the language as queries and directly attends to the most relevant regions in the video frames. Concretely, we introduce a small set of object queries conditioned on the language as the input to the Transformer. In this manner, all the queries are obligated to find only the referred object. They are eventually transformed into dynamic kernels that capture the crucial object-level information and play the role of convolution filters to generate the segmentation masks from feature maps. Object tracking is achieved naturally by linking the corresponding queries across frames. This mechanism greatly simplifies the pipeline, and the resulting end-to-end framework is significantly different from previous methods. Extensive experiments on Ref-Youtube-VOS, Ref-DAVIS17, A2D-Sentences and JHMDB-Sentences show the effectiveness of ReferFormer. On Ref-Youtube-VOS, ReferFormer achieves 55.6 J&F with a ResNet-50 backbone without bells and whistles, exceeding the previous state-of-the-art performance by 8.4 points. In addition, with the strong Swin-Large backbone, ReferFormer achieves the best J&F of 64.2 among all existing methods. Moreover, we show impressive results of 55.0 mAP and 43.7 mAP on A2D-Sentences and JHMDB-Sentences respectively, which outperform previous methods by a large margin. Code is publicly available at https://github.com/wjn922/ReferFormer.
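The abstract describes the mechanism only in words, so the following is a minimal, hypothetical NumPy sketch of the idea: language-conditioned queries, queries turned into dynamic 1x1 convolution kernels, and tracking by linking the same query index across frames. It is not the ReferFormer implementation (see the linked repository for that). Every function name is illustrative, and several simplifications are assumptions: the sentence feature is simply added to the learnable queries, one single-head cross-attention step stands in for the Transformer decoder, a single linear layer produces each kernel, the kernels are applied to the same frame features that were attended to, and the track with the highest mean score is kept.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def condition_queries(query_embed, sentence_feat):
    # Assumption: additive conditioning; all N queries receive the same
    # pooled sentence vector, so every query is steered toward the referent.
    return query_embed + sentence_feat[None, :]            # (N, C)

def cross_attend(queries, frame_feat):
    # Hypothetical stand-in for the Transformer decoder: one single-head
    # cross-attention step from the N queries to the H*W pixels of a frame.
    c = frame_feat.shape[0]
    pixels = frame_feat.reshape(c, -1).T                   # (H*W, C)
    attn = softmax(queries @ pixels.T / np.sqrt(c))        # (N, H*W)
    return queries + attn @ pixels                         # residual update, (N, C)

def dynamic_mask_logits(kernels, frame_feat):
    # Each query's kernel acts as a 1x1 convolution filter over the feature map.
    return np.einsum("nc,chw->nhw", kernels, frame_feat)   # (N, H, W)

def segment_video(video_feats, sentence_feat, query_embed, w_kernel, w_score):
    # video_feats: (T, C, H, W). The same conditioned queries are fed to every
    # frame, so query index n denotes the same candidate object over time.
    queries = condition_queries(query_embed, sentence_feat)
    scores, logits = [], []
    for frame_feat in video_feats:
        out = cross_attend(queries, frame_feat)            # (N, C)
        kernels = out @ w_kernel                           # (N, C) dynamic kernels
        scores.append(out @ w_score)                       # (N,) referring scores
        logits.append(dynamic_mask_logits(kernels, frame_feat))
    scores = np.stack(scores)                              # (T, N)
    logits = np.stack(logits)                              # (T, N, H, W)
    # Linking: query n across all frames forms one track; keep the track
    # with the highest mean score and binarize its masks.
    best = int(np.argmax(scores.mean(axis=0)))
    return best, logits[:, best] > 0.0                     # sigmoid(x) > 0.5 iff x > 0

# Usage with random features and weights (T frames, C channels, N queries).
rng = np.random.default_rng(0)
T, C, H, W, N = 3, 8, 4, 5, 5
video = rng.standard_normal((T, C, H, W))
sentence = rng.standard_normal(C)
query_embed = rng.standard_normal((N, C))
w_kernel = rng.standard_normal((C, C)) / np.sqrt(C)
w_score = rng.standard_normal(C)
best, masks = segment_video(video, sentence, query_embed, w_kernel, w_score)
print(best, masks.shape)  # masks has shape (T, H, W) = (3, 4, 5)
```

Because the queries are shared across frames, no separate association step is needed: the track is simply one column of the per-frame score matrix, which mirrors the abstract's claim that tracking falls out of linking corresponding queries.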

CVPR 2022

Results from the Paper

Ranked #3 on Referring Expression Segmentation on A2D Sentences (using extra training data)

Datasets

MS COCO

DAVIS

RefCOCO

DAVIS 2017

JHMDB

Referring Expressions for DAVIS 2016 & 2017

Refer-YouTube-VOS

A2D Sentences

MeViS