Search Results for author: Xuemeng Song

Found 20 papers, 8 papers with code

MMGRec: Multimodal Generative Recommendation with Transformer Model

no code implementations • 25 Apr 2024 • Han Liu, Yinwei Wei, Xuemeng Song, Weili Guan, Yuan-Fang Li, Liqiang Nie

Multimodal recommendation aims to recommend user-preferred candidates based on her/his historically interacted items and associated multimodal information.

Interactive Garment Recommendation with User in the Loop

no code implementations • 18 Feb 2024 • Federico Becattini, Xiaolin Chen, Andrea Puccia, Haokun Wen, Xuemeng Song, Liqiang Nie, Alberto del Bimbo

Recommending fashion items often leverages rich user profiles and makes targeted suggestions based on past history and previous purchases.

Sentiment-enhanced Graph-based Sarcasm Explanation in Dialogue

no code implementations • 6 Feb 2024 • Kun Ouyang, Liqiang Jing, Xuemeng Song, Meng Liu, Yupeng Hu, Liqiang Nie

Although existing studies have achieved great success based on the generative pretrained language model BART, they overlook exploiting the sentiments residing in the utterance, video and audio, which are vital clues for sarcasm explanation.

Prompt-based Multi-interest Learning Method for Sequential Recommendation

no code implementations • 9 Jan 2024 • Xue Dong, Xuemeng Song, Tongliang Liu, Weili Guan

Multi-interest learning method for sequential recommendation aims to predict the next item according to user multi-faceted interests given the user historical interactions.

VK-G2T: Vision and Context Knowledge enhanced Gloss2Text

no code implementations • 15 Dec 2023 • Liqiang Jing, Xuemeng Song, Xinxing Zu, Na Zheng, Zhongzhou Zhao, Liqiang Nie

Existing sign language translation methods follow a two-stage pipeline: first converting the sign language video to a gloss sequence (i.e., Sign2Gloss) and then translating the generated gloss sequence into a spoken language sentence (i.e., Gloss2Text).

Multi-source Semantic Graph-based Multimodal Sarcasm Explanation Generation

1 code implementation • 29 Jun 2023 • Liqiang Jing, Xuemeng Song, Kun Ouyang, Mengzhao Jia, Liqiang Nie

Multimodal Sarcasm Explanation (MuSE) is a new yet challenging task, which aims to generate a natural language sentence for a multimodal social post (an image as well as its caption) to explain why it contains sarcasm.

Dual Semantic Knowledge Composed Multimodal Dialog Systems

no code implementations • 17 May 2023 • Xiaolin Chen, Xuemeng Song, Yinwei Wei, Liqiang Nie, Tat-Seng Chua

Thereafter, considering that the attribute knowledge and relation knowledge can benefit the responding to different levels of questions, we design a multi-level knowledge composition module in MDS-S2 to obtain the latent composed response representation.

Stylized Data-to-Text Generation: A Case Study in the E-Commerce Domain

no code implementations • 5 May 2023 • Liqiang Jing, Xuemeng Song, Xuming Lin, Zhongzhou Zhao, Wei Zhou, Liqiang Nie

This task is non-trivial, due to three challenges: the logic of the generated text, unstructured style reference, and biased training samples.

Multimodal Matching-aware Co-attention Networks with Mutual Knowledge Distillation for Fake News Detection

no code implementations • 12 Dec 2022 • Linmei Hu, Ziwang Zhao, Weijian Qi, Xuemeng Song, Liqiang Nie

Additionally, based on the designed image-text matching-aware co-attention mechanism, we propose to build two co-attention networks respectively centered on text and image for mutual knowledge distillation to improve fake news detection.

Counterfactual Reasoning for Out-of-distribution Multimodal Sentiment Analysis

1 code implementation • 24 Jul 2022 • Teng Sun, Wenjie Wang, Liqiang Jing, Yiran Cui, Xuemeng Song, Liqiang Nie

Inspired by this, we devise a model-agnostic counterfactual framework for multimodal sentiment analysis, which captures the direct effect of textual modality via an extra text model and estimates the indirect one by a multimodal model.

Multimodal Dialog Systems with Dual Knowledge-enhanced Generative Pretrained Language Model

no code implementations • 16 Jul 2022 • Xiaolin Chen, Xuemeng Song, Liqiang Jing, Shuo Li, Linmei Hu, Liqiang Nie

To address these limitations, we propose a novel dual knowledge-enhanced generative pretrained language model for multimodal task-oriented dialog systems (DKMD), consisting of three key components: dual knowledge selection, dual knowledge-enhanced context learning, and knowledge-enhanced response generation.

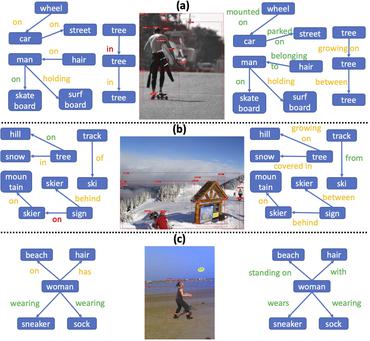

Stacked Hybrid-Attention and Group Collaborative Learning for Unbiased Scene Graph Generation

1 code implementation • CVPR 2022 • Xingning Dong, Tian Gan, Xuemeng Song, Jianlong Wu, Yuan Cheng, Liqiang Nie

Scene Graph Generation, which generally follows a regular encoder-decoder pipeline, aims to first encode the visual contents within the given image and then parse them into a compact summary graph.

Ranked #1 on Unbiased Scene Graph Generation on Visual Genome (mR@20 metric)

MERIt: Meta-Path Guided Contrastive Learning for Logical Reasoning

1 code implementation • Findings (ACL) 2022 • Fangkai Jiao, Yangyang Guo, Xuemeng Song, Liqiang Nie

Logical reasoning is of vital importance to natural language understanding.

Ranked #3 on Reading Comprehension on ReClor

Dual Preference Distribution Learning for Item Recommendation

no code implementations • 24 Jan 2022 • Xue Dong, Xuemeng Song, Na Zheng, Yinwei Wei, Zhongzhou Zhao

Moreover, we can summarize a preferred attribute profile for each user, depicting his/her preferred item attributes.

Hierarchical Deep Residual Reasoning for Temporal Moment Localization

1 code implementation • 31 Oct 2021 • Ziyang Ma, Xianjing Han, Xuemeng Song, Yiran Cui, Liqiang Nie

Temporal Moment Localization (TML) in untrimmed videos is a challenging task in the field of multimedia, which aims at localizing the start and end points of the activity in the video, described by a sentence query.

Multi-Modal Interaction Graph Convolutional Network for Temporal Language Localization in Videos

1 code implementation • 12 Oct 2021 • Zongmeng Zhang, Xianjing Han, Xuemeng Song, Yan Yan, Liqiang Nie

Towards this end, in this work, we propose a Multi-modal Interaction Graph Convolutional Network (MIGCN), which jointly explores the complex intra-modal relations and inter-modal interactions residing in the video and sentence query to facilitate the understanding and semantic correspondence capture of the video and sentence query.

Answer Questions with Right Image Regions: A Visual Attention Regularization Approach

1 code implementation • 3 Feb 2021 • Yibing Liu, Yangyang Guo, Jianhua Yin, Xuemeng Song, Weifeng Liu, Liqiang Nie

However, recent studies have pointed out that the highlighted image regions from the visual attention are often irrelevant to the given question and answer, leading to model confusion for correct visual reasoning.

Market2Dish: Health-aware Food Recommendation

1 code implementation • 11 Dec 2020 • Wenjie Wang, Ling-Yu Duan, Hao Jiang, Peiguang Jing, Xuemeng Song, Liqiang Nie

With the rising incidence of some diseases, such as obesity and diabetes, a healthy diet is arousing increasing attention.

Adaptive Collaborative Similarity Learning for Unsupervised Multi-view Feature Selection

no code implementations • 25 Apr 2019 • Xiao Dong, Lei Zhu, Xuemeng Song, Jingjing Li, Zhiyong Cheng

We propose to dynamically learn the collaborative similarity structure, and further integrate it with the ultimate feature selection into a unified framework.

Neural Compatibility Modeling with Attentive Knowledge Distillation

no code implementations • 17 Apr 2018 • Xuemeng Song, Fuli Feng, Xianjing Han, Xin Yang, Wei Liu, Liqiang Nie

Nevertheless, existing studies overlook the rich valuable knowledge (rules) accumulated in fashion domain, especially the rules regarding clothing matching.