Search Results for author: Junjie Hu

Found 72 papers, 40 papers with code

XTREME: A Massively Multilingual Multi-task Benchmark for Evaluating Cross-lingual Generalisation

2 code implementations • ICML 2020 • Junjie Hu, Sebastian Ruder, Aditya Siddhant, Graham Neubig, Orhan Firat, Melvin Johnson

However, these broad-coverage benchmarks have been mostly limited to English, and despite an increasing interest in multilingual models, a benchmark that enables the comprehensive evaluation of such methods on a diverse range of languages and tasks is still missing.

Ranked #1 on Zero-Shot Cross-Lingual Transfer on XTREME (AVG metric)

Automatic Quantification of Serial PET/CT Images for Pediatric Hodgkin Lymphoma Patients Using a Longitudinally-Aware Segmentation Network

1 code implementation • 12 Apr 2024 • Xin Tie, Muheon Shin, Changhee Lee, Scott B. Perlman, Zachary Huemann, Amy J. Weisman, Sharon M. Castellino, Kara M. Kelly, Kathleen M. McCarten, Adina L. Alazraki, Junjie Hu, Steve Y. Cho, Tyler J. Bradshaw

For baseline segmentation, LAS-Net achieved a mean Dice score of 0.772.

How does Multi-Task Training Affect Transformer In-Context Capabilities? Investigations with Function Classes

1 code implementation • 4 Apr 2024 • Harmon Bhasin, Timothy Ossowski, Yiqiao Zhong, Junjie Hu

Large language models (LLMs) have recently shown the extraordinary ability to perform unseen tasks based on few-shot examples provided as text, also known as in-context learning (ICL).

Lookahead Exploration with Neural Radiance Representation for Continuous Vision-Language Navigation

1 code implementation • 2 Apr 2024 • Zihan Wang, Xiangyang Li, Jiahao Yang, Yeqi Liu, Junjie Hu, Ming Jiang, Shuqiang Jiang

Vision-and-language navigation (VLN) enables an agent to navigate to a remote location in 3D environments by following natural language instructions.

Data Augmentation using LLMs: Data Perspectives, Learning Paradigms and Challenges

no code implementations • 5 Mar 2024 • Bosheng Ding, Chengwei Qin, Ruochen Zhao, Tianze Luo, Xinze Li, Guizhen Chen, Wenhan Xia, Junjie Hu, Anh Tuan Luu, Shafiq Joty

In the rapidly evolving field of machine learning (ML), data augmentation (DA) has emerged as a pivotal technique for enhancing model performance by diversifying training examples without the need for additional data collection.

Mitigating Fine-tuning Jailbreak Attack with Backdoor Enhanced Alignment

no code implementations • 22 Feb 2024 • Jiongxiao Wang, Jiazhao Li, Yiquan Li, Xiangyu Qi, Junjie Hu, Yixuan Li, Patrick McDaniel, Muhao Chen, Bo Li, Chaowei Xiao

Despite the general capabilities of Large Language Models (LLMs) like GPT-4 and Llama-2, these models still require fine-tuning or adaptation with customized data to meet the specific business demands and intricacies of tailored use cases.

Chatbot Meets Pipeline: Augment Large Language Model with Definite Finite Automaton

no code implementations • 6 Feb 2024 • Yiyou Sun, Junjie Hu, Wei Cheng, Haifeng Chen

This paper introduces the Definite Finite Automaton augmented large language model (DFA-LLM), a novel framework designed to enhance the capabilities of conversational agents using large language models (LLMs).

How Useful is Continued Pre-Training for Generative Unsupervised Domain Adaptation?

no code implementations • 31 Jan 2024 • Rheeya Uppaal, Yixuan Li, Junjie Hu

In this work, we evaluate the utility of continued pre-training (CPT) for generative unsupervised domain adaptation (UDA).

Learning Label Hierarchy with Supervised Contrastive Learning

1 code implementation • 31 Jan 2024 • Ruixue Lian, William A. Sethares, Junjie Hu

This paper introduces a family of Label-Aware SCL methods (LASCL) that incorporates hierarchical information into supervised contrastive learning (SCL) by leveraging similarities between classes, resulting in a more well-structured and discriminative feature space.

Prompting Large Vision-Language Models for Compositional Reasoning

1 code implementation • 20 Jan 2024 • Timothy Ossowski, Ming Jiang, Junjie Hu

Vision-language models such as CLIP have shown impressive capabilities in encoding texts and images into aligned embeddings, enabling the retrieval of multimodal data in a shared embedding space.

Ranked #22 on Visual Reasoning on Winoground

Simulating Opinion Dynamics with Networks of LLM-based Agents

1 code implementation • 16 Nov 2023 • Yun-Shiuan Chuang, Agam Goyal, Nikunj Harlalka, Siddharth Suresh, Robert Hawkins, Sijia Yang, Dhavan Shah, Junjie Hu, Timothy T. Rogers

Accurately simulating human opinion dynamics is crucial for understanding a variety of societal phenomena, including polarization and the spread of misinformation.

Evolving Domain Adaptation of Pretrained Language Models for Text Classification

no code implementations • 16 Nov 2023 • Yun-Shiuan Chuang, Yi Wu, Dhruv Gupta, Rheeya Uppaal, Ananya Kumar, Luhang Sun, Makesh Narsimhan Sreedhar, Sijia Yang, Timothy T. Rogers, Junjie Hu

Adapting pre-trained language models (PLMs) for time-series text classification amidst evolving domain shifts (EDS) is critical for maintaining accuracy in applications like stance detection.

The Wisdom of Partisan Crowds: Comparing Collective Intelligence in Humans and LLM-based Agents

no code implementations • 16 Nov 2023 • Yun-Shiuan Chuang, Siddharth Suresh, Nikunj Harlalka, Agam Goyal, Robert Hawkins, Sijia Yang, Dhavan Shah, Junjie Hu, Timothy T. Rogers

Human groups are able to converge on more accurate beliefs through deliberation, even in the presence of polarization and partisan bias -- a phenomenon known as the "wisdom of partisan crowds."

CFBenchmark: Chinese Financial Assistant Benchmark for Large Language Model

1 code implementation • 10 Nov 2023 • Yang Lei, Jiangtong Li, Ming Jiang, Junjie Hu, Dawei Cheng, Zhijun Ding, Changjun Jiang

Large language models (LLMs) have demonstrated great potential in the financial domain.

Automatic Personalized Impression Generation for PET Reports Using Large Language Models

2 code implementations • 18 Sep 2023 • Xin Tie, Muheon Shin, Ali Pirasteh, Nevein Ibrahim, Zachary Huemann, Sharon M. Castellino, Kara M. Kelly, John Garrett, Junjie Hu, Steve Y. Cho, Tyler J. Bradshaw

Twelve language models were trained on a corpus of PET reports using the teacher-forcing algorithm, with the report findings as input and the clinical impressions as reference.

Single Sequence Prediction over Reasoning Graphs for Multi-hop QA

no code implementations • 1 Jul 2023 • Gowtham Ramesh, Makesh Sreedhar, Junjie Hu

Recent generative approaches for multi-hop question answering (QA) utilize the fusion-in-decoder method (Izacard and Grave, 2021) to generate a single sequence output that includes both a final answer and the reasoning path taken to arrive at that answer, such as passage titles and key facts from those passages.

Multimodal Prompt Retrieval for Generative Visual Question Answering

1 code implementation • 30 Jun 2023 • Timothy Ossowski, Junjie Hu

Recent years have witnessed impressive results of pre-trained vision-language models on knowledge-intensive tasks such as visual question answering (VQA).

Benchmarking LLM-based Machine Translation on Cultural Awareness

no code implementations • 23 May 2023 • Binwei Yao, Ming Jiang, Diyi Yang, Junjie Hu

Furthermore, we devise a novel evaluation metric that uses GPT-4 to assess the understandability of translations in a reference-free manner.

Is Fine-tuning Needed? Pre-trained Language Models Are Near Perfect for Out-of-Domain Detection

1 code implementation • 22 May 2023 • Rheeya Uppaal, Junjie Hu, Yixuan Li

Fine-tuning pre-trained language models has been the de facto procedure for deriving out-of-distribution (OOD) detectors with respect to in-distribution (ID) data.

Explicit Attention-Enhanced Fusion for RGB-Thermal Perception Tasks

1 code implementation • 28 Mar 2023 • Mingjian Liang, Junjie Hu, Chenyu Bao, Hua Feng, Fuqin Deng, Tin Lun Lam

Specifically, we consider the following cases: (i) both RGB and thermal data generate discriminative features, (ii) only one of the two types of data does, and (iii) neither does.

Ranked #2 on Thermal Image Segmentation on Noisy RS RGB-T Dataset

Lifelong-MonoDepth: Lifelong Learning for Multi-Domain Monocular Metric Depth Estimation

1 code implementation • 9 Mar 2023 • Junjie Hu, Chenyou Fan, Liguang Zhou, Qing Gao, Honghai Liu, Tin Lun Lam

With the rapid advancements in autonomous driving and robot navigation, there is a growing demand for lifelong learning models capable of estimating metric (absolute) depth.

ConTEXTual Net: A Multimodal Vision-Language Model for Segmentation of Pneumothorax

1 code implementation • 2 Mar 2023 • Zachary Huemann, Xin Tie, Junjie Hu, Tyler J. Bradshaw

ConTEXTual Net was trained on the CANDID-PTX dataset, consisting of 3,196 positive cases of pneumothorax with segmentation annotations from 6 different physicians, as well as clinical radiology reports.

Domain-adapted large language models for classifying nuclear medicine reports

no code implementations • 1 Mar 2023 • Zachary Huemann, Changhee Lee, Junjie Hu, Steve Y. Cho, Tyler Bradshaw

Domain adaptation improved the performance of large language models in interpreting nuclear medicine text reports.

Attentional Graph Convolutional Network for Structure-aware Audio-Visual Scene Classification

no code implementations • 31 Dec 2022 • Liguang Zhou, Yuhongze Zhou, Xiaonan Qi, Junjie Hu, Tin Lun Lam, Yangsheng Xu

Then, to build multi-scale hierarchical information of input features, we utilize an attention fusion mechanism to aggregate features from multiple layers of the backbone network.

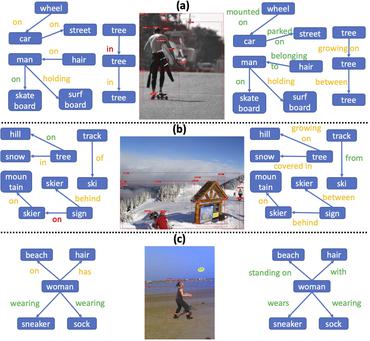

Peer Learning for Unbiased Scene Graph Generation

no code implementations • 31 Dec 2022 • Liguang Zhou, Junjie Hu, Yuhongze Zhou, Tin Lun Lam, Yangsheng Xu

Unbiased scene graph generation (USGG) is a challenging task that requires predicting diverse and heavily imbalanced predicates between objects in an image.

Beyond Counting Datasets: A Survey of Multilingual Dataset Construction and Necessary Resources

no code implementations • 28 Nov 2022 • Xinyan Velocity Yu, Akari Asai, Trina Chatterjee, Junjie Hu, Eunsol Choi

While the NLP community is generally aware of resource disparities among languages, we lack research that quantifies the extent and types of such disparity.

Progressive Self-Distillation for Ground-to-Aerial Perception Knowledge Transfer

1 code implementation • 29 Aug 2022 • Junjie Hu, Chenyou Fan, Mete Ozay, Hua Feng, Yuan Gao, Tin Lun Lam

In this paper, we introduce the ground-to-aerial perception knowledge transfer and propose a progressive semi-supervised learning framework that enables drone perception using only labeled data of ground viewpoint and unlabeled data of flying viewpoints.

Dense Depth Distillation with Out-of-Distribution Simulated Images

no code implementations • 26 Aug 2022 • Junjie Hu, Chenyou Fan, Mete Ozay, Hualie Jiang, Tin Lun Lam

We study data-free knowledge distillation (KD) for monocular depth estimation (MDE), which learns a lightweight model for real-world depth perception tasks by compressing it from a trained teacher model while lacking training data in the target domain.

MIA 2022 Shared Task: Evaluating Cross-lingual Open-Retrieval Question Answering for 16 Diverse Languages

no code implementations • NAACL (MIA) 2022 • Akari Asai, Shayne Longpre, Jungo Kasai, Chia-Hsuan Lee, Rui Zhang, Junjie Hu, Ikuya Yamada, Jonathan H. Clark, Eunsol Choi

We present the results of the Workshop on Multilingual Information Access (MIA) 2022 Shared Task, evaluating cross-lingual open-retrieval question answering (QA) systems in 16 typologically diverse languages.

Local Byte Fusion for Neural Machine Translation

1 code implementation • 23 May 2022 • Makesh Narsimhan Sreedhar, Xiangpeng Wan, Yu Cheng, Junjie Hu

Subword tokenization schemes are the dominant technique used in current NLP models.

Utilizing Language-Image Pretraining for Efficient and Robust Bilingual Word Alignment

1 code implementation • 23 May 2022 • Tuan Dinh, Jy-yong Sohn, Shashank Rajput, Timothy Ossowski, Yifei Ming, Junjie Hu, Dimitris Papailiopoulos, Kangwook Lee

Word translation without parallel corpora has become feasible, rivaling the performance of supervised methods.

Deep Depth Completion from Extremely Sparse Data: A Survey

no code implementations • 11 May 2022 • Junjie Hu, Chenyu Bao, Mete Ozay, Chenyou Fan, Qing Gao, Honghai Liu, Tin Lun Lam

Depth completion aims to predict dense pixel-wise depth from an extremely sparse map captured by a depth sensor, e.g., LiDAR.

DEEP: DEnoising Entity Pre-training for Neural Machine Translation

no code implementations • ACL 2022 • Junjie Hu, Hiroaki Hayashi, Kyunghyun Cho, Graham Neubig

It has been shown that machine translation models usually generate poor translations for named entities that are infrequent in the training corpus.

Advanced Statistical Learning on Short Term Load Process Forecasting

no code implementations • 19 Oct 2021 • Junjie Hu, Brenda López Cabrera, Awdesch Melzer

Predictive information is fundamental to the risk and production management of electricity consumers.

FEANet: Feature-Enhanced Attention Network for RGB-Thermal Real-time Semantic Segmentation

1 code implementation • 18 Oct 2021 • Fuqin Deng, Hua Feng, Mingjian Liang, Hongmin Wang, Yong Yang, Yuan Gao, Junfeng Chen, Junjie Hu, Xiyue Guo, Tin Lun Lam

To better extract detailed spatial information, we propose a two-stage Feature-Enhanced Attention Network (FEANet) for the RGB-T semantic segmentation task.

Ranked #10 on Semantic Segmentation on FMB Dataset

GlobalWoZ: Globalizing MultiWoZ to Develop Multilingual Task-Oriented Dialogue Systems

1 code implementation • ACL 2022 • Bosheng Ding, Junjie Hu, Lidong Bing, Sharifah Mahani Aljunied, Shafiq Joty, Luo Si, Chunyan Miao

Much recent progress in task-oriented dialogue (ToD) systems has been driven by available annotation data across multiple domains for training.

PLNet: Plane and Line Priors for Unsupervised Indoor Depth Estimation

1 code implementation • 12 Oct 2021 • Hualie Jiang, Laiyan Ding, Junjie Hu, Rui Huang

Unsupervised learning of depth from indoor monocular videos is challenging as the artificial environment contains many textureless regions.

AfroMT: Pretraining Strategies and Reproducible Benchmarks for Translation of 8 African Languages

1 code implementation • EMNLP 2021 • Machel Reid, Junjie Hu, Graham Neubig, Yutaka Matsuo

Reproducible benchmarks are crucial in driving progress in machine translation research.

Learn2Agree: Fitting with Multiple Annotators without Objective Ground Truth

no code implementations • 8 Sep 2021 • Chongyang Wang, Yuan Gao, Chenyou Fan, Junjie Hu, Tin Lun Lam, Nicholas D. Lane, Nadia Bianchi-Berthouze

To address such issues, we propose a novel Learning to Agreement (Learn2Agree) framework to tackle the challenge of learning from multiple annotators without objective ground truth.

Networks of News and Cross-Sectional Returns

no code implementations • 12 Aug 2021 • Junjie Hu, Wolfgang Karl Härdle

We uncover networks from news articles to study cross-sectional stock returns.

Phrase-level Active Learning for Neural Machine Translation

no code implementations • WMT (EMNLP) 2021 • Junjie Hu, Graham Neubig

Neural machine translation (NMT) is sensitive to domain shift.

Boosting Light-Weight Depth Estimation Via Knowledge Distillation

2 code implementations • 13 May 2021 • Junjie Hu, Chenyou Fan, Hualie Jiang, Xiyue Guo, Yuan Gao, Xiangyong Lu, Tin Lun Lam

However, this KD process can be challenging and insufficient due to the large model capacity gap between the teacher and the student.

XTREME-R: Towards More Challenging and Nuanced Multilingual Evaluation

1 code implementation • EMNLP 2021 • Sebastian Ruder, Noah Constant, Jan Botha, Aditya Siddhant, Orhan Firat, Jinlan Fu, PengFei Liu, Junjie Hu, Dan Garrette, Graham Neubig, Melvin Johnson

While a sizeable gap to human-level performance remains, improvements have been easier to achieve in some tasks than in others.

Multilingual Multimodal Pre-training for Zero-Shot Cross-Lingual Transfer of Vision-Language Models

1 code implementation • NAACL 2021 • Po-Yao Huang, Mandela Patrick, Junjie Hu, Graham Neubig, Florian Metze, Alexander Hauptmann

Specifically, we focus on multilingual text-to-video search and propose a Transformer-based model that learns contextualized multilingual multimodal embeddings.

Semantic Histogram Based Graph Matching for Real-Time Multi-Robot Global Localization in Large Scale Environment

3 code implementations • 19 Oct 2020 • Xiyue Guo, Junjie Hu, Junfeng Chen, Fuqin Deng, Tin Lun Lam

The core problem of visual multi-robot simultaneous localization and mapping (MR-SLAM) is how to efficiently and accurately perform multi-robot global localization (MR-GL).

A Two-stage Unsupervised Approach for Low light Image Enhancement

no code implementations • 19 Oct 2020 • Junjie Hu, Xiyue Guo, Junfeng Chen, Guanqi Liang, Fuqin Deng, Tin Lun Lam

However, most of them suffer from the following problems: 1) the need for paired low-light and normal-light images for training, 2) poor performance on dark images, and 3) the amplification of noise.

Explicit Alignment Objectives for Multilingual Bidirectional Encoders

no code implementations • NAACL 2021 • Junjie Hu, Melvin Johnson, Orhan Firat, Aditya Siddhant, Graham Neubig

Pre-trained cross-lingual encoders such as mBERT (Devlin et al., 2019) and XLM-R (Conneau et al., 2020) have proven impressively effective at enabling transfer learning of NLP systems from high-resource languages to low-resource languages.

On Learning Language-Invariant Representations for Universal Machine Translation

no code implementations • ICML 2020 • Han Zhao, Junjie Hu, Andrej Risteski

The goal of universal machine translation is to learn to translate between any pair of languages, given a corpus of paired translated documents for a small subset of all pairs of languages.

TICO-19: the Translation Initiative for Covid-19

no code implementations • EMNLP (NLP-COVID19) 2020 • Antonios Anastasopoulos, Alessandro Cattelan, Zi-Yi Dou, Marcello Federico, Christian Federman, Dmitriy Genzel, Francisco Guzmán, Junjie Hu, Macduff Hughes, Philipp Koehn, Rosie Lazar, Will Lewis, Graham Neubig, Mengmeng Niu, Alp Öktem, Eric Paquin, Grace Tang, Sylwia Tur

Further, the team is converting the test and development data into translation memories (TMXs) that can be used by localizers from and to any of the languages.

Unsupervised Multimodal Neural Machine Translation with Pseudo Visual Pivoting

no code implementations • ACL 2020 • Po-Yao Huang, Junjie Hu, Xiaojun Chang, Alexander Hauptmann

In this paper, we investigate how to utilize visual content for disambiguation and to promote latent-space alignment in unsupervised MMT.

XTREME: A Massively Multilingual Multi-task Benchmark for Evaluating Cross-lingual Generalization

4 code implementations • 24 Mar 2020 • Junjie Hu, Sebastian Ruder, Aditya Siddhant, Graham Neubig, Orhan Firat, Melvin Johnson

However, these broad-coverage benchmarks have been mostly limited to English, and despite an increasing interest in multilingual models, a benchmark that enables the comprehensive evaluation of such methods on a diverse range of languages and tasks is still missing.

Risk of Bitcoin Market: Volatility, Jumps, and Forecasts

no code implementations • 11 Dec 2019 • Junjie Hu, Wolfgang Karl Härdle, Weiyu Kuo

Cryptocurrency, the most controversial and simultaneously the most interesting asset, has attracted many investors and speculators in recent years.

Analysis of Deep Networks for Monocular Depth Estimation Through Adversarial Attacks with Proposal of a Defense Method

no code implementations • 20 Nov 2019 • Junjie Hu, Takayuki Okatani

However, the prediction of saliency maps is itself vulnerable to the attacks, even though it is not the direct target of the attacks.

Domain Differential Adaptation for Neural Machine Translation

1 code implementation • WS 2019 • Zi-Yi Dou, Xinyi Wang, Junjie Hu, Graham Neubig

We then use these learned domain differentials to adapt models for the target task accordingly.

What Makes A Good Story? Designing Composite Rewards for Visual Storytelling

1 code implementation • 11 Sep 2019 • Junjie Hu, Yu Cheng, Zhe Gan, Jingjing Liu, Jianfeng Gao, Graham Neubig

Previous storytelling approaches mostly focused on optimizing traditional metrics such as BLEU, ROUGE and CIDEr.

Ranked #10 on Visual Storytelling on VIST

REO-Relevance, Extraness, Omission: A Fine-grained Evaluation for Image Captioning

1 code implementation • IJCNLP 2019 • Ming Jiang, Junjie Hu, Qiuyuan Huang, Lei Zhang, Jana Diesner, Jianfeng Gao

In this study, we present a fine-grained evaluation method REO for automatically measuring the performance of image captioning systems.

Handling Syntactic Divergence in Low-resource Machine Translation

1 code implementation • IJCNLP 2019 • Chunting Zhou, Xuezhe Ma, Junjie Hu, Graham Neubig

Despite impressive empirical successes of neural machine translation (NMT) on standard benchmarks, limited parallel data impedes the application of NMT models to many language pairs.

Unsupervised Domain Adaptation for Neural Machine Translation with Domain-Aware Feature Embeddings

1 code implementation • IJCNLP 2019 • Zi-Yi Dou, Junjie Hu, Antonios Anastasopoulos, Graham Neubig

The recent success of neural machine translation models relies on the availability of high-quality, in-domain data.

Domain Adaptation of Neural Machine Translation by Lexicon Induction

2 code implementations • ACL 2019 • Junjie Hu, Mengzhou Xia, Graham Neubig, Jaime Carbonell

It has been previously noted that neural machine translation (NMT) is very sensitive to domain shift.

A Hybrid Retrieval-Generation Neural Conversation Model

1 code implementation • 19 Apr 2019 • Liu Yang, Junjie Hu, Minghui Qiu, Chen Qu, Jianfeng Gao, W. Bruce Croft, Xiaodong Liu, Yelong Shen, Jingjing Liu

In this paper, we propose a hybrid neural conversation model that combines the merits of both response retrieval and generation methods.

Visualization of Convolutional Neural Networks for Monocular Depth Estimation

1 code implementation • ICCV 2019 • Junjie Hu, Yan Zhang, Takayuki Okatani

We formulate it as an optimization problem of identifying the smallest number of image pixels from which the CNN can estimate a depth map with the minimum difference from the estimate from the entire image.

compare-mt: A Tool for Holistic Comparison of Language Generation Systems

2 code implementations • NAACL 2019 • Graham Neubig, Zi-Yi Dou, Junjie Hu, Paul Michel, Danish Pruthi, Xinyi Wang, John Wieting

In this paper, we describe compare-mt, a tool for holistic analysis and comparison of the results of systems for language generation tasks such as machine translation.

The ARIEL-CMU Systems for LoReHLT18

no code implementations • 24 Feb 2019 • Aditi Chaudhary, Siddharth Dalmia, Junjie Hu, Xinjian Li, Austin Matthews, Aldrian Obaja Muis, Naoki Otani, Shruti Rijhwani, Zaid Sheikh, Nidhi Vyas, Xinyi Wang, Jiateng Xie, Ruochen Xu, Chunting Zhou, Peter J. Jansen, Yiming Yang, Lori Levin, Florian Metze, Teruko Mitamura, David R. Mortensen, Graham Neubig, Eduard Hovy, Alan W. black, Jaime Carbonell, Graham V. Horwood, Shabnam Tafreshi, Mona Diab, Efsun S. Kayi, Noura Farra, Kathleen McKeown

This paper describes the ARIEL-CMU submissions to the Low Resource Human Language Technologies (LoReHLT) 2018 evaluations for the tasks Machine Translation (MT), Entity Discovery and Linking (EDL), and detection of Situation Frames in Text and Speech (SF Text and Speech).

Contextual Encoding for Translation Quality Estimation

1 code implementation • WS 2018 • Junjie Hu, Wei-Cheng Chang, Yuexin Wu, Graham Neubig

In this paper, we propose a method to effectively encode the local and global contextual information for each target word using a three-part neural network approach.

Rapid Adaptation of Neural Machine Translation to New Languages

1 code implementation • EMNLP 2018 • Graham Neubig, Junjie Hu

This paper examines the problem of adapting neural machine translation systems to new, low-resourced languages (LRLs) as effectively and rapidly as possible.

Automatic Estimation of Simultaneous Interpreter Performance

1 code implementation • ACL 2018 • Craig Stewart, Nikolai Vogler, Junjie Hu, Jordan Boyd-Graber, Graham Neubig

Simultaneous interpretation, translation of the spoken word in real-time, is both highly challenging and physically demanding.

Revisiting Single Image Depth Estimation: Toward Higher Resolution Maps with Accurate Object Boundaries

4 code implementations • 23 Mar 2018 • Junjie Hu, Mete Ozay, Yan Zhang, Takayuki Okatani

Experimental results show that these two improvements attain higher accuracy than the current state of the art, owing to finer-resolution reconstruction, for example of small objects and object boundaries.

Ranked #60 on Monocular Depth Estimation on NYU-Depth V2

Principled Hybrids of Generative and Discriminative Domain Adaptation

no code implementations • ICLR 2018 • Han Zhao, Zhenyao Zhu, Junjie Hu, Adam Coates, Geoff Gordon

This provides a very general way to interpolate between generative and discriminative extremes through different choices of priors.

Structural Embedding of Syntactic Trees for Machine Comprehension

no code implementations • EMNLP 2017 • Rui Liu, Junjie Hu, Wei Wei, Zi Yang, Eric Nyberg

Deep neural networks for machine comprehension typically utilize only word or character embeddings without explicitly taking advantage of structured linguistic information such as constituency trees and dependency trees.

Ranked #40 on Question Answering on SQuAD1.1 dev

Semi-Supervised QA with Generative Domain-Adaptive Nets

no code implementations • ACL 2017 • Zhilin Yang, Junjie Hu, Ruslan Salakhutdinov, William W. Cohen

In this framework, we train a generative model to generate questions based on the unlabeled text, and combine model-generated questions with human-generated questions for training question answering models.

Words or Characters? Fine-grained Gating for Reading Comprehension

1 code implementation • 6 Nov 2016 • Zhilin Yang, Bhuwan Dhingra, Ye Yuan, Junjie Hu, William W. Cohen, Ruslan Salakhutdinov

Previous work combines word-level and character-level representations using concatenation or scalar weighting, which is suboptimal for high-level tasks like reading comprehension.

Ranked #50 on Question Answering on SQuAD1.1 dev

Learning Lexical Entries for Robotic Commands using Crowdsourcing

no code implementations • 8 Sep 2016 • Junjie Hu, Jean Oh, Anatole Gershman

Robotic commands in natural language usually contain various spatial descriptions that are semantically similar but syntactically different.