Search Results for author: Klaus-Robert Müller

Found 139 papers, 57 papers with code

Molecular relaxation by reverse diffusion with time step prediction

1 code implementation • 16 Apr 2024 • Khaled Kahouli, Stefaan Simon Pierre Hessmann, Klaus-Robert Müller, Shinichi Nakajima, Stefan Gugler, Niklas Wolf Andreas Gebauer

As a remedy, we propose MoreRed, molecular relaxation by reverse diffusion, a conceptually novel and purely statistical approach where non-equilibrium structures are treated as noisy instances of their corresponding equilibrium states.

XpertAI: uncovering model strategies for sub-manifolds

no code implementations • 12 Mar 2024 • Simon Letzgus, Klaus-Robert Müller, Grégoire Montavon

In regression, explanations need to be precisely formulated to address specific user queries (e.g., distinguishing between 'Why is the output above 0?'

Manipulating Feature Visualizations with Gradient Slingshots

1 code implementation • 11 Jan 2024 • Dilyara Bareeva, Marina M.-C. Höhne, Alexander Warnecke, Lukas Pirch, Klaus-Robert Müller, Konrad Rieck, Kirill Bykov

Deep Neural Networks (DNNs) are capable of learning complex and versatile representations; however, the semantic nature of the learned concepts remains unknown.

RudolfV: A Foundation Model by Pathologists for Pathologists

no code implementations • 8 Jan 2024 • Jonas Dippel, Barbara Feulner, Tobias Winterhoff, Simon Schallenberg, Gabriel Dernbach, Andreas Kunft, Stephan Tietz, Timo Milbich, Simon Heinke, Marie-Lisa Eich, Julika Ribbat-Idel, Rosemarie Krupar, Philipp Jurmeister, David Horst, Lukas Ruff, Klaus-Robert Müller, Frederick Klauschen, Maximilian Alber

Histopathology plays a central role in clinical medicine and biomedical research.

Getting aligned on representational alignment

no code implementations • 18 Oct 2023 • Ilia Sucholutsky, Lukas Muttenthaler, Adrian Weller, Andi Peng, Andreea Bobu, Been Kim, Bradley C. Love, Erin Grant, Iris Groen, Jascha Achterberg, Joshua B. Tenenbaum, Katherine M. Collins, Katherine L. Hermann, Kerem Oktar, Klaus Greff, Martin N. Hebart, Nori Jacoby, Qiuyi Zhang, Raja Marjieh, Robert Geirhos, Sherol Chen, Simon Kornblith, Sunayana Rane, Talia Konkle, Thomas P. O'Connell, Thomas Unterthiner, Andrew K. Lampinen, Klaus-Robert Müller, Mariya Toneva, Thomas L. Griffiths

Finally, we lay out open problems in representational alignment where progress can benefit all three of these fields.

Insightful analysis of historical sources at scales beyond human capabilities using unsupervised Machine Learning and XAI

no code implementations • 13 Oct 2023 • Oliver Eberle, Jochen Büttner, Hassan El-Hajj, Grégoire Montavon, Klaus-Robert Müller, Matteo Valleriani

An ML-based analysis of these tables helps to unveil important facets of the spatio-temporal evolution of knowledge and innovation in the field of mathematical astronomy in the period, as taught at European universities.

From Peptides to Nanostructures: A Euclidean Transformer for Fast and Stable Machine Learned Force Fields

1 code implementation • 21 Sep 2023 • J. Thorben Frank, Oliver T. Unke, Klaus-Robert Müller, Stefan Chmiela

Recent years have seen vast progress in the development of machine learned force fields (MLFFs) based on ab-initio reference calculations.

Set Learning for Accurate and Calibrated Models

1 code implementation • 5 Jul 2023 • Lukas Muttenthaler, Robert A. Vandermeulen, Qiuyi Zhang, Thomas Unterthiner, Klaus-Robert Müller

Model overconfidence and poor calibration are common in machine learning and difficult to account for when applying standard empirical risk minimization.

An XAI framework for robust and transparent data-driven wind turbine power curve models

1 code implementation • 19 Apr 2023 • Simon Letzgus, Klaus-Robert Müller

Alongside this paper, we publish a Python implementation of the presented framework and hope this can guide researchers and practitioners alike toward training, selecting and utilizing more transparent and robust data-driven wind turbine power curve models.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

Preemptively Pruning Clever-Hans Strategies in Deep Neural Networks

no code implementations • 12 Apr 2023 • Lorenz Linhardt, Klaus-Robert Müller, Grégoire Montavon

In this paper, we demonstrate that acceptance of explanations by the user is not a guarantee for a machine learning model to be robust against Clever Hans effects, which may remain undetected.

Mark My Words: Dangers of Watermarked Images in ImageNet

no code implementations • 9 Mar 2023 • Kirill Bykov, Klaus-Robert Müller, Marina M.-C. Höhne

The utilization of pre-trained networks, especially those trained on ImageNet, has become a common practice in Computer Vision.

Disentangled Explanations of Neural Network Predictions by Finding Relevant Subspaces

no code implementations • 30 Dec 2022 • Pattarawat Chormai, Jan Herrmann, Klaus-Robert Müller, Grégoire Montavon

Explanations often take the form of a heatmap identifying input features (e.g., pixels) that are relevant to the model's decision.

Reconstructing Kernel-based Machine Learning Force Fields with Super-linear Convergence

1 code implementation • 24 Dec 2022 • Stefan Blücher, Klaus-Robert Müller, Stefan Chmiela

Kernel machines have sustained continuous progress in the field of quantum chemistry.

Shortcomings of Top-Down Randomization-Based Sanity Checks for Evaluations of Deep Neural Network Explanations

no code implementations • CVPR 2023 • Alexander Binder, Leander Weber, Sebastian Lapuschkin, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

To address shortcomings of this test, we start by observing an experimental gap in the ranking of explanation methods between randomization-based sanity checks [1] and model output faithfulness measures (e.g., [25]).

Algorithmic Differentiation for Automated Modeling of Machine Learned Force Fields

1 code implementation • 25 Aug 2022 • Niklas Frederik Schmitz, Klaus-Robert Müller, Stefan Chmiela

Reconstructing force fields (FFs) from atomistic simulation data is a challenge since accurate data can be highly expensive.

Self-Supervised Training with Autoencoders for Visual Anomaly Detection

no code implementations • 23 Jun 2022 • Alexander Bauer, Shinichi Nakajima, Klaus-Robert Müller

We focus on a specific use case in anomaly detection where the distribution of normal samples is supported by a lower-dimensional manifold.

Diffeomorphic Counterfactuals with Generative Models

1 code implementation • 10 Jun 2022 • Ann-Kathrin Dombrowski, Jan E. Gerken, Klaus-Robert Müller, Pan Kessel

Counterfactuals can explain classification decisions of neural networks in a human interpretable way.

DORA: Exploring Outlier Representations in Deep Neural Networks

1 code implementation • 9 Jun 2022 • Kirill Bykov, Mayukh Deb, Dennis Grinwald, Klaus-Robert Müller, Marina M.-C. Höhne

Deep Neural Networks (DNNs) excel at learning complex abstractions within their internal representations.

So3krates: Equivariant attention for interactions on arbitrary length-scales in molecular systems

1 code implementation • 28 May 2022 • J. Thorben Frank, Oliver T. Unke, Klaus-Robert Müller

The application of machine learning methods in quantum chemistry has enabled the study of numerous chemical phenomena, which are computationally intractable with traditional ab-initio methods.

Exposing Outlier Exposure: What Can Be Learned From Few, One, and Zero Outlier Images

1 code implementation • 23 May 2022 • Philipp Liznerski, Lukas Ruff, Robert A. Vandermeulen, Billy Joe Franks, Klaus-Robert Müller, Marius Kloft

We find that standard classifiers and semi-supervised one-class methods trained to discern between normal samples and relatively few random natural images are able to outperform the current state of the art on an established AD benchmark with ImageNet.

Ranked #1 on Anomaly Detection on One-class CIFAR-10 (using extra training data)

Accurate Machine Learned Quantum-Mechanical Force Fields for Biomolecular Simulations

no code implementations • 17 May 2022 • Oliver T. Unke, Martin Stöhr, Stefan Ganscha, Thomas Unterthiner, Hartmut Maennel, Sergii Kashubin, Daniel Ahlin, Michael Gastegger, Leonardo Medrano Sandonas, Alexandre Tkatchenko, Klaus-Robert Müller

Molecular dynamics (MD) simulations allow atomistic insights into chemical and biological processes.

Automatic Identification of Chemical Moieties

no code implementations • 30 Mar 2022 • Jonas Lederer, Michael Gastegger, Kristof T. Schütt, Michael Kampffmeyer, Klaus-Robert Müller, Oliver T. Unke

In recent years, the prediction of quantum mechanical observables with machine learning methods has become increasingly popular.

XAI for Transformers: Better Explanations through Conservative Propagation

1 code implementation • 15 Feb 2022 • Ameen Ali, Thomas Schnake, Oliver Eberle, Grégoire Montavon, Klaus-Robert Müller, Lior Wolf

Transformers have become an important workhorse of machine learning, with numerous applications.

Automated Dissipation Control for Turbulence Simulation with Shell Models

no code implementations • 7 Jan 2022 • Ann-Kathrin Dombrowski, Klaus-Robert Müller, Wolf Christian Müller

The application of machine learning (ML) techniques, especially neural networks, has seen tremendous success at processing images and language.

Super-resolution in Molecular Dynamics Trajectory Reconstruction with Bi-Directional Neural Networks

no code implementations • 2 Jan 2022 • Ludwig Winkler, Klaus-Robert Müller, Huziel E. Sauceda

Molecular dynamics simulations are a cornerstone of science, enabling investigations that range from a system's thermodynamics to the analysis of intricate molecular interactions.

Toward Explainable AI for Regression Models

1 code implementation • 21 Dec 2021 • Simon Letzgus, Patrick Wagner, Jonas Lederer, Wojciech Samek, Klaus-Robert Müller, Grégoire Montavon

In addition to the impressive predictive power of machine learning (ML) models, more recently, explanation methods have emerged that enable an interpretation of complex non-linear learning models such as deep neural networks.

Scrutinizing XAI using linear ground-truth data with suppressor variables

1 code implementation • 14 Nov 2021 • Rick Wilming, Céline Budding, Klaus-Robert Müller, Stefan Haufe

It has been demonstrated that some saliency methods can highlight features that have no statistical association with the prediction target (suppressor variables).

Explainable Artificial Intelligence (XAI)

Feature Importance

Efficient Hierarchical Bayesian Inference for Spatio-temporal Regression Models in Neuroimaging

1 code implementation • NeurIPS 2021 • Ali Hashemi, Yijing Gao, Chang Cai, Sanjay Ghosh, Klaus-Robert Müller, Srikantan S. Nagarajan, Stefan Haufe

Several problems in neuroimaging and beyond require inference on the parameters of multi-task sparse hierarchical regression models.

Evaluating deep transfer learning for whole-brain cognitive decoding

1 code implementation • 1 Nov 2021 • Armin W. Thomas, Ulman Lindenberger, Wojciech Samek, Klaus-Robert Müller

Here, we systematically evaluate TL for the application of DL models to the decoding of cognitive states (e.g., viewing images of faces or houses) from whole-brain functional Magnetic Resonance Imaging (fMRI) data.

Inverse design of 3d molecular structures with conditional generative neural networks

1 code implementation • 10 Sep 2021 • Niklas W. A. Gebauer, Michael Gastegger, Stefaan S. P. Hessmann, Klaus-Robert Müller, Kristof T. Schütt

The rational design of molecules with desired properties is a long-standing challenge in chemistry.

Explaining Bayesian Neural Networks

no code implementations • 23 Aug 2021 • Kirill Bykov, Marina M.-C. Höhne, Adelaida Creosteanu, Klaus-Robert Müller, Frederick Klauschen, Shinichi Nakajima, Marius Kloft

Bayesian approaches such as Bayesian Neural Networks (BNNs) so far have a limited form of transparency (model transparency) already built-in through their prior weight distribution, but notably, they lack explanations of their predictions for given instances.

On the Robustness of Pretraining and Self-Supervision for a Deep Learning-based Analysis of Diabetic Retinopathy

no code implementations • 25 Jun 2021 • Vignesh Srinivasan, Nils Strodthoff, Jackie Ma, Alexander Binder, Klaus-Robert Müller, Wojciech Samek

Our results indicate that models initialized from ImageNet pretraining report a significant increase in performance, generalization and robustness to image distortions.

Software for Dataset-wide XAI: From Local Explanations to Global Insights with Zennit, CoRelAy, and ViRelAy

3 code implementations • 24 Jun 2021 • Christopher J. Anders, David Neumann, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin

Deep Neural Networks (DNNs) are known to be strong predictors, but their prediction strategies can rarely be understood.

Explainable artificial intelligence

Explainable Artificial Intelligence (XAI)

Learning Domain Invariant Representations by Joint Wasserstein Distance Minimization

1 code implementation • 9 Jun 2021 • Léo Andeol, Yusei Kawakami, Yuichiro Wada, Takafumi Kanamori, Klaus-Robert Müller, Grégoire Montavon

However, common ML losses do not give strong guarantees on how consistently the ML model performs for different domains, in particular, whether the model performs well on a domain at the expense of its performance on another domain.

BIGDML: Towards Exact Machine Learning Force Fields for Materials

no code implementations • 8 Jun 2021 • Huziel E. Sauceda, Luis E. Gálvez-González, Stefan Chmiela, Lauro Oliver Paz-Borbón, Klaus-Robert Müller, Alexandre Tkatchenko

Machine-learning force fields (MLFF) should be accurate, computationally and data efficient, and applicable to molecules, materials, and interfaces thereof.

SE(3)-equivariant prediction of molecular wavefunctions and electronic densities

no code implementations • NeurIPS 2021 • Oliver T. Unke, Mihail Bogojeski, Michael Gastegger, Mario Geiger, Tess Smidt, Klaus-Robert Müller

Machine learning has enabled the prediction of quantum chemical properties with high accuracy and efficiency, making it possible to bypass computationally costly ab initio calculations.

SpookyNet: Learning Force Fields with Electronic Degrees of Freedom and Nonlocal Effects

no code implementations • 1 May 2021 • Oliver T. Unke, Stefan Chmiela, Michael Gastegger, Kristof T. Schütt, Huziel E. Sauceda, Klaus-Robert Müller

Machine-learned force fields (ML-FFs) combine the accuracy of ab initio methods with the efficiency of conventional force fields.

Towards Robust Explanations for Deep Neural Networks

no code implementations • 18 Dec 2020 • Ann-Kathrin Dombrowski, Christopher J. Anders, Klaus-Robert Müller, Pan Kessel

Explanation methods shed light on the decision process of black-box classifiers such as deep neural networks.

Machine learning of solvent effects on molecular spectra and reactions

1 code implementation • 28 Oct 2020 • Michael Gastegger, Kristof T. Schütt, Klaus-Robert Müller

We employ FieldSchNet to study the influence of solvent effects on molecular spectra and a Claisen rearrangement reaction.

Machine Learning Force Fields

no code implementations • 14 Oct 2020 • Oliver T. Unke, Stefan Chmiela, Huziel E. Sauceda, Michael Gastegger, Igor Poltavsky, Kristof T. Schütt, Alexandre Tkatchenko, Klaus-Robert Müller

In recent years, the use of Machine Learning (ML) in computational chemistry has enabled numerous advances previously out of reach due to the computational complexity of traditional electronic-structure methods.

A Unifying Review of Deep and Shallow Anomaly Detection

no code implementations • 24 Sep 2020 • Lukas Ruff, Jacob R. Kauffmann, Robert A. Vandermeulen, Grégoire Montavon, Wojciech Samek, Marius Kloft, Thomas G. Dietterich, Klaus-Robert Müller

Deep learning approaches to anomaly detection have recently improved the state of the art in detection performance on complex datasets such as large collections of images or text.

Langevin Cooling for Domain Translation

1 code implementation • 31 Aug 2020 • Vignesh Srinivasan, Klaus-Robert Müller, Wojciech Samek, Shinichi Nakajima

Domain translation is the task of finding correspondence between two domains.

Fairwashing Explanations with Off-Manifold Detergent

1 code implementation • ICML 2020 • Christopher J. Anders, Plamen Pasliev, Ann-Kathrin Dombrowski, Klaus-Robert Müller, Pan Kessel

Explanation methods promise to make black-box classifiers more transparent.

Explainable Deep One-Class Classification

2 code implementations • ICLR 2021 • Philipp Liznerski, Lukas Ruff, Robert A. Vandermeulen, Billy Joe Franks, Marius Kloft, Klaus-Robert Müller

Deep one-class classification variants for anomaly detection learn a mapping that concentrates nominal samples in feature space causing anomalies to be mapped away.

Ranked #5 on Anomaly Detection on One-class ImageNet-30 (using extra training data)

The Clever Hans Effect in Anomaly Detection

no code implementations • 18 Jun 2020 • Jacob Kauffmann, Lukas Ruff, Grégoire Montavon, Klaus-Robert Müller

The 'Clever Hans' effect occurs when the learned model produces correct predictions based on the 'wrong' features.

Anomaly Detection

Explainable Artificial Intelligence (XAI)

How Much Can I Trust You? -- Quantifying Uncertainties in Explaining Neural Networks

1 code implementation • 16 Jun 2020 • Kirill Bykov, Marina M.-C. Höhne, Klaus-Robert Müller, Shinichi Nakajima, Marius Kloft

Explainable AI (XAI) aims to provide interpretations for predictions made by learning machines, such as deep neural networks, in order to make the machines more transparent for the user and, furthermore, trustworthy for applications in, e.g., safety-critical areas.

Higher-Order Explanations of Graph Neural Networks via Relevant Walks

no code implementations • 5 Jun 2020 • Thomas Schnake, Oliver Eberle, Jonas Lederer, Shinichi Nakajima, Kristof T. Schütt, Klaus-Robert Müller, Grégoire Montavon

In this paper, we show that GNNs can in fact be naturally explained using higher-order expansions, i.e., by identifying groups of edges that jointly contribute to the prediction.

Rethinking Assumptions in Deep Anomaly Detection

1 code implementation • 30 May 2020 • Lukas Ruff, Robert A. Vandermeulen, Billy Joe Franks, Klaus-Robert Müller, Marius Kloft

Though anomaly detection (AD) can be viewed as a classification problem (nominal vs. anomalous) it is usually treated in an unsupervised manner since one typically does not have access to, or it is infeasible to utilize, a dataset that sufficiently characterizes what it means to be "anomalous."

Ensemble Learning of Coarse-Grained Molecular Dynamics Force Fields with a Kernel Approach

no code implementations • 4 May 2020 • Jiang Wang, Stefan Chmiela, Klaus-Robert Müller, Frank Noé, Cecilia Clementi

Using ensemble learning and stratified sampling, we propose a 2-layer training scheme that enables GDML to learn an effective coarse-grained model.

Risk Estimation of SARS-CoV-2 Transmission from Bluetooth Low Energy Measurements

no code implementations • 22 Apr 2020 • Felix Sattler, Jackie Ma, Patrick Wagner, David Neumann, Markus Wenzel, Ralf Schäfer, Wojciech Samek, Klaus-Robert Müller, Thomas Wiegand

Digital contact tracing approaches based on Bluetooth low energy (BLE) have the potential to efficiently contain and delay outbreaks of infectious diseases such as the ongoing SARS-CoV-2 pandemic.

Automatic Identification of Types of Alterations in Historical Manuscripts

no code implementations • 20 Mar 2020 • David Lassner, Anne Baillot, Sergej Dogadov, Klaus-Robert Müller, Shinichi Nakajima

In addition to the findings based on the digital scholarly edition Berlin Intellectuals, we present a general framework for the analysis of text genesis that can be used in the context of other digital resources representing document variants.

Explaining Deep Neural Networks and Beyond: A Review of Methods and Applications

no code implementations • 17 Mar 2020 • Wojciech Samek, Grégoire Montavon, Sebastian Lapuschkin, Christopher J. Anders, Klaus-Robert Müller

With the broader and highly successful usage of machine learning in industry and the sciences, there has been a growing demand for Explainable AI.

Building and Interpreting Deep Similarity Models

1 code implementation • 11 Mar 2020 • Oliver Eberle, Jochen Büttner, Florian Kräutli, Klaus-Robert Müller, Matteo Valleriani, Grégoire Montavon

Many learning algorithms such as kernel machines, nearest neighbors, clustering, or anomaly detection, are based on the concept of 'distance' or 'similarity'.

Autonomous robotic nanofabrication with reinforcement learning

1 code implementation • 27 Feb 2020 • Philipp Leinen, Malte Esders, Kristof T. Schütt, Christian Wagner, Klaus-Robert Müller, F. Stefan Tautz

Here, we present a strategy to work around both obstacles, and demonstrate autonomous robotic nanofabrication by manipulating single molecules.

Forecasting Industrial Aging Processes with Machine Learning Methods

no code implementations • 5 Feb 2020 • Mihail Bogojeski, Simeon Sauer, Franziska Horn, Klaus-Robert Müller

Accurately predicting industrial aging processes makes it possible to schedule maintenance events further in advance, ensuring a cost-efficient and reliable operation of the plant.

Finding and Removing Clever Hans: Using Explanation Methods to Debug and Improve Deep Models

2 code implementations • 22 Dec 2019 • Christopher J. Anders, Leander Weber, David Neumann, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin

Based on a recent technique, Spectral Relevance Analysis, we propose the following technical contributions and resulting findings: (a) a scalable quantification of artifactual and poisoned classes where the machine learning models under study exhibit CH behavior, (b) several approaches denoted as Class Artifact Compensation (ClArC), which are able to effectively and significantly reduce a model's CH behavior.

Pruning by Explaining: A Novel Criterion for Deep Neural Network Pruning

1 code implementation • 18 Dec 2019 • Seul-Ki Yeom, Philipp Seegerer, Sebastian Lapuschkin, Alexander Binder, Simon Wiedemann, Klaus-Robert Müller, Wojciech Samek

The success of convolutional neural networks (CNNs) in various applications is accompanied by a significant increase in computation and parameter storage costs.

Explainable Artificial Intelligence (XAI)

Model Compression

Machine learning for molecular simulation

no code implementations • 7 Nov 2019 • Frank Noé, Alexandre Tkatchenko, Klaus-Robert Müller, Cecilia Clementi

Machine learning (ML) is transforming all areas of science.

Clustered Federated Learning: Model-Agnostic Distributed Multi-Task Optimization under Privacy Constraints

2 code implementations • 4 Oct 2019 • Felix Sattler, Klaus-Robert Müller, Wojciech Samek

Federated Learning (FL) is currently the most widely adopted framework for collaborative training of (deep) machine learning models under privacy constraints.

Towards Explainable Artificial Intelligence

no code implementations • 26 Sep 2019 • Wojciech Samek, Klaus-Robert Müller

Deep learning models are at the forefront of this development.

Explaining and Interpreting LSTMs

no code implementations • 25 Sep 2019 • Leila Arras, Jose A. Arjona-Medina, Michael Widrich, Grégoire Montavon, Michael Gillhofer, Klaus-Robert Müller, Sepp Hochreiter, Wojciech Samek

While neural networks have acted as a strong unifying force in the design of modern AI systems, the neural network architectures themselves remain highly heterogeneous due to the variety of tasks to be solved.

Resolving challenges in deep learning-based analyses of histopathological images using explanation methods

no code implementations • 15 Aug 2019 • Miriam Hägele, Philipp Seegerer, Sebastian Lapuschkin, Michael Bockmayr, Wojciech Samek, Frederick Klauschen, Klaus-Robert Müller, Alexander Binder

Deep learning has recently gained popularity in digital pathology due to its high prediction quality.

Deep Transfer Learning For Whole-Brain fMRI Analyses

no code implementations • 2 Jul 2019 • Armin W. Thomas, Klaus-Robert Müller, Wojciech Samek

Even further, the pre-trained DL model variant is already able to correctly decode 67.51% of the cognitive states from a test dataset with 100 individuals, when fine-tuned on a dataset of the size of only three subjects.

Explanations can be manipulated and geometry is to blame

2 code implementations • NeurIPS 2019 • Ann-Kathrin Dombrowski, Maximilian Alber, Christopher J. Anders, Marcel Ackermann, Klaus-Robert Müller, Pan Kessel

Explanation methods aim to make neural networks more trustworthy and interpretable.

From Clustering to Cluster Explanations via Neural Networks

no code implementations • 18 Jun 2019 • Jacob Kauffmann, Malte Esders, Lukas Ruff, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller

Cluster predictions of the obtained networks can then be quickly and accurately attributed to the input features.

Deep Semi-Supervised Anomaly Detection

7 code implementations • ICLR 2020 • Lukas Ruff, Robert A. Vandermeulen, Nico Görnitz, Alexander Binder, Emmanuel Müller, Klaus-Robert Müller, Marius Kloft

Deep approaches to anomaly detection have recently shown promising results over shallow methods on large and complex datasets.

Evaluating Recurrent Neural Network Explanations

1 code implementation • WS 2019 • Leila Arras, Ahmed Osman, Klaus-Robert Müller, Wojciech Samek

Recently, several methods have been proposed to explain the predictions of recurrent neural networks (RNNs), in particular of LSTMs.

Black-Box Decision based Adversarial Attack with Symmetric $\alpha$-stable Distribution

no code implementations • 11 Apr 2019 • Vignesh Srinivasan, Ercan E. Kuruoglu, Klaus-Robert Müller, Wojciech Samek, Shinichi Nakajima

Many existing methods employ Gaussian random variables for exploring the data space to find the most adversarial (for attacking) or least adversarial (for defense) point.

Comment on "Solving Statistical Mechanics Using VANs": Introducing saVANt - VANs Enhanced by Importance and MCMC Sampling

no code implementations • 26 Mar 2019 • Kim Nicoli, Pan Kessel, Nils Strodthoff, Wojciech Samek, Klaus-Robert Müller, Shinichi Nakajima

In this comment on "Solving Statistical Mechanics Using Variational Autoregressive Networks" by Wu et al., we propose a subtle yet powerful modification of their approach.

Robust and Communication-Efficient Federated Learning from Non-IID Data

1 code implementation • 7 Mar 2019 • Felix Sattler, Simon Wiedemann, Klaus-Robert Müller, Wojciech Samek

Federated Learning allows multiple parties to jointly train a deep learning model on their combined data, without any of the participants having to reveal their local data to a centralized server.

Local Function Complexity for Active Learning via Mixture of Gaussian Processes

no code implementations • 27 Feb 2019 • Danny Panknin, Stefan Chmiela, Klaus-Robert Müller, Shinichi Nakajima

Inhomogeneities in real-world data, e.g., due to changes in the observation noise level or variations in the structural complexity of the source function, pose a unique set of challenges for statistical inference.

Unmasking Clever Hans Predictors and Assessing What Machines Really Learn

1 code implementation • 26 Feb 2019 • Sebastian Lapuschkin, Stephan Wäldchen, Alexander Binder, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller

Current learning machines have successfully solved hard application problems, reaching high accuracy and displaying seemingly "intelligent" behavior.

Molecular Force Fields with Gradient-Domain Machine Learning: Construction and Application to Dynamics of Small Molecules with Coupled Cluster Forces

1 code implementation • 19 Jan 2019 • Huziel E. Sauceda, Stefan Chmiela, Igor Poltavsky, Klaus-Robert Müller, Alexandre Tkatchenko

The analysis of sGDML molecular dynamics trajectories yields new qualitative insights into dynamics and spectroscopy of small molecules close to spectroscopic accuracy.

Chemical Physics • Computational Physics • Data Analysis, Statistics and Probability

Automating the search for a patent's prior art with a full text similarity search

1 code implementation • 10 Jan 2019 • Lea Helmers, Franziska Horn, Franziska Biegler, Tim Oppermann, Klaus-Robert Müller

The evaluation results show that our automated approach, besides accelerating the search process, also improves the search results for prior art with respect to their quality.

Entropy-Constrained Training of Deep Neural Networks

no code implementations • 18 Dec 2018 • Simon Wiedemann, Arturo Marban, Klaus-Robert Müller, Wojciech Samek

We propose a general framework for neural network compression that is motivated by the Minimum Description Length (MDL) principle.

sGDML: Constructing Accurate and Data Efficient Molecular Force Fields Using Machine Learning

1 code implementation • 12 Dec 2018 • Stefan Chmiela, Huziel E. Sauceda, Igor Poltavsky, Klaus-Robert Müller, Alexandre Tkatchenko

We present an optimized implementation of the recently proposed symmetric gradient domain machine learning (sGDML) model.

Computational Physics

Learning representations of molecules and materials with atomistic neural networks

no code implementations • 11 Dec 2018 • Kristof T. Schütt, Alexandre Tkatchenko, Klaus-Robert Müller

Deep Learning has been shown to learn efficient representations for structured data such as image, text or audio.

Analyzing Neuroimaging Data Through Recurrent Deep Learning Models

1 code implementation • 23 Oct 2018 • Armin W. Thomas, Hauke R. Heekeren, Klaus-Robert Müller, Wojciech Samek

We further demonstrate DeepLight's ability to study the fine-grained temporo-spatial variability of brain activity over sequences of single fMRI samples.

Explaining the Unique Nature of Individual Gait Patterns with Deep Learning

1 code implementation • 13 Aug 2018 • Fabian Horst, Sebastian Lapuschkin, Wojciech Samek, Klaus-Robert Müller, Wolfgang I. Schöllhorn

Machine learning (ML) techniques such as (deep) artificial neural networks (DNN) are very successfully solving a plethora of tasks and provide new predictive models for complex physical, chemical, biological and social systems.

iNNvestigate neural networks!

1 code implementation • 13 Aug 2018 • Maximilian Alber, Sebastian Lapuschkin, Philipp Seegerer, Miriam Hägele, Kristof T. Schütt, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller, Sven Dähne, Pieter-Jan Kindermans

The presented library iNNvestigate addresses this by providing a common interface and out-of-the-box implementation for many analysis methods, including the reference implementation for PatternNet and PatternAttribution as well as for LRP-methods.

AudioMNIST: Exploring Explainable Artificial Intelligence for Audio Analysis on a Simple Benchmark

3 code implementations • 9 Jul 2018 • Sören Becker, Johanna Vielhaben, Marcel Ackermann, Klaus-Robert Müller, Sebastian Lapuschkin, Wojciech Samek

Explainable Artificial Intelligence (XAI) is targeted at understanding how models perform feature selection and derive their classification decisions.

Unsupervised Detection and Explanation of Latent-class Contextual Anomalies

no code implementations • 29 Jun 2018 • Jacob Kauffmann, Grégoire Montavon, Luiz Alberto Lima, Shinichi Nakajima, Klaus-Robert Müller, Nico Görnitz

Detecting and explaining anomalies is a challenging effort.

Quantum-chemical insights from interpretable atomistic neural networks

no code implementations • 27 Jun 2018 • Kristof T. Schütt, Michael Gastegger, Alexandre Tkatchenko, Klaus-Robert Müller

With the rise of deep neural networks for quantum chemistry applications, there is a pressing need for architectures that, beyond delivering accurate predictions of chemical properties, are readily interpretable by researchers.

Understanding Patch-Based Learning by Explaining Predictions

no code implementations • 11 Jun 2018 • Christopher Anders, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller

We apply the deep Taylor / LRP technique to understand the deep network's classification decisions, and identify a "border effect": a tendency of the classifier to look mainly at the bordering frames of the input.

Robustifying Models Against Adversarial Attacks by Langevin Dynamics

no code implementations • 30 May 2018 • Vignesh Srinivasan, Arturo Marban, Klaus-Robert Müller, Wojciech Samek, Shinichi Nakajima

Adversarial attacks on deep learning models have compromised their performance considerably.

Towards computational fluorescence microscopy: Machine learning-based integrated prediction of morphological and molecular tumor profiles

no code implementations • 28 May 2018 • Alexander Binder, Michael Bockmayr, Miriam Hägele, Stephan Wienert, Daniel Heim, Katharina Hellweg, Albrecht Stenzinger, Laura Parlow, Jan Budczies, Benjamin Goeppert, Denise Treue, Manato Kotani, Masaru Ishii, Manfred Dietel, Andreas Hocke, Carsten Denkert, Klaus-Robert Müller, Frederick Klauschen

Recent advances in cancer research largely rely on new developments in microscopic or molecular profiling techniques offering a high level of detail with respect to either spatial or molecular features, but usually not both.

Compact and Computationally Efficient Representation of Deep Neural Networks

no code implementations • 27 May 2018 • Simon Wiedemann, Klaus-Robert Müller, Wojciech Samek

These new matrix formats have the novel property that their memory and algorithmic complexity are implicitly bounded by the entropy of the matrix, consequently implying that they are guaranteed to become more efficient as the entropy of the matrix is being reduced.

Sparse Binary Compression: Towards Distributed Deep Learning with minimal Communication

no code implementations • 22 May 2018 • Felix Sattler, Simon Wiedemann, Klaus-Robert Müller, Wojciech Samek

A major issue in distributed training is the limited communication bandwidth between contributing nodes or prohibitive communication cost in general.

Towards Explaining Anomalies: A Deep Taylor Decomposition of One-Class Models

no code implementations • 16 May 2018 • Jacob Kauffmann, Klaus-Robert Müller, Grégoire Montavon

The proposed One-Class DTD is applicable to a number of common distance-based SVM kernels and is able to reliably explain a wide set of data anomalies.

Towards Exact Molecular Dynamics Simulations with Machine-Learned Force Fields

1 code implementation • 26 Feb 2018 • Stefan Chmiela, Huziel E. Sauceda, Klaus-Robert Müller, Alexandre Tkatchenko

Molecular dynamics (MD) simulations employing classical force fields constitute the cornerstone of contemporary atomistic modeling in chemistry, biology, and materials science.

Chemical Physics

SchNet - a deep learning architecture for molecules and materials

5 code implementations • J. Chem. Phys. 2017 • Kristof T. Schütt, Huziel E. Sauceda, Pieter-Jan Kindermans, Alexandre Tkatchenko, Klaus-Robert Müller

Deep learning has led to a paradigm shift in artificial intelligence, including web, text and image search, speech recognition, as well as bioinformatics, with growing impact in chemical physics.

Ranked #6 on Formation Energy on Materials Project

Formation Energy • Chemical Physics • Materials Science

An Empirical Study on The Properties of Random Bases for Kernel Methods

no code implementations • NeurIPS 2017 • Maximilian Alber, Pieter-Jan Kindermans, Kristof Schütt, Klaus-Robert Müller, Fei Sha

Kernel machines as well as neural networks possess universal function approximation properties.

Optimizing for Measure of Performance in Max-Margin Parsing

no code implementations • 5 Sep 2017 • Alexander Bauer, Shinichi Nakajima, Nico Görnitz, Klaus-Robert Müller

Many statistical learning problems in the area of natural language processing, including sequence tagging, sequence segmentation and syntactic parsing, have been successfully approached by means of structured prediction methods.

Explainable Artificial Intelligence: Understanding, Visualizing and Interpreting Deep Learning Models

no code implementations • 28 Aug 2017 • Wojciech Samek, Thomas Wiegand, Klaus-Robert Müller

With the availability of large databases and recent improvements in deep learning methodology, the performance of AI systems is reaching or even exceeding the human level on an increasing number of complex tasks.

Explainable artificial intelligence

Explainable artificial intelligence

General Classification

+2

General Classification

+2

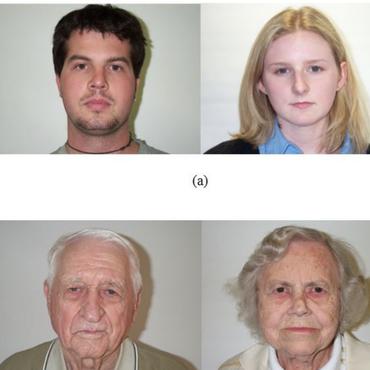

Understanding and Comparing Deep Neural Networks for Age and Gender Classification

no code implementations • 25 Aug 2017 • Sebastian Lapuschkin, Alexander Binder, Klaus-Robert Müller, Wojciech Samek

Recently, deep neural networks have demonstrated excellent performances in recognizing the age and gender on human face images.

Minimizing Trust Leaks for Robust Sybil Detection

no code implementations • ICML 2017 • János Höner, Shinichi Nakajima, Alexander Bauer, Klaus-Robert Müller, Nico Görnitz

Sybil detection is a crucial task to protect online social networks (OSNs) against intruders who try to manipulate automatic services provided by OSNs to their customers.

Discovering topics in text datasets by visualizing relevant words

1 code implementation • 18 Jul 2017 • Franziska Horn, Leila Arras, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

When dealing with large collections of documents, it is imperative to quickly get an overview of the texts' contents.

Exploring text datasets by visualizing relevant words

2 code implementations • 17 Jul 2017 • Franziska Horn, Leila Arras, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

When working with a new dataset, it is important to first explore and familiarize oneself with it, before applying any advanced machine learning algorithms.

SchNet: A continuous-filter convolutional neural network for modeling quantum interactions

5 code implementations • NeurIPS 2017 • Kristof T. Schütt, Pieter-Jan Kindermans, Huziel E. Sauceda, Stefan Chmiela, Alexandre Tkatchenko, Klaus-Robert Müller

Deep learning has the potential to revolutionize quantum chemistry as it is ideally suited to learn representations for structured data and speed up the exploration of chemical space.

Ranked #1 on Time Series on QM9

Methods for Interpreting and Understanding Deep Neural Networks

no code implementations • 24 Jun 2017 • Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller

This paper provides an entry point to the problem of interpreting a deep neural network model and explaining its predictions.

Explaining Recurrent Neural Network Predictions in Sentiment Analysis

1 code implementation • WS 2017 • Leila Arras, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

Recently, a technique called Layer-wise Relevance Propagation (LRP) was shown to deliver insightful explanations in the form of input space relevances for understanding feed-forward neural network classification decisions.

Learning how to explain neural networks: PatternNet and PatternAttribution

3 code implementations • ICLR 2018 • Pieter-Jan Kindermans, Kristof T. Schütt, Maximilian Alber, Klaus-Robert Müller, Dumitru Erhan, Been Kim, Sven Dähne

We show that these methods do not produce the theoretically correct explanation for a linear model.

Predicting Pairwise Relations with Neural Similarity Encoders

1 code implementation • 6 Feb 2017 • Franziska Horn, Klaus-Robert Müller

Matrix factorization is at the heart of many machine learning algorithms, for example, dimensionality reduction (e.g., kernel PCA) or recommender systems relying on collaborative filtering.

"What is Relevant in a Text Document?": An Interpretable Machine Learning Approach

1 code implementation • 23 Dec 2016 • Leila Arras, Franziska Horn, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

Text documents can be described by a number of abstract concepts such as semantic category, writing style, or sentiment.

Deep Neural Networks for No-Reference and Full-Reference Image Quality Assessment

2 code implementations • 6 Dec 2016 • Sebastian Bosse, Dominique Maniry, Klaus-Robert Müller, Thomas Wiegand, Wojciech Samek

We present a deep neural network-based approach to image quality assessment (IQA).

Wasserstein Training of Restricted Boltzmann Machines

no code implementations • NeurIPS 2016 • Grégoire Montavon, Klaus-Robert Müller, Marco Cuturi

This metric between observations can then be used to define the Wasserstein distance between the distribution induced by the Boltzmann machine on the one hand, and that given by the training sample on the other hand.

Interpreting the Predictions of Complex ML Models by Layer-wise Relevance Propagation

no code implementations • 24 Nov 2016 • Wojciech Samek, Grégoire Montavon, Alexander Binder, Sebastian Lapuschkin, Klaus-Robert Müller

Complex nonlinear models such as deep neural networks (DNNs) have become an important tool for image classification, speech recognition, natural language processing, and many other fields of application.

Investigating the influence of noise and distractors on the interpretation of neural networks

no code implementations • 22 Nov 2016 • Pieter-Jan Kindermans, Kristof Schütt, Klaus-Robert Müller, Sven Dähne

Understanding neural networks is becoming increasingly important.

Feature Importance Measure for Non-linear Learning Algorithms

1 code implementation • 22 Nov 2016 • Marina M.-C. Vidovic, Nico Görnitz, Klaus-Robert Müller, Marius Kloft

MFI is general and can be applied to any arbitrary learning machine (including kernel machines and deep learning).

By-passing the Kohn-Sham equations with machine learning

2 code implementations • 9 Sep 2016 • Felix Brockherde, Leslie Vogt, Li Li, Mark E. Tuckerman, Kieron Burke, Klaus-Robert Müller

Last year, at least 30,000 scientific papers used the Kohn-Sham scheme of density functional theory to solve electronic structure problems in a wide variety of scientific fields, ranging from materials science to biochemistry to astrophysics.

Language Detection For Short Text Messages In Social Media

no code implementations • 30 Aug 2016 • Ivana Balazevic, Mikio Braun, Klaus-Robert Müller

These approaches include the use of well-known classifiers such as SVM and logistic regression, a dictionary-based approach, and a probabilistic model based on modified Kneser-Ney smoothing.

Object Boundary Detection and Classification with Image-level Labels

no code implementations • 29 Jun 2016 • Jing Yu Koh, Wojciech Samek, Klaus-Robert Müller, Alexander Binder

We propose a novel strategy for solving this task, when pixel-level annotations are not available, performing it in an almost zero-shot manner by relying on conventional whole image neural net classifiers that were trained using large bounding boxes.

Explaining Predictions of Non-Linear Classifiers in NLP

1 code implementation • WS 2016 • Leila Arras, Franziska Horn, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

Layer-wise relevance propagation (LRP) is a recently proposed technique for explaining predictions of complex non-linear classifiers in terms of input variables.

Identifying individual facial expressions by deconstructing a neural network

no code implementations • 23 Jun 2016 • Farhad Arbabzadah, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

We further observe that the explanation method provides important insights into the nature of features of the base model, which allow one to assess the aptitude of the base model for a given transfer learning task.

Interpretable Deep Neural Networks for Single-Trial EEG Classification

no code implementations • 27 Apr 2016 • Irene Sturm, Sebastian Bach, Wojciech Samek, Klaus-Robert Müller

With LRP a new quality of high-resolution assessment of neural activity can be reached.

Layer-wise Relevance Propagation for Neural Networks with Local Renormalization Layers

no code implementations • 4 Apr 2016 • Alexander Binder, Grégoire Montavon, Sebastian Bach, Klaus-Robert Müller, Wojciech Samek

Layer-wise relevance propagation is a framework that decomposes the prediction of a deep neural network computed over a sample, e.g., an image, down to relevance scores for the single input dimensions of the sample, such as subpixels of an image.

Controlling Explanatory Heatmap Resolution and Semantics via Decomposition Depth

no code implementations • 21 Mar 2016 • Sebastian Bach, Alexander Binder, Klaus-Robert Müller, Wojciech Samek

We present an application of the Layer-wise Relevance Propagation (LRP) algorithm to state of the art deep convolutional neural networks and Fisher Vector classifiers to compare the image perception and prediction strategies of both classifiers with the use of visualized heatmaps.

Explaining NonLinear Classification Decisions with Deep Taylor Decomposition

4 code implementations • 8 Dec 2015 • Grégoire Montavon, Sebastian Bach, Alexander Binder, Wojciech Samek, Klaus-Robert Müller

Although our focus is on image classification, the method is applicable to a broad set of input data, learning tasks and network architectures.

Analyzing Classifiers: Fisher Vectors and Deep Neural Networks

no code implementations • CVPR 2016 • Sebastian Bach, Alexander Binder, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek

Fisher Vector classifiers and Deep Neural Networks (DNNs) are popular and successful algorithms for solving image classification problems.

Validity of time reversal for testing Granger causality

no code implementations • 25 Sep 2015 • Irene Winkler, Danny Panknin, Daniel Bartz, Klaus-Robert Müller, Stefan Haufe

Inferring causal interactions from observed data is a challenging problem, especially in the presence of measurement noise.

Evaluating the visualization of what a Deep Neural Network has learned

1 code implementation • 21 Sep 2015 • Wojciech Samek, Alexander Binder, Grégoire Montavon, Sebastian Bach, Klaus-Robert Müller

Our main result is that the recently proposed Layer-wise Relevance Propagation (LRP) algorithm qualitatively and quantitatively provides a better explanation of what made a DNN arrive at a particular classification decision than the sensitivity-based approach or the deconvolution method.

Wasserstein Training of Boltzmann Machines

no code implementations • 7 Jul 2015 • Grégoire Montavon, Klaus-Robert Müller, Marco Cuturi

The Boltzmann machine provides a useful framework to learn highly complex, multimodal and multiscale data distributions that occur in the real world.

Understanding Kernel Ridge Regression: Common behaviors from simple functions to density functionals

no code implementations • 16 Jan 2015 • Kevin Vu, John Snyder, Li Li, Matthias Rupp, Brandon F. Chen, Tarek Khelif, Klaus-Robert Müller, Kieron Burke

Accurate approximations to density functionals have recently been obtained via machine learning (ML).

Multi-Target Shrinkage

no code implementations • 5 Dec 2014 • Daniel Bartz, Johannes Höhne, Klaus-Robert Müller

For the sample mean and the sample covariance as specific instances, we derive conditions under which the optimality of MTS is applicable.

Learning with Algebraic Invariances, and the Invariant Kernel Trick

no code implementations • 28 Nov 2014 • Franz J. Király, Andreas Ziehe, Klaus-Robert Müller

When solving data analysis problems it is important to integrate prior knowledge and/or structural invariances.

Understanding Machine-learned Density Functionals

no code implementations • 4 Apr 2014 • Li Li, John C. Snyder, Isabelle M. Pelaschier, Jessica Huang, Uma-Naresh Niranjan, Paul Duncan, Matthias Rupp, Klaus-Robert Müller, Kieron Burke

Kernel ridge regression is used to approximate the kinetic energy of non-interacting fermions in a one-dimensional box as a functional of their density.

Robust Spatial Filtering with Beta Divergence

no code implementations • NeurIPS 2013 • Wojciech Samek, Duncan Blythe, Klaus-Robert Müller, Motoaki Kawanabe

The efficiency of Brain-Computer Interfaces (BCI) largely depends upon a reliable extraction of informative features from the high-dimensional EEG signal.

Generalizing Analytic Shrinkage for Arbitrary Covariance Structures

no code implementations • NeurIPS 2013 • Daniel Bartz, Klaus-Robert Müller

Analytic shrinkage is a statistical technique that offers a fast alternative to cross-validation for the regularization of covariance matrices and has appealing consistency properties.

Optical Character Recognition (OCR)

Multiple Kernel Learning for Brain-Computer Interfacing

no code implementations • 22 Oct 2013 • Wojciech Samek, Alexander Binder, Klaus-Robert Müller

Combining information from different sources is a common way to improve classification accuracy in Brain-Computer Interfacing (BCI).

Orbital-free Bond Breaking via Machine Learning

no code implementations • 7 Jun 2013 • John C. Snyder, Matthias Rupp, Katja Hansen, Leo Blooston, Klaus-Robert Müller, Kieron Burke

Machine learning is used to approximate the kinetic energy of one dimensional diatomics as a functional of the electron density.

Learning Invariant Representations of Molecules for Atomization Energy Prediction

no code implementations • NeurIPS 2012 • Grégoire Montavon, Katja Hansen, Siamac Fazli, Matthias Rupp, Franziska Biegler, Andreas Ziehe, Alexandre Tkatchenko, Anatole V. Lilienfeld, Klaus-Robert Müller

The accurate prediction of molecular energetics in chemical compound space is a crucial ingredient for rational compound design.

Transferring Subspaces Between Subjects in Brain-Computer Interfacing

no code implementations • 18 Sep 2012 • Wojciech Samek, Frank C. Meinecke, Klaus-Robert Müller

Compensating for changes between a subject's training and testing sessions in Brain-Computer Interfacing (BCI) is challenging but of great importance for robust BCI operation.

Regression for sets of polynomial equations

no code implementations • 20 Oct 2011 • Franz Johannes Király, Paul von Bünau, Jan Saputra Müller, Duncan Blythe, Frank Meinecke, Klaus-Robert Müller

We propose a method called ideal regression for approximating an arbitrary system of polynomial equations by a system of a particular type.

Layer-wise analysis of deep networks with Gaussian kernels

no code implementations • NeurIPS 2010 • Grégoire Montavon, Klaus-Robert Müller, Mikio L. Braun

Deep networks can potentially express a learning problem more efficiently than local learning machines.

Subject independent EEG-based BCI decoding

no code implementations • NeurIPS 2009 • Siamac Fazli, Cristian Grozea, Marton Danoczy, Benjamin Blankertz, Florin Popescu, Klaus-Robert Müller

In the quest to make Brain Computer Interfacing (BCI) more usable, dry electrodes have emerged that get rid of the initial 30 minutes required for placing an electrode cap.

Efficient and Accurate Lp-Norm Multiple Kernel Learning

no code implementations • NeurIPS 2009 • Marius Kloft, Ulf Brefeld, Pavel Laskov, Klaus-Robert Müller, Alexander Zien, Sören Sonnenburg

Previous approaches to multiple kernel learning (MKL) promote sparse kernel combinations and hence support interpretability.

Estimating vector fields using sparse basis field expansions

no code implementations • NeurIPS 2008 • Stefan Haufe, Vadim V. Nikulin, Andreas Ziehe, Klaus-Robert Müller, Guido Nolte

We introduce a novel framework for estimating vector fields using sparse basis field expansions (S-FLEX).

Playing Pinball with non-invasive BCI

no code implementations • NeurIPS 2008 • Matthias Krauledat, Konrad Grzeska, Max Sagebaum, Benjamin Blankertz, Carmen Vidaurre, Klaus-Robert Müller, Michael Schröder

Compared to invasive Brain-Computer Interfaces (BCI), non-invasive BCI systems based on Electroencephalogram (EEG) signals have not been applied successfully for complex control tasks.